How transferable are features in deep neural networks?

Quick links: paper | code | video of the NIPS 2014 talk (video on YouTube; slides as Keynote or PDF)

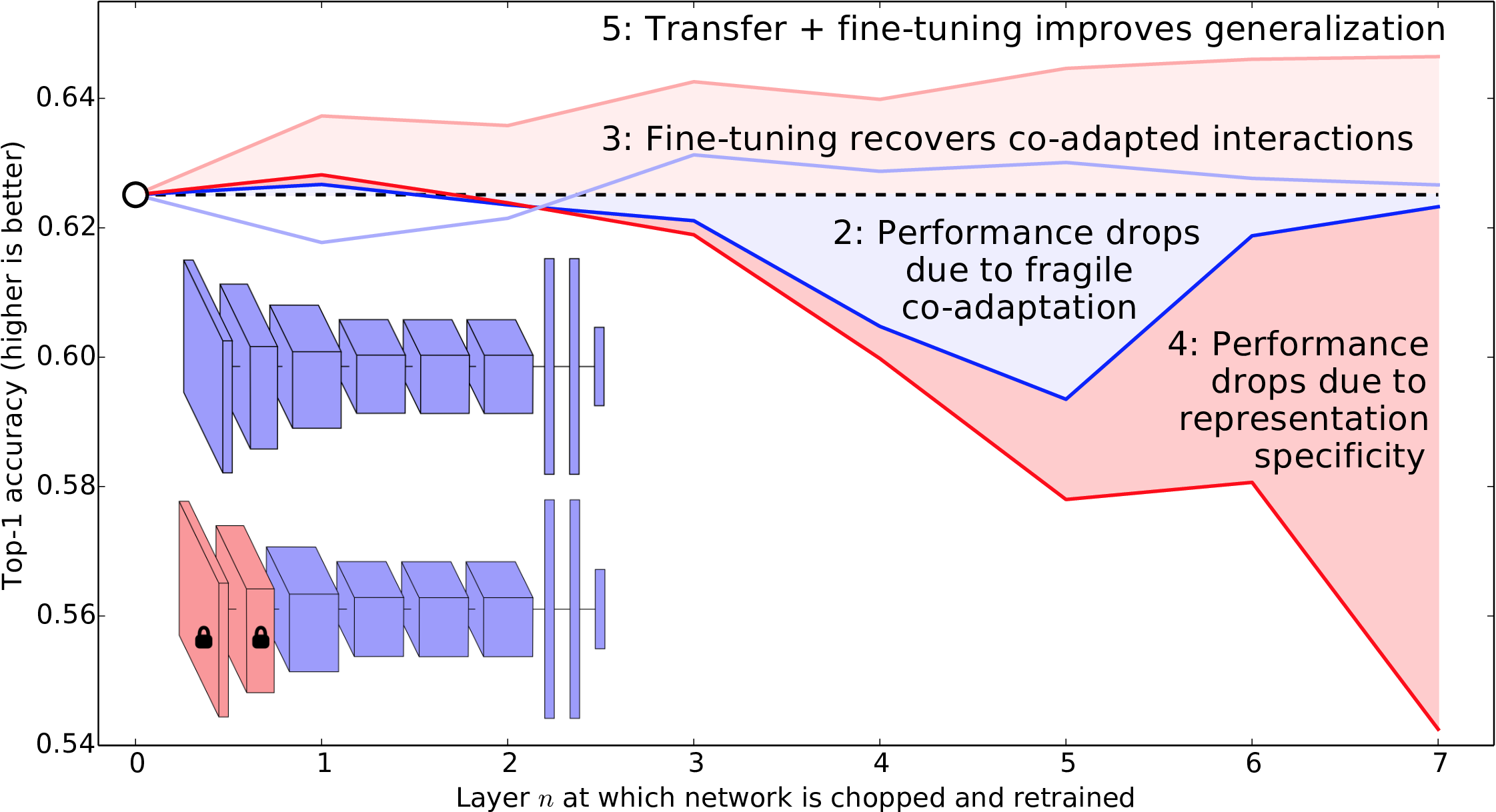

Many deep neural networks trained on natural images exhibit a curious phenomenon: they all learn roughly the same Gabor filters and color blobs on the first layer. These features seem to be general (useful for many datasets and tasks) rather than specific (useful for only one dataset and task). By the last layer, features must be task-specific, which prompts the question: how do features transition from general to specific throughout the network? In this paper, presented at NIPS 2014, we experimentally measure how general or specific the features in each layer are, which reveals how that transition unfolds, and we uncover a few other interesting results along the way.

Read more in the paper.

Posted November 10, 2014. Updated January 11, 2016.