Probabilistic Topic Models

David M. Blei

Department of Computer Science Princeton University

September 2, 2012

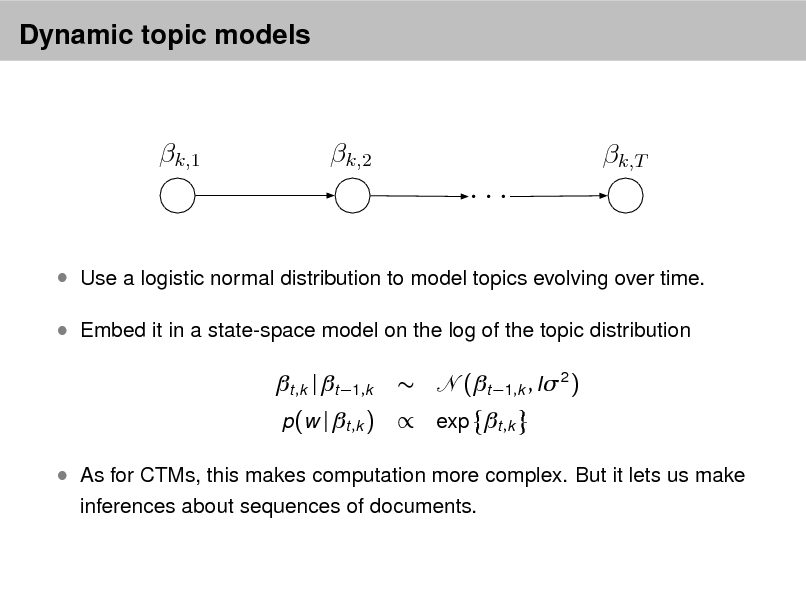

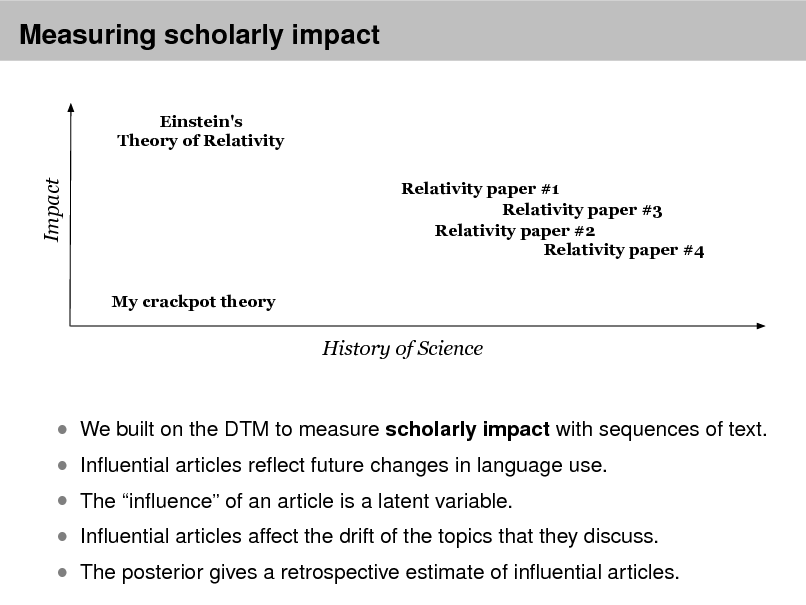

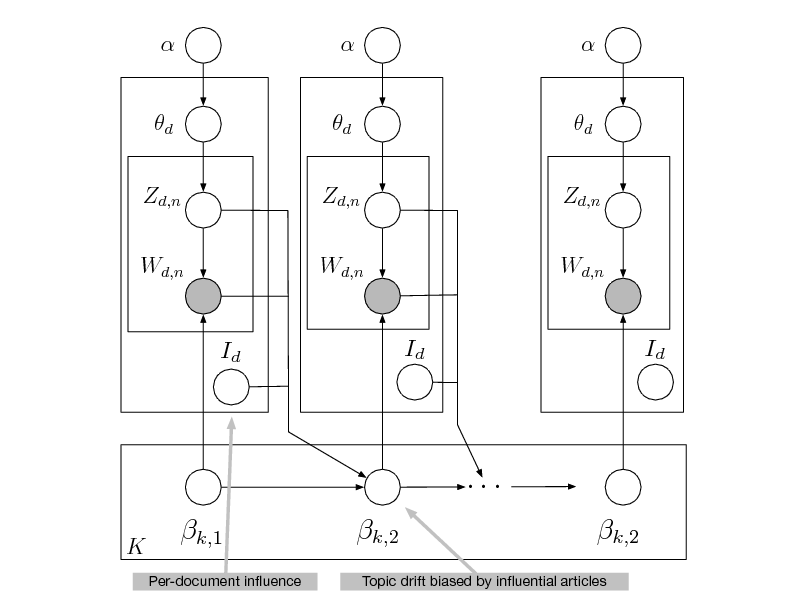

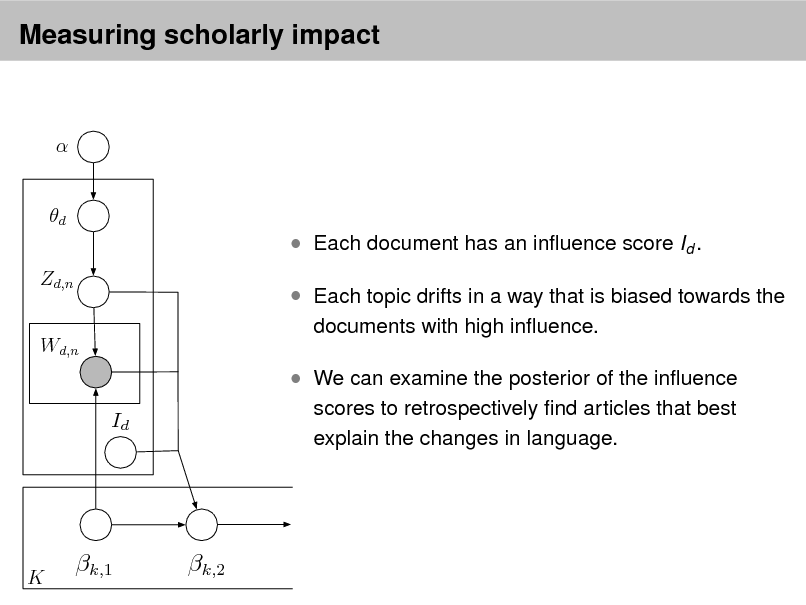

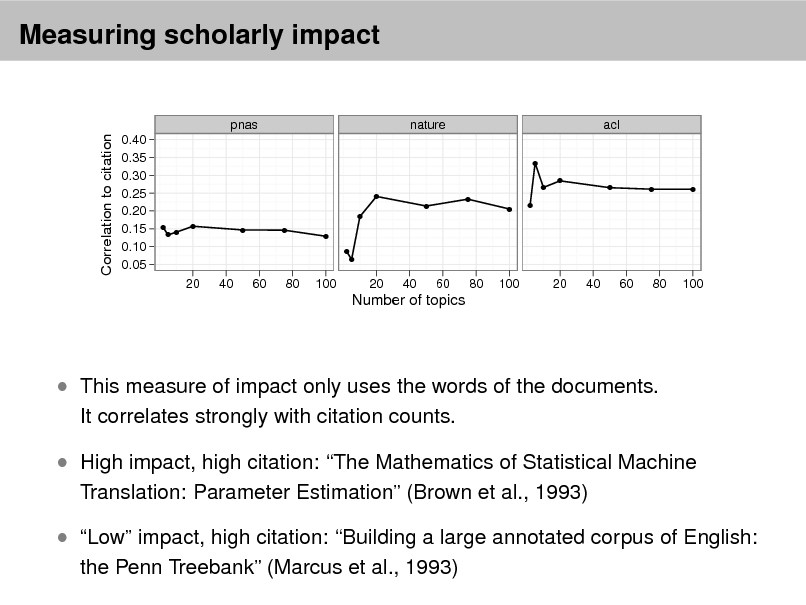

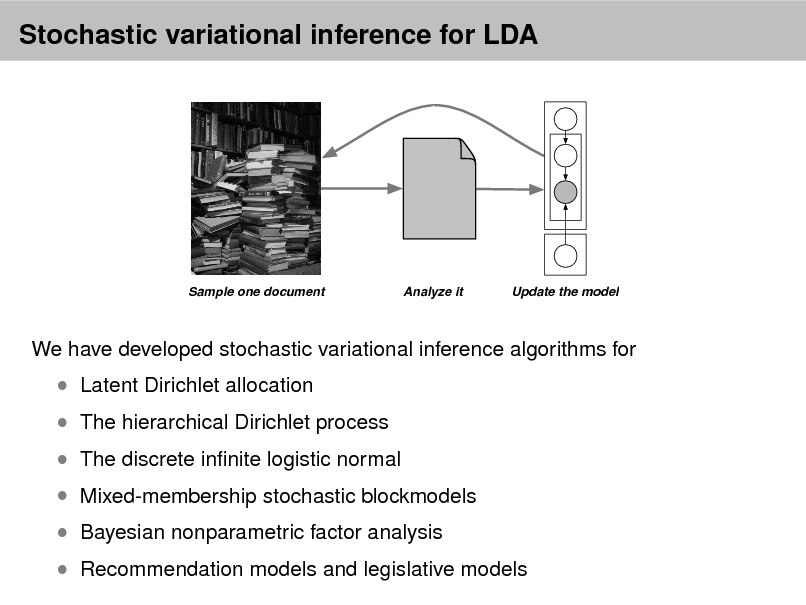

Probabilistic topic modeling provides a suite of tools for the unsupervised analysis of large collections of documents. Topic modeling algorithms can uncover the underlying themes of a collection and decompose its documents according to those themes. This analysis can be used for corpus exploration, document search, and a variety of prediction problems. My lectures will describe three aspects of topic modeling: Topic modeling assumptions Algorithms for computing with topic models Applications of topic models In (1), I will describe latent Dirichlet allocation (LDA), which is one of the simplest topic models, and then describe a variety of ways that we can build on it. These include dynamic topic models, correlated topic models, supervised topic models, author-topic models, bursty topic models, Bayesian nonparametric topic models, and others. I will also discuss some of the fundamental statistical ideas that are used in building topic models, such as distributions on the simplex, hierarchical Bayesian modeling, and models of mixed-membership. In (2), I will review how we compute with topic models. I will describe approximate posterior inference for directed graphical models using both sampling and variational inference, and I will discuss the practical issues and pitfalls in developing these algorithms for topic models. Finally, I will describe some of our most recent work on building algorithms that can scale to millions of documents and documents arriving in a stream. In (3), I will discuss applications of topic models. These include applications to images, music, social networks, and other data in which we hope to uncover hidden patterns. I will describe some of our recent work on adapting topic modeling algorithms to collaborative filtering, legislative modeling, and bibliometrics without citations. Finally, I will discuss some future directions and open research problems in topic models.

Scroll with j/k | | | Size

Probabilistic Topic Models

David M. Blei

Department of Computer Science Princeton University

September 2, 2012

1

Probabilistic topic models

As more information becomes available, it becomes more difcult to nd and discover what we need.

We need new tools to help us organize, search, and understand these vast amounts of information.

2

Probabilistic topic models

Topic modeling provides methods for automatically organizing, understanding, searching, and summarizing large electronic archives.

1 2 3

Discover the hidden themes that pervade the collection. Annotate the documents according to those themes. Use annotations to organize, summarize, search, form predictions.

3

Probabilistic topic models

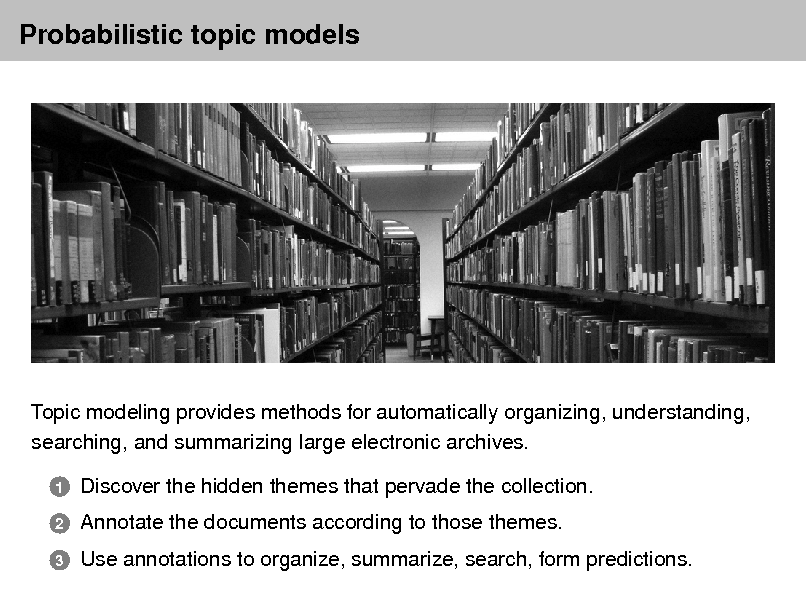

Genetics human genome dna genetic genes sequence gene molecular sequencing map information genetics mapping project sequences Evolution Disease evolution disease evolutionary host species bacteria organisms diseases life resistance origin bacterial biology new groups strains phylogenetic control living infectious diversity malaria group parasite new parasites two united common tuberculosis Computers computer models information data computers system network systems model parallel methods networks software new simulations

4

Probabilistic topic models

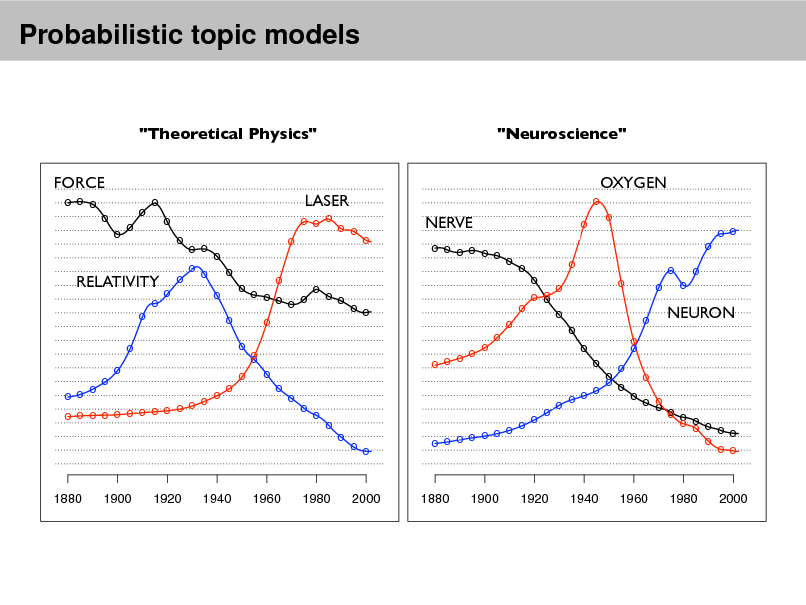

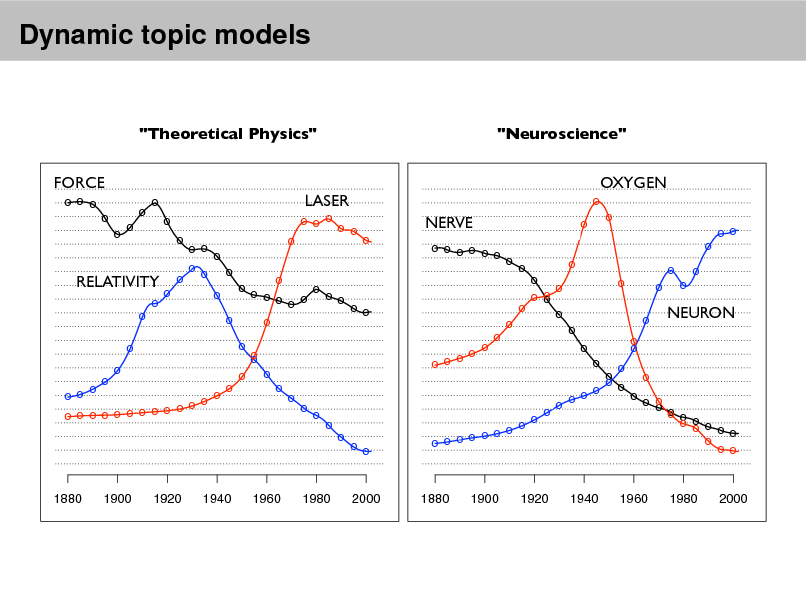

"Theoretical Physics" FORCE

o o o o o o o o o o o

"Neuroscience" OXYGEN

o

LASER

o o o o o

NERVE

o o o o o o o o o o

o

o o o o o o o o o o

o o o o o o o o RELATIVITY o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o

o o o o o o o

o o o o o o o o o o o o o o

NEURON

o o o o o o o o o o o o o o o o o o o o o o

o

o

o o

o o o o o o o o o

o

1880

1900

1920

1940

1960

1980

2000

1880

1900

1920

1940

1960

1980

2000

5

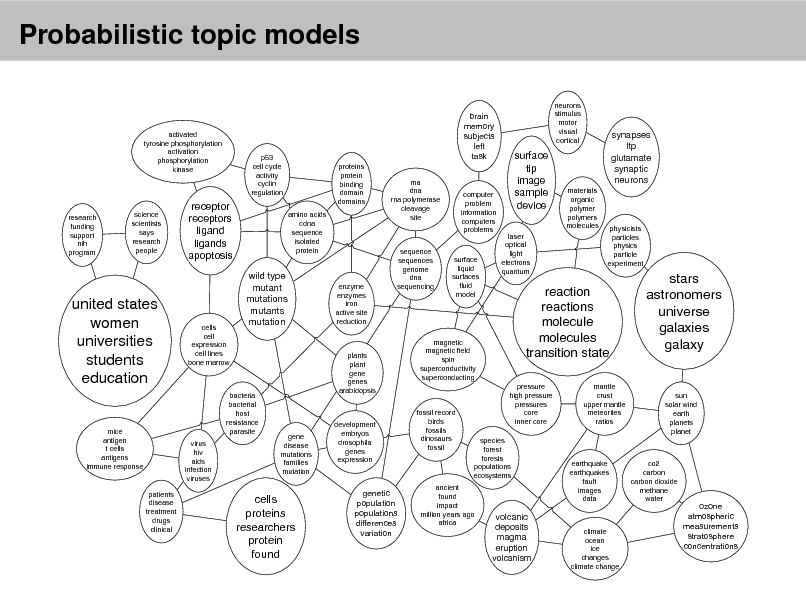

Probabilistic topic models

brain memory subjects left task

proteins protein binding domain domains amino acids cdna sequence isolated protein rna dna rna polymerase cleavage site neurons stimulus motor visual cortical

activated tyrosine phosphorylation activation phosphorylation kinase

p53 cell cycle activity cyclin regulation

research funding support nih program

science scientists says research people

receptor receptors ligand ligands apoptosis

wild type mutant mutations mutants mutation

computer problem information computers problems

surface tip image sample device

laser optical light electrons quantum

synapses ltp glutamate synaptic neurons

materials organic polymer polymers molecules

united states women universities students education

cells cell expression cell lines bone marrow

enzyme enzymes iron active site reduction

sequence sequences genome dna sequencing

surface liquid surfaces uid model

physicists particles physics particle experiment

mice antigen t cells antigens immune response

bacteria bacterial host resistance parasite virus hiv aids infection viruses patients disease treatment drugs clinical

plants plant gene genes arabidopsis

magnetic magnetic eld spin superconductivity superconducting

reaction reactions molecule molecules transition state

pressure high pressure pressures core inner core mantle crust upper mantle meteorites ratios

stars astronomers universe galaxies galaxy

gene disease mutations families mutation

development embryos drosophila genes expression

fossil record birds fossils dinosaurs fossil

sun solar wind earth planets planet

species forest forests populations ecosystems

cells proteins researchers protein found

genetic population populations differences variation

ancient found impact million years ago africa

earthquake earthquakes fault images data

co2 carbon carbon dioxide methane water

volcanic deposits magma eruption volcanism

climate ocean ice changes climate change

ozone atmospheric measurements stratosphere concentrations

6

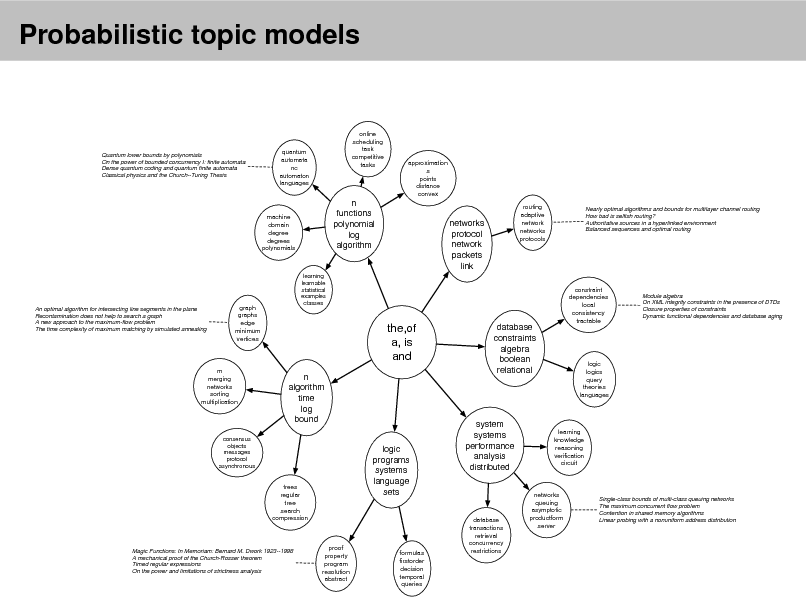

Probabilistic topic models

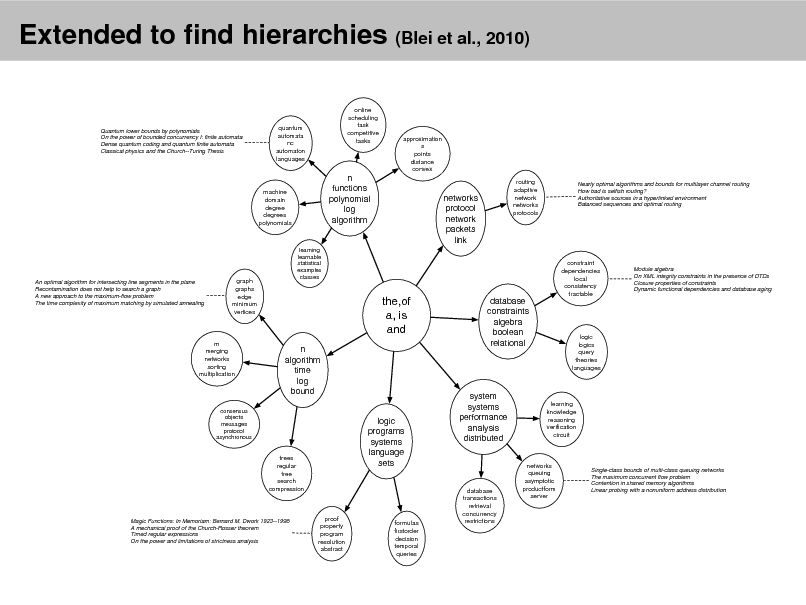

Quantum lower bounds by polynomials On the power of bounded concurrency I: finite automata Dense quantum coding and quantum finite automata Classical physics and the Church--Turing Thesis

quantum automata nc automaton languages

online scheduling task competitive tasks

approximation s points distance convex routing adaptive network networks protocols

Nearly optimal algorithms and bounds for multilayer channel routing How bad is selfish routing? Authoritative sources in a hyperlinked environment Balanced sequences and optimal routing

machine domain degree degrees polynomials

n functions polynomial log algorithm

networks protocol network packets link

An optimal algorithm for intersecting line segments in the plane Recontamination does not help to search a graph A new approach to the maximum-flow problem The time complexity of maximum matching by simulated annealing

graph graphs edge minimum vertices

learning learnable statistical examples classes

the,of a, is and

n algorithm time log bound logic programs systems language sets

m merging networks sorting multiplication

database constraints algebra boolean relational

constraint dependencies local consistency tractable

Module algebra On XML integrity constraints in the presence of DTDs Closure properties of constraints Dynamic functional dependencies and database aging

logic logics query theories languages

consensus objects messages protocol asynchronous

system systems performance analysis distributed

learning knowledge reasoning verication circuit

trees regular tree search compression

Magic Functions: In Memoriam: Bernard M. Dwork 1923--1998 A mechanical proof of the Church-Rosser theorem Timed regular expressions On the power and limitations of strictness analysis

proof property program resolution abstract

formulas rstorder decision temporal queries

database transactions retrieval concurrency restrictions

networks queuing asymptotic productform server

Single-class bounds of multi-class queuing networks The maximum concurrent flow problem Contention in shared memory algorithms Linear probing with a nonuniform address distribution

7

Probabilistic topic models

predicted caption: birds nest leaves branch tree

tain people

Automatic image annotation predicted caption: caption: Automatic predictedwaterpattern textile display predicted caption: tree image annotation leaves branch sky people market tree mountain people birds nest

p p

predicted caption: predicted caption: predicted caption: p predicted caption: predicted predicted caption: utomatic image mountainnest caption: flowers tree coralpredicted caption: image anno SCOTLAND WATER people marketWATER BUILDING e coral SKY WATER TREE sky water buildings people fish branch Automatic sky water textile display SKY patternbuildings people mountain s sky water tree mountain people annotation hills tree birds scotland water ocean leaves water tree predicted caption: MOUNTAIN PEOPLE sky water tree mountain people predicted caption:HILLS TREEpredicted caption: FLOWER PEOPLE WATER birds nest leaves branch tree people market pattern textile display

predicted caption: predicted caption: predicted caption: fish water ocean tree coral sky water buildings people mountain scotland water flowers hills tree Probabilistic modelsof text and images p.5/53 predicted predicted caption: predicted caption: caption: predicted caption: caption: predicted caption: predicted caption: predicted FISH WATERtree coral MARKET PATTERN tain peoplefish water ocean leaves branch treePEOPLE sky water pattern textile display water flowersleavestree sky water buildings peoplemountain people birds nest OCEAN people market tree mountain scotland BIRDS NEST TREE birds nest hills branch tree

pr pe

TREE CORAL

TEXTILE DISPLAY

BRANCH LEAVES

8

![Slide: Probabilistic topic models

Derek E. Wildman et al., Implications of Natural Selection in Shaping 99.4% Nonsynonymous DNA Identity between Humans and Chimpanzees: Enlarging Genus Homo, PNAS (2003) [178 citations]

0.030

0.025

Jared M. Diamond, Distributional Ecology of New Guinea Birds. Science (1973) [296 citations]

WeightedInfluence

0.020

0.015

William K. Gregory, The New Anthropogeny: Twenty-Five Stages of Vertebrate Evolution, from Silurian Chordate to Man, Science (1933) [3 citations]

0.010

0.005

0.000 1880 1900 1920 1940 1960 1980 2000

Year W. B. Scott, The Isthmus of Panama in Its Relation to the Animal Life of North and South America, Science (1916) [3 citations]](https://yosinski.com/mlss12/media/slides/MLSS-2012-Blei-Probabilistic-Topic-Models_009.png)

Probabilistic topic models

Derek E. Wildman et al., Implications of Natural Selection in Shaping 99.4% Nonsynonymous DNA Identity between Humans and Chimpanzees: Enlarging Genus Homo, PNAS (2003) [178 citations]

0.030

0.025

Jared M. Diamond, Distributional Ecology of New Guinea Birds. Science (1973) [296 citations]

WeightedInfluence

0.020

0.015

William K. Gregory, The New Anthropogeny: Twenty-Five Stages of Vertebrate Evolution, from Silurian Chordate to Man, Science (1933) [3 citations]

0.010

0.005

0.000 1880 1900 1920 1940 1960 1980 2000

Year W. B. Scott, The Isthmus of Panama in Its Relation to the Animal Life of North and South America, Science (1916) [3 citations]

9

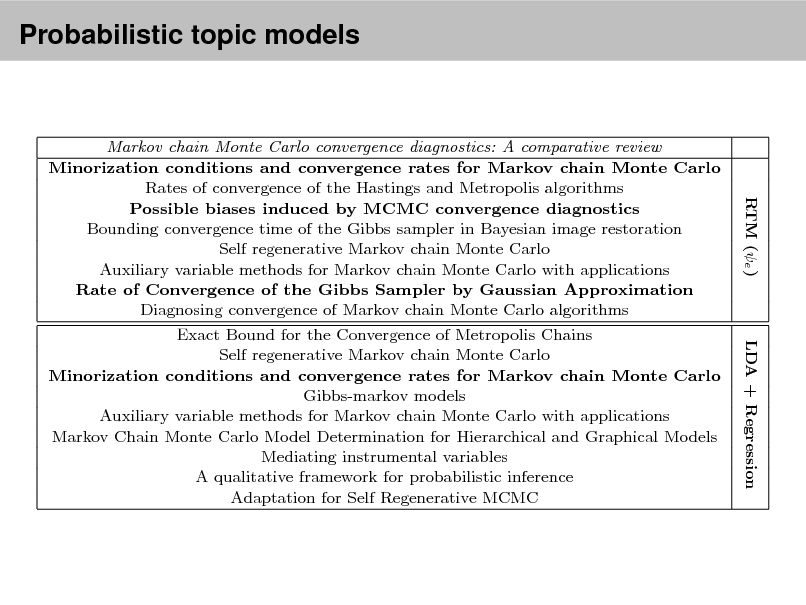

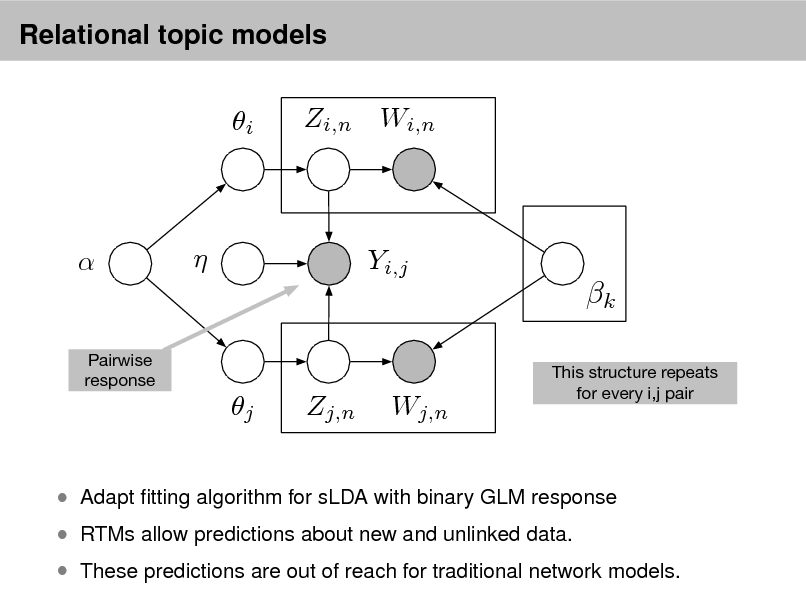

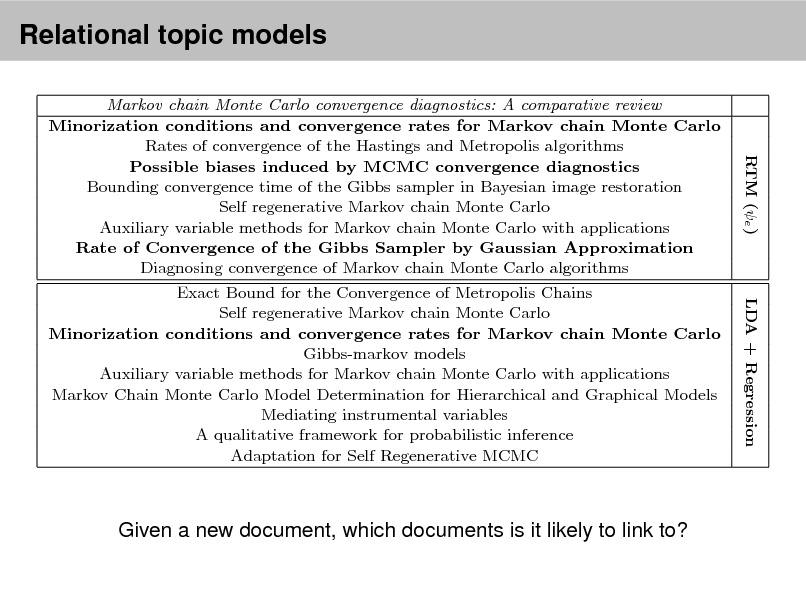

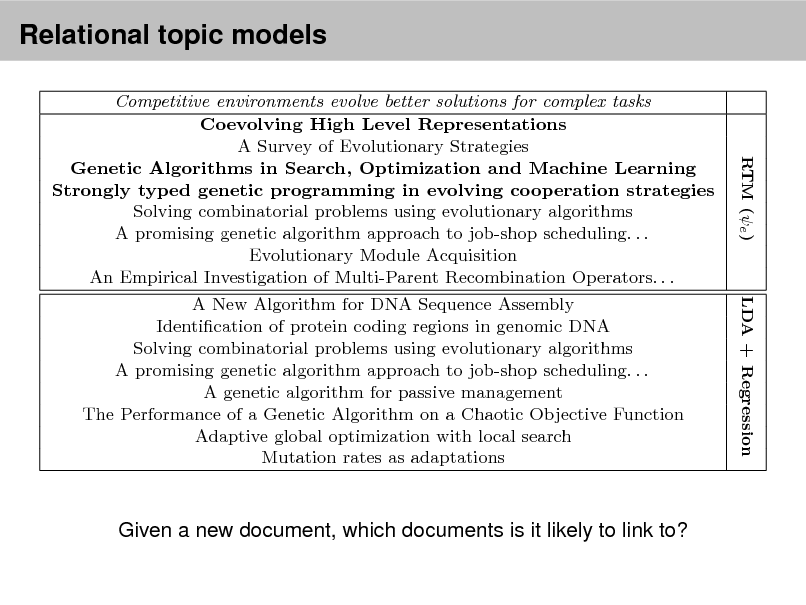

Probabilistic topicmade by RTM (e ) and LDA + Regression for two documents Top eight link predictions models

(italicized) from Cora. The models were t with 10 topics. Boldfaced titles indicate actual documents cited by or citing each document. Over the whole corpus, RTM improves precision over LDA + Regression by 80% when evaluated on the rst 20 documents retrieved. Markov chain Monte Carlo convergence diagnostics: A comparative review Minorization conditions and convergence rates for Markov chain Monte Carlo Rates of convergence of the Hastings and Metropolis algorithms Possible biases induced by MCMC convergence diagnostics Bounding convergence time of the Gibbs sampler in Bayesian image restoration Self regenerative Markov chain Monte Carlo Auxiliary variable methods for Markov chain Monte Carlo with applications Rate of Convergence of the Gibbs Sampler by Gaussian Approximation Diagnosing convergence of Markov chain Monte Carlo algorithms Exact Bound for the Convergence of Metropolis Chains Self regenerative Markov chain Monte Carlo Minorization conditions and convergence rates for Markov chain Monte Carlo Gibbs-markov models Auxiliary variable methods for Markov chain Monte Carlo with applications Markov Chain Monte Carlo Model Determination for Hierarchical and Graphical Models Mediating instrumental variables A qualitative framework for probabilistic inference Adaptation for Self Regenerative MCMC Competitive environments evolve better solutions for complex tasks Coevolving High Level Representations A Survey of Evolutionary Strategies

Table 2

RTM (e ) LDA + Regression

R

10

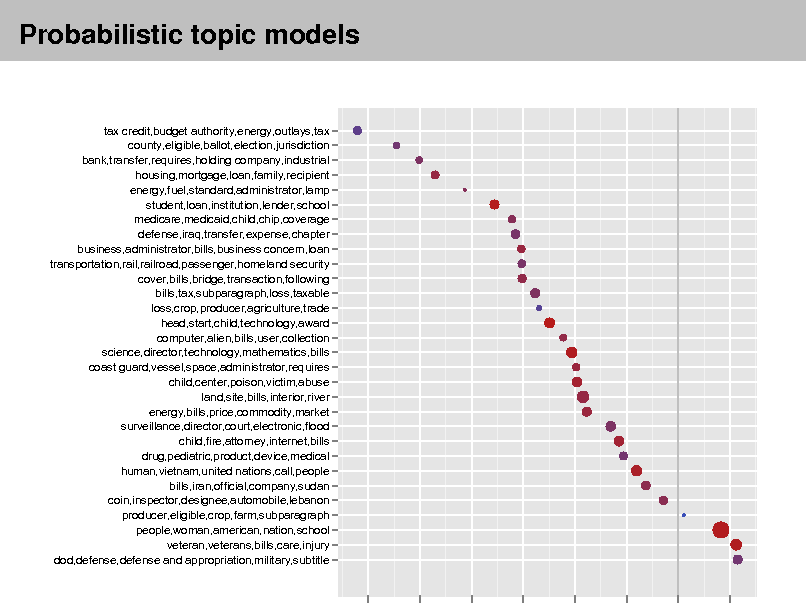

Probabilistic topic models

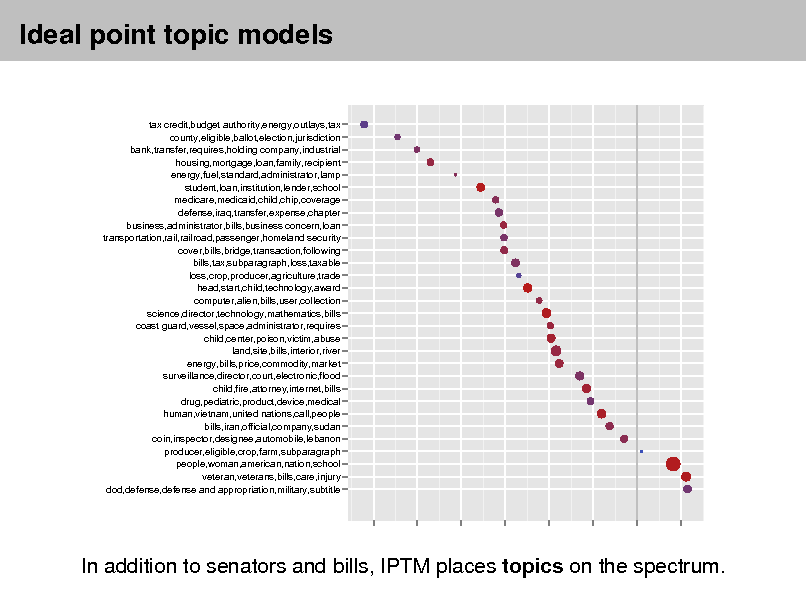

tax credit,budget authority,energy,outlays,tax county,eligible,ballot,election,jurisdiction bank,transfer,requires,holding company,industrial housing,mortgage,loan,family,recipient energy,fuel,standard,administrator,lamp student,loan,institution,lender,school medicare,medicaid,child,chip,coverage defense,iraq,transfer,expense,chapter business,administrator,bills,business concern,loan transportation,rail,railroad,passenger,homeland security cover,bills,bridge,transaction,following bills,tax,subparagraph,loss,taxable loss,crop,producer,agriculture,trade head,start,child,technology,award computer,alien,bills,user,collection science,director,technology,mathematics,bills coast guard,vessel,space,administrator,requires child,center,poison,victim,abuse land,site,bills,interior,river energy,bills,price,commodity,market surveillance,director,court,electronic,flood child,fire,attorney,internet,bills drug,pediatric,product,device,medical human,vietnam,united nations,call,people bills,iran,official,company,sudan coin,inspector,designee,automobile,lebanon producer,eligible,crop,farm,subparagraph people,woman,american,nation,school veteran,veterans,bills,care,injury dod,defense,defense and appropriation,military,subtitle

11

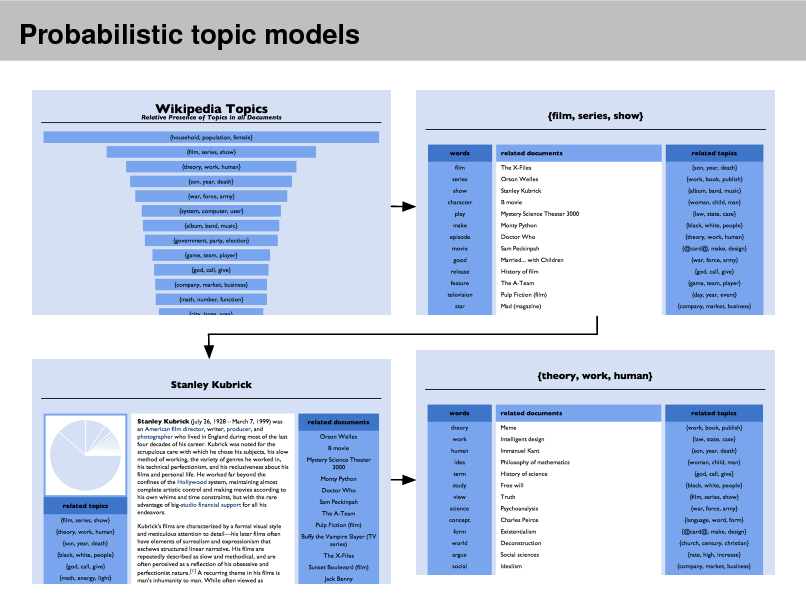

Probabilistic topic models

12

Probabilistic topic models

What are topic models?

What kinds of things can they do?

How do I compute with a topic model?

How do I evaluate and check a topic model? How can I learn more?

What are some unanswered questions in this eld?

13

![Slide: Probabilistic models

This is a case study in data analysis with probability models. Our agenda is to teach about this kind of analysis through topic models. Note: We are being Bayesian in this sense:

[By Bayesian inference,] I simply mean the method of statistical inference that draws conclusions by calculating conditional distributions of unknown quantities given (a) known quantities and (b) model specications. (Rubin, 1984)

(The Bayesian versus Frequentist debate is not relevant to this talk.)](https://yosinski.com/mlss12/media/slides/MLSS-2012-Blei-Probabilistic-Topic-Models_014.png)

Probabilistic models

This is a case study in data analysis with probability models. Our agenda is to teach about this kind of analysis through topic models. Note: We are being Bayesian in this sense:

[By Bayesian inference,] I simply mean the method of statistical inference that draws conclusions by calculating conditional distributions of unknown quantities given (a) known quantities and (b) model specications. (Rubin, 1984)

(The Bayesian versus Frequentist debate is not relevant to this talk.)

14

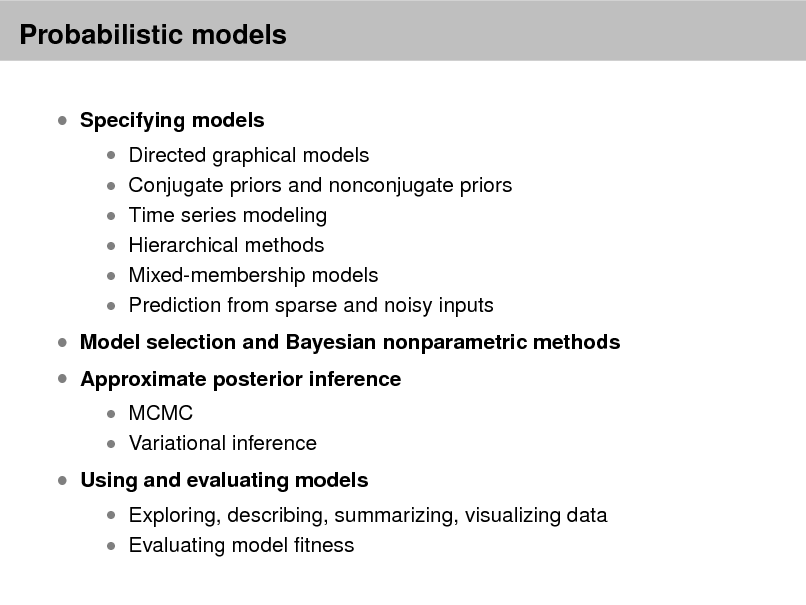

Probabilistic models

Specifying models

Directed graphical models Conjugate priors and nonconjugate priors Time series modeling Hierarchical methods

Mixed-membership models Prediction from sparse and noisy inputs

Model selection and Bayesian nonparametric methods Approximate posterior inference

MCMC Variational inference

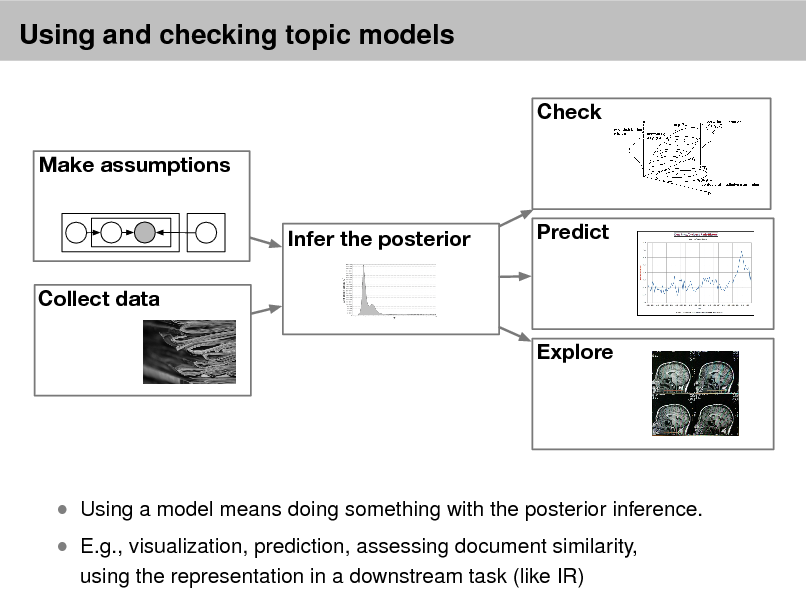

Using and evaluating models

Exploring, describing, summarizing, visualizing data Evaluating model tness

15

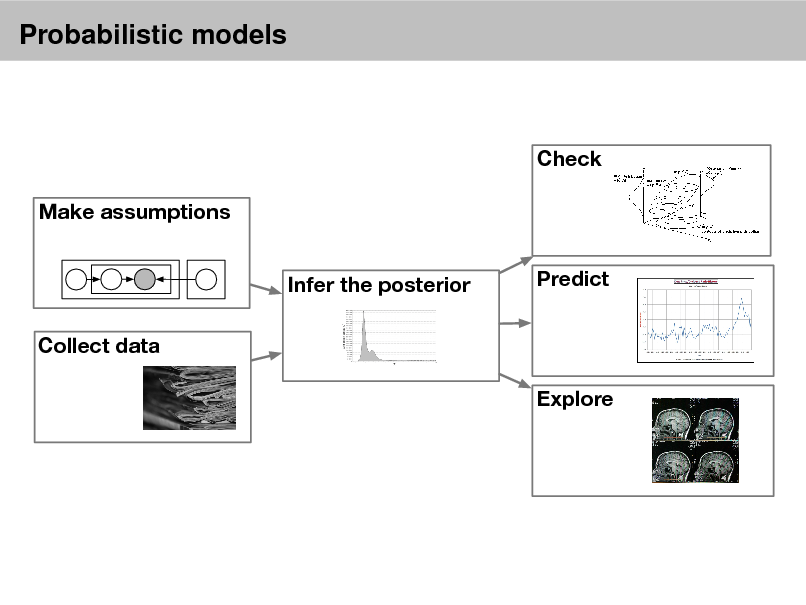

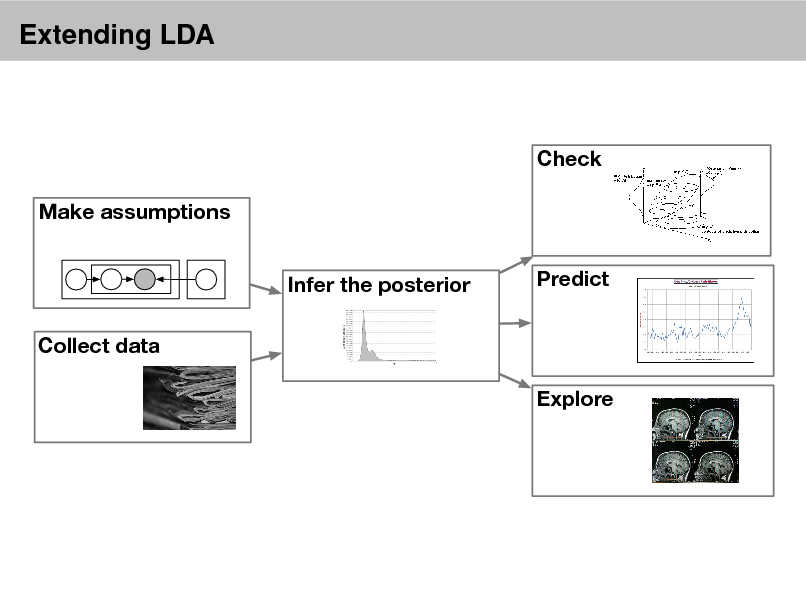

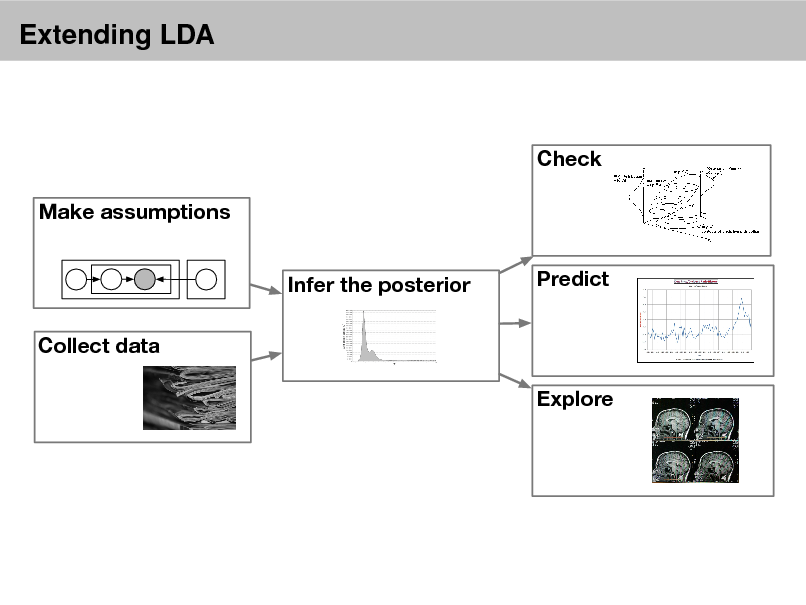

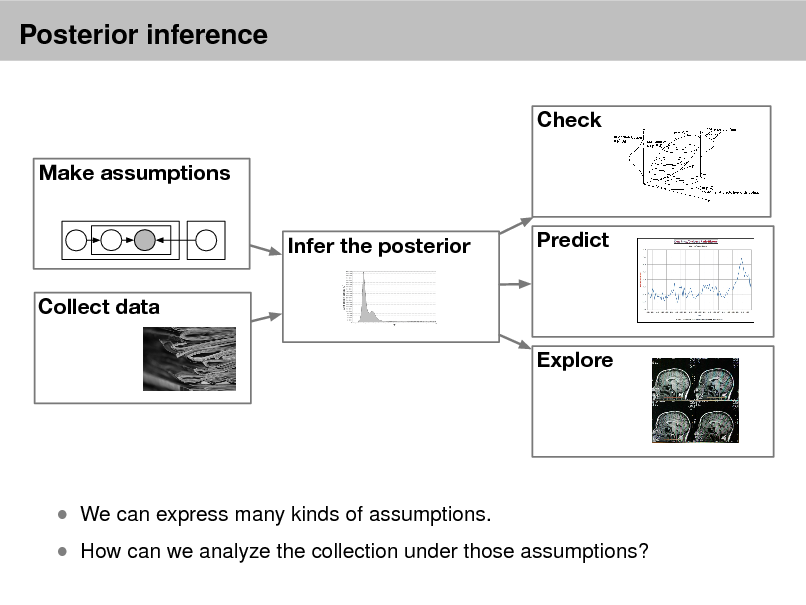

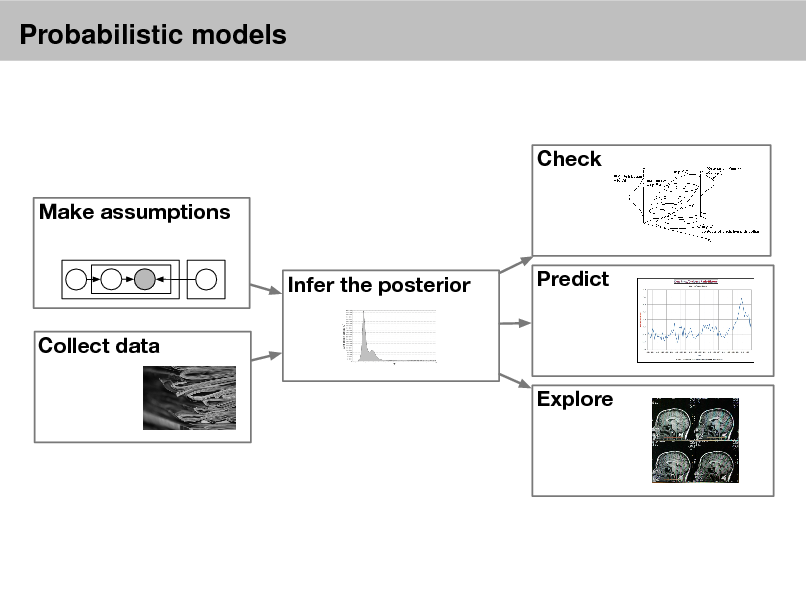

Probabilistic models

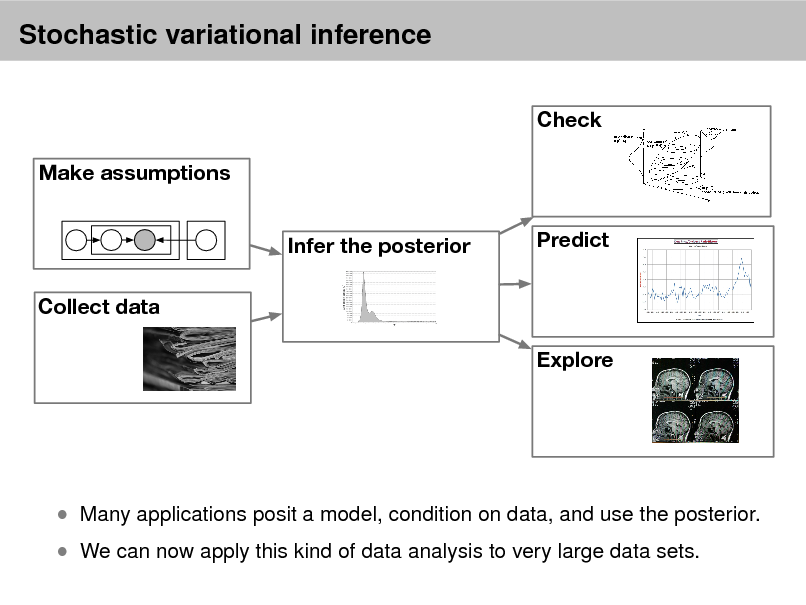

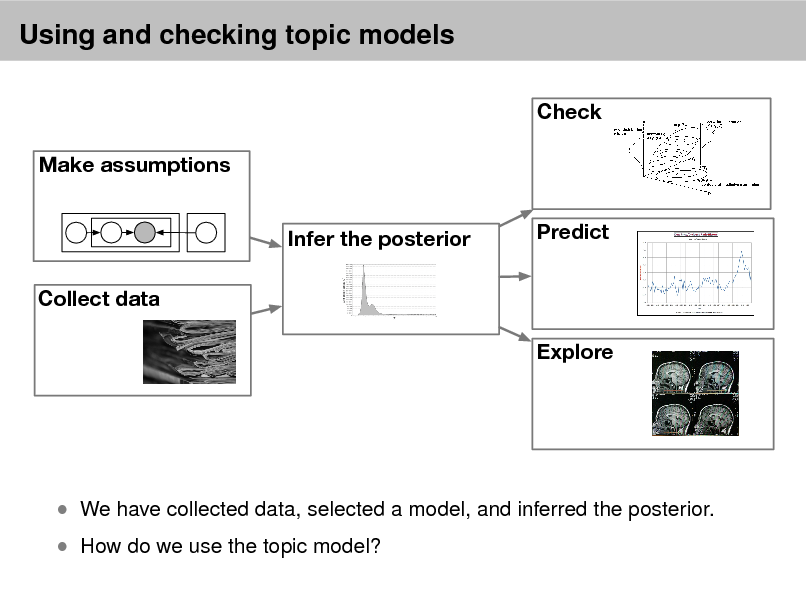

Check Make assumptions Infer the posterior Collect data Explore Predict

16

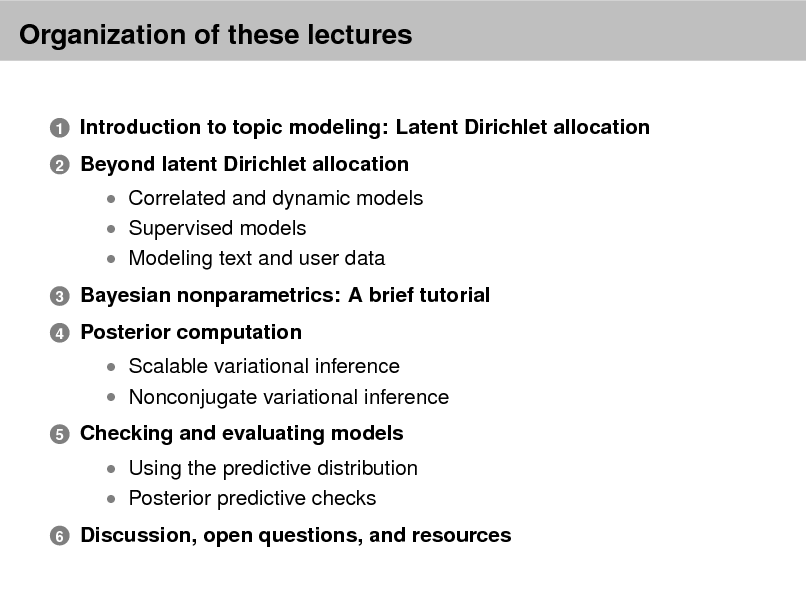

Organization of these lectures

Introduction to topic modeling: Latent Dirichlet allocation Beyond latent Dirichlet allocation

Correlated and dynamic models Supervised models Modeling text and user data

3 4

1 2

Bayesian nonparametrics: A brief tutorial Posterior computation

Scalable variational inference Nonconjugate variational inference

5

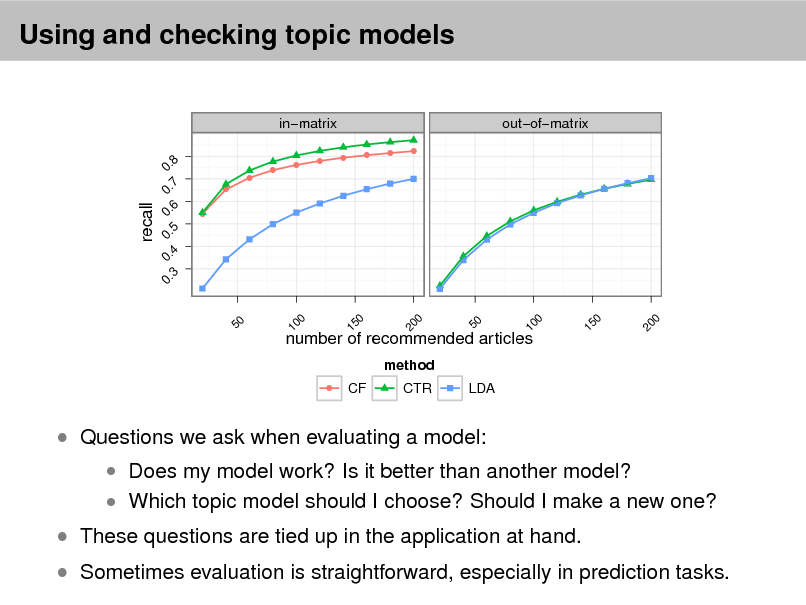

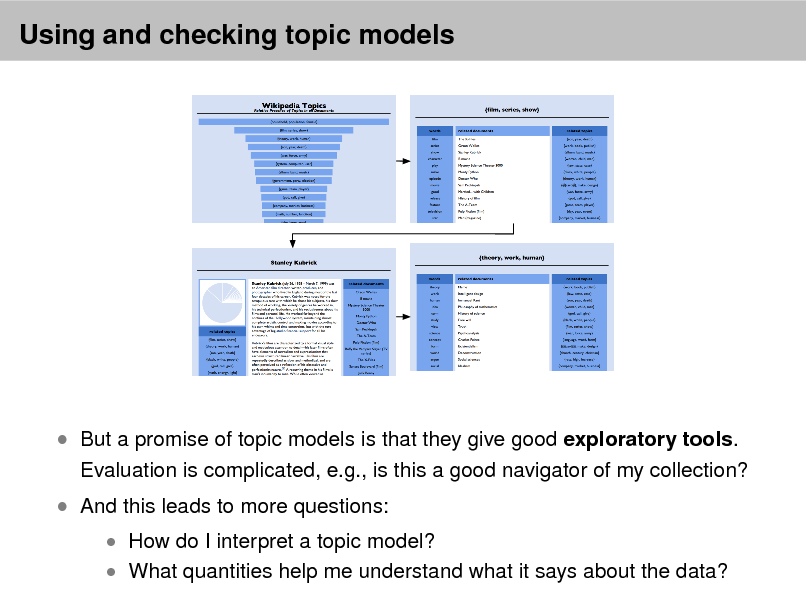

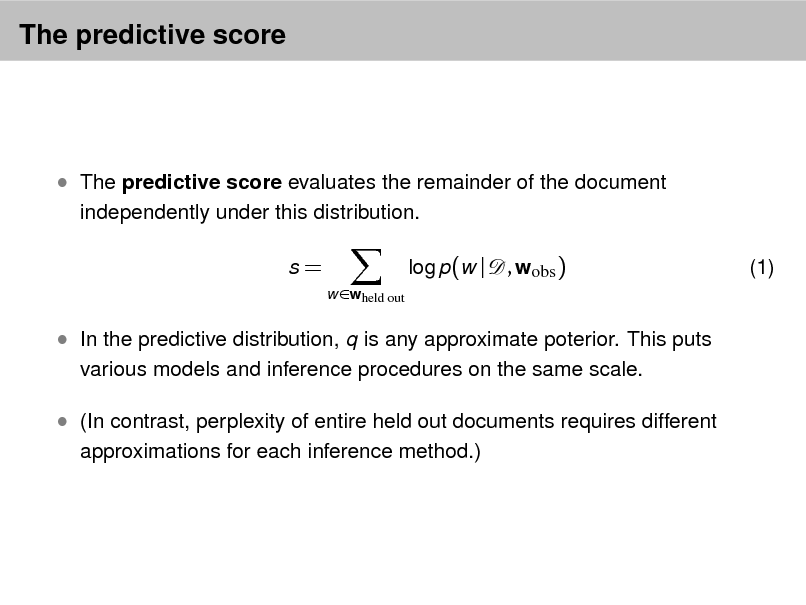

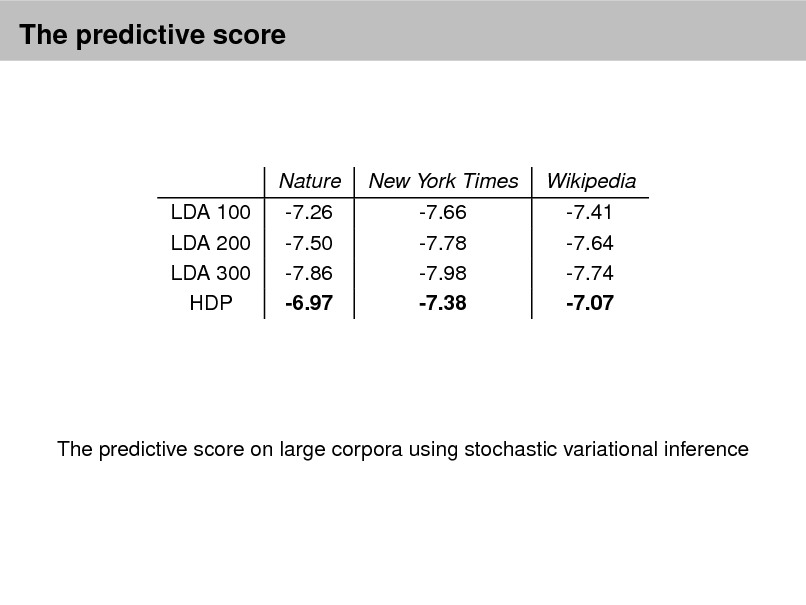

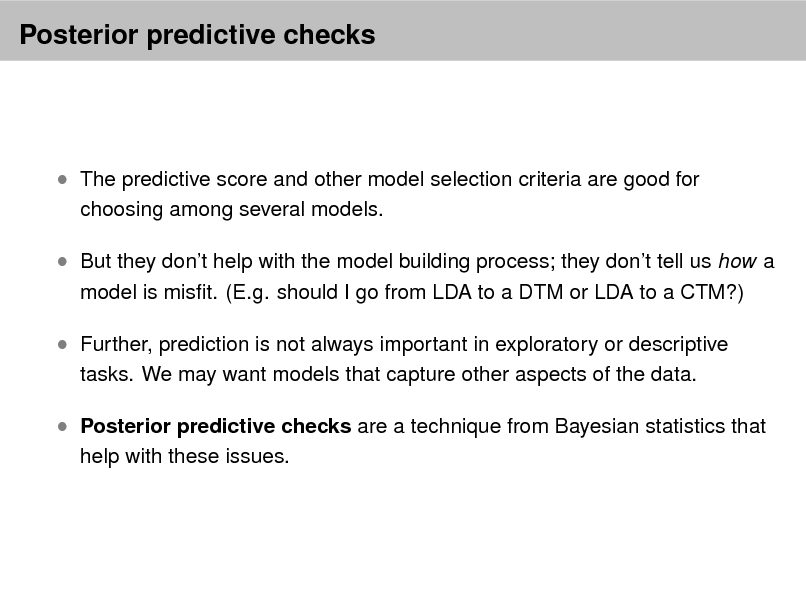

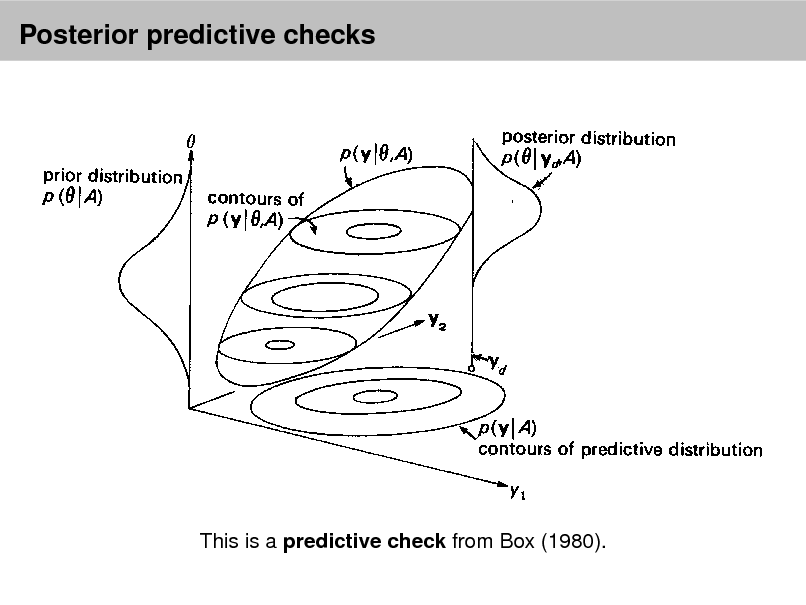

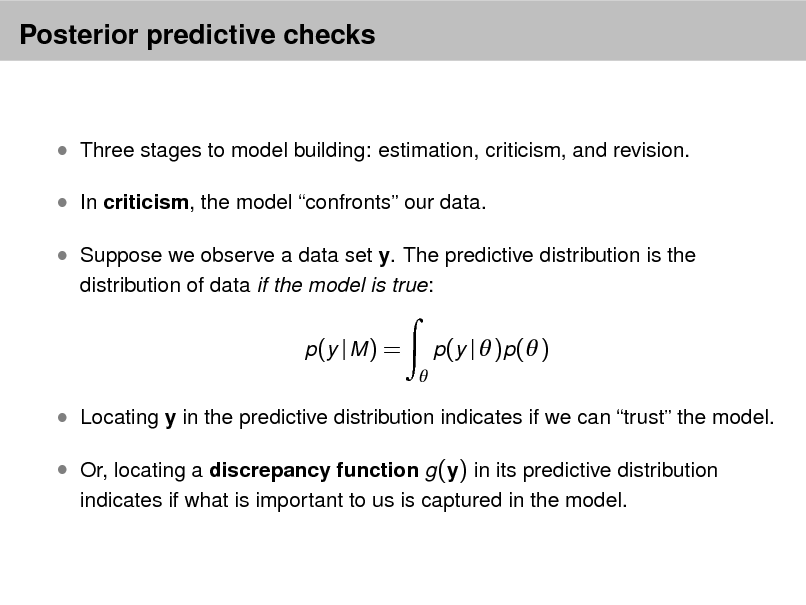

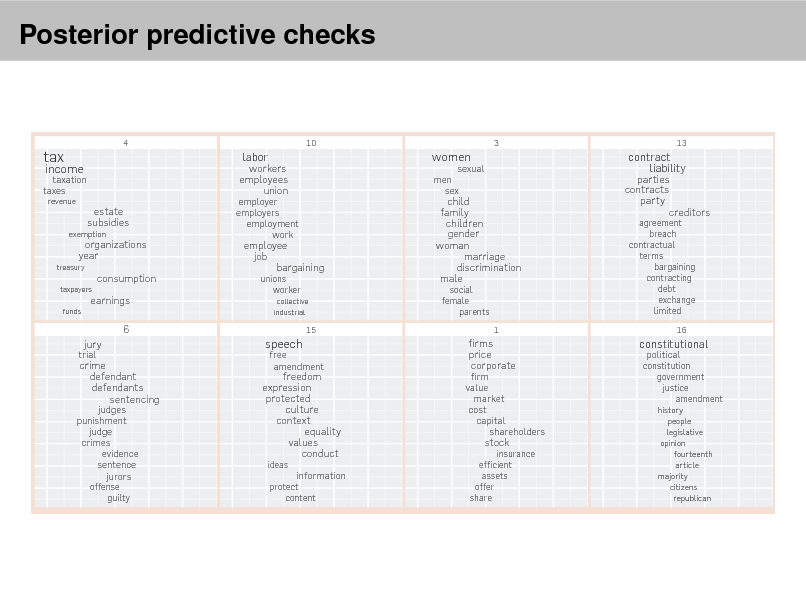

Checking and evaluating models

Using the predictive distribution Posterior predictive checks

6

Discussion, open questions, and resources

17

Introduction to Topic Modeling

18

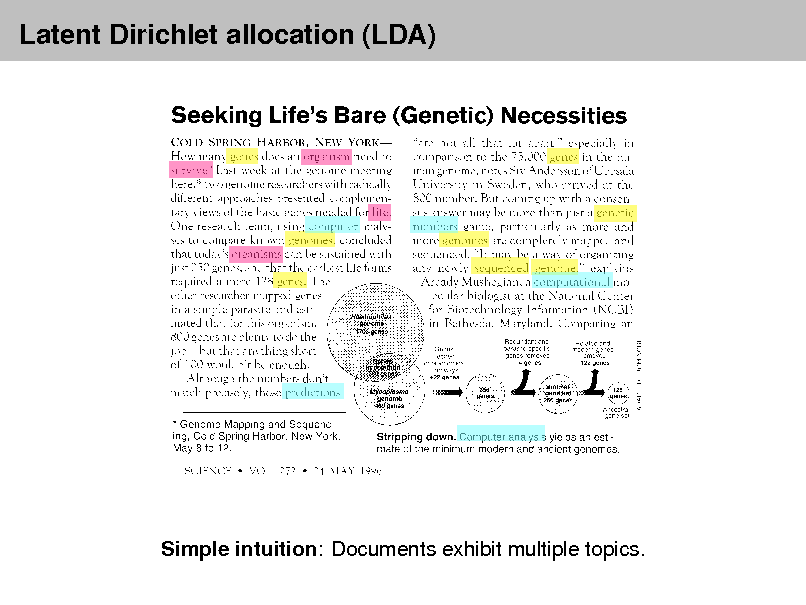

Latent Dirichlet allocation (LDA)

Simple intuition: Documents exhibit multiple topics.

19

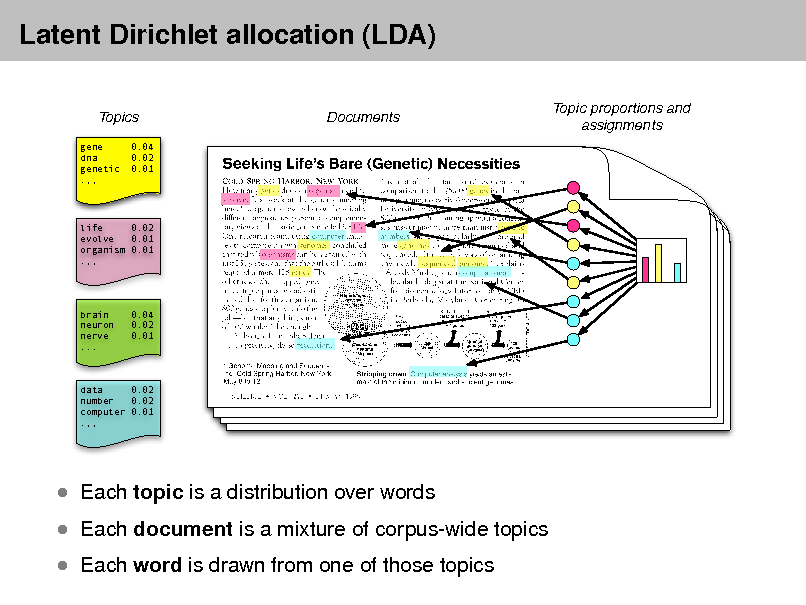

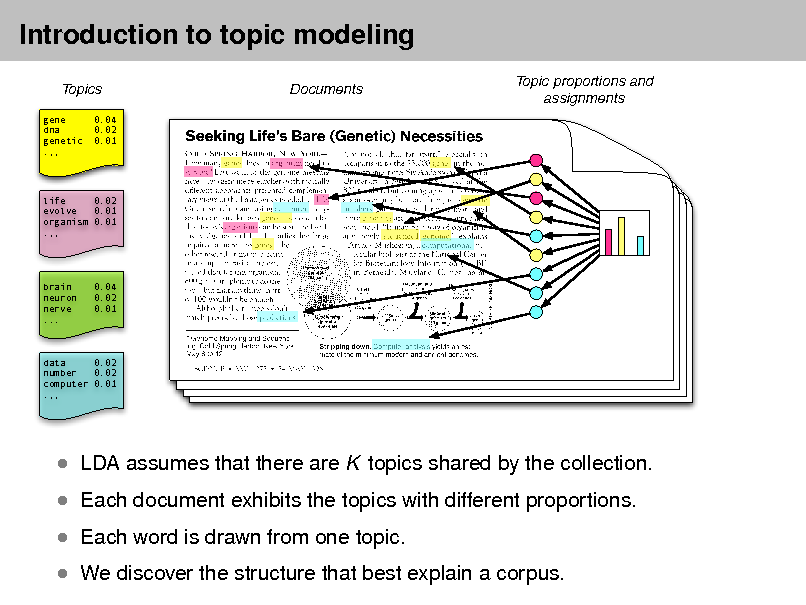

Latent Dirichlet allocation (LDA)

Topics

gene dna genetic .,, 0.04 0.02 0.01

Documents

Topic proportions and assignments

life 0.02 evolve 0.01 organism 0.01 .,,

brain neuron nerve ...

0.04 0.02 0.01

data 0.02 number 0.02 computer 0.01 .,,

Each topic is a distribution over words

Each document is a mixture of corpus-wide topics Each word is drawn from one of those topics

20

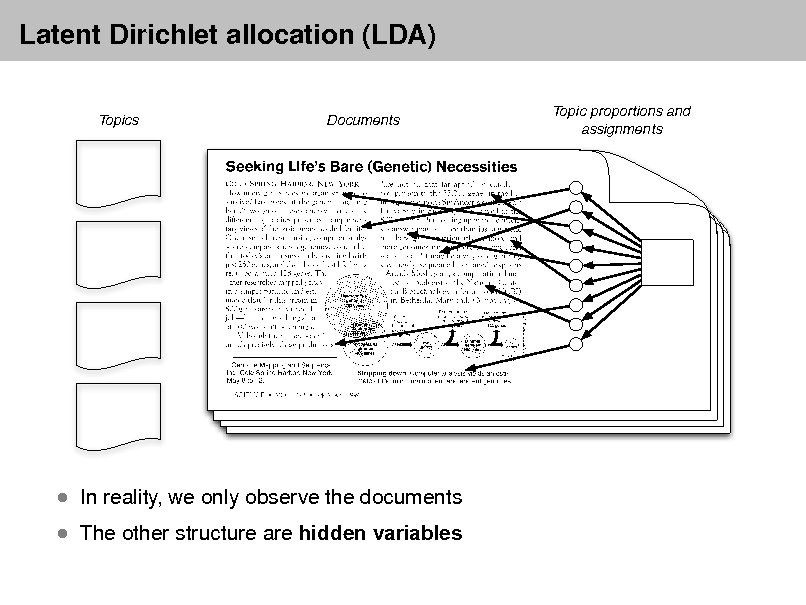

Latent Dirichlet allocation (LDA)

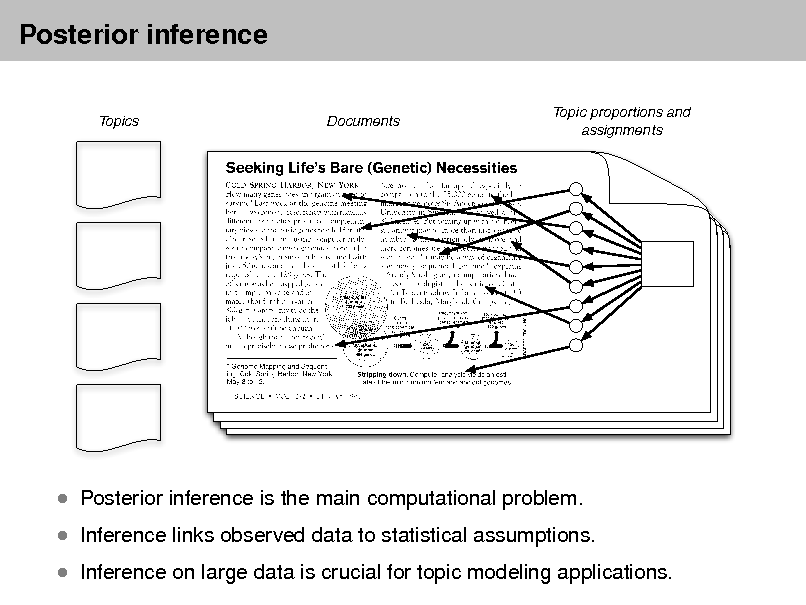

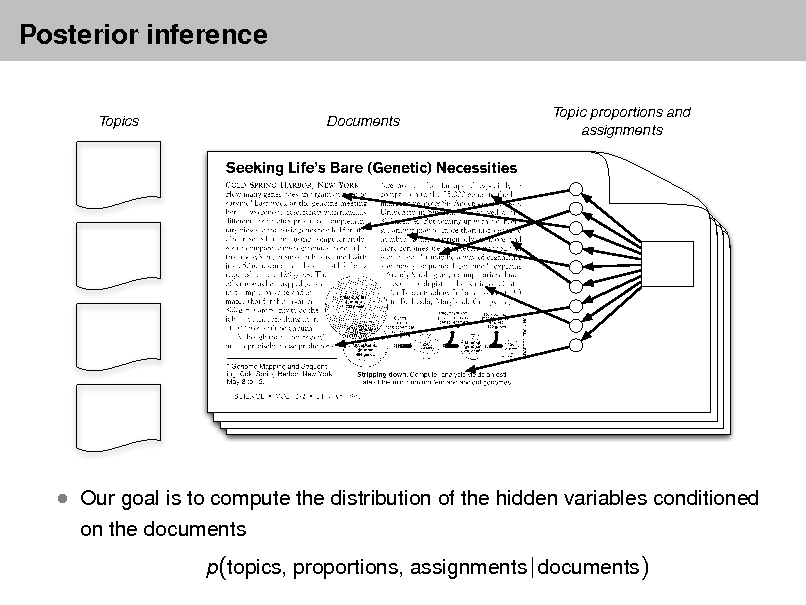

Topics Documents Topic proportions and assignments

The other structure are hidden variables

In reality, we only observe the documents

21

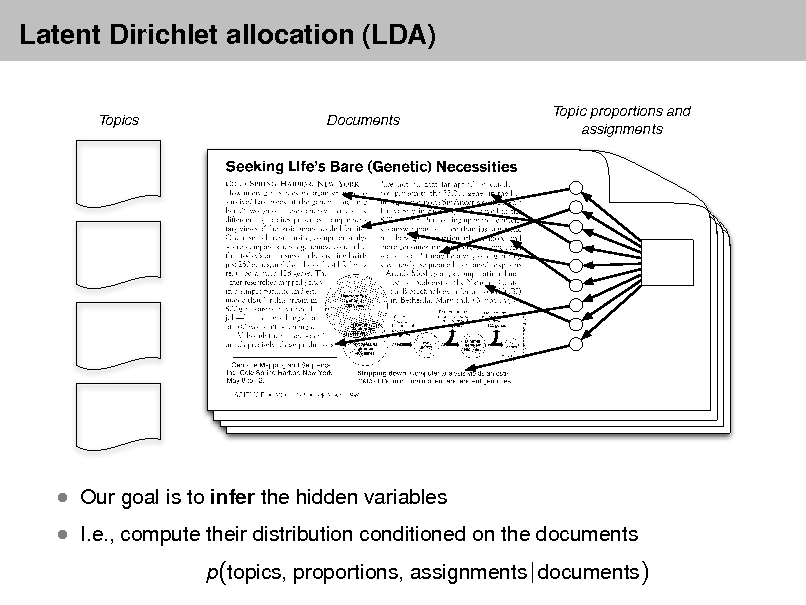

Latent Dirichlet allocation (LDA)

Topics Documents Topic proportions and assignments

I.e., compute their distribution conditioned on the documents

p(topics, proportions, assignments | documents)

Our goal is to infer the hidden variables

22

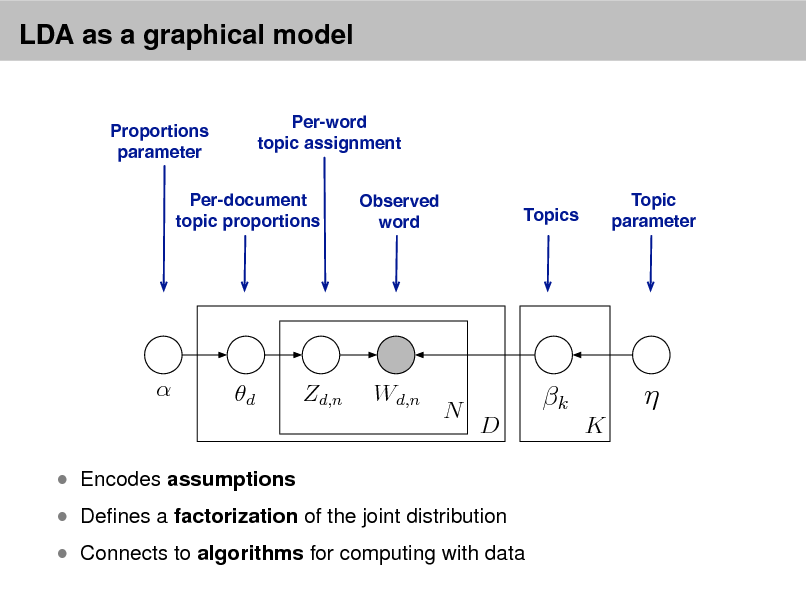

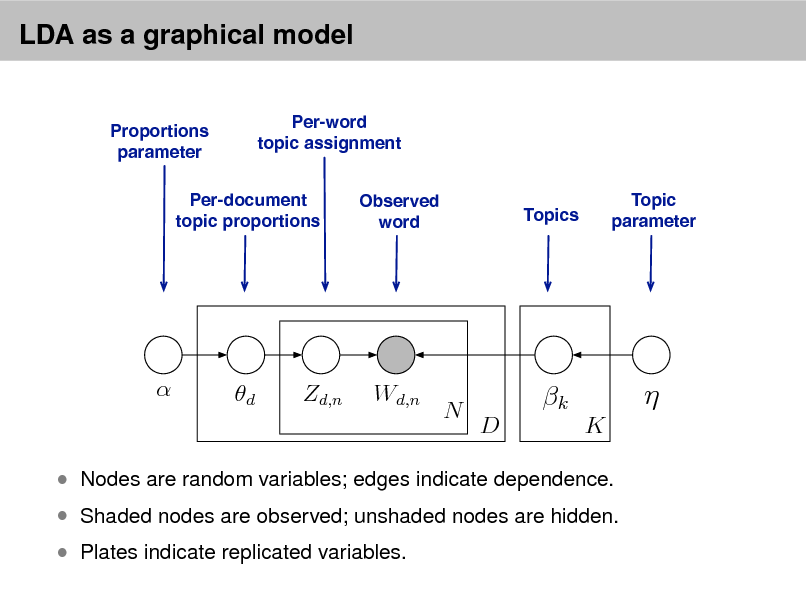

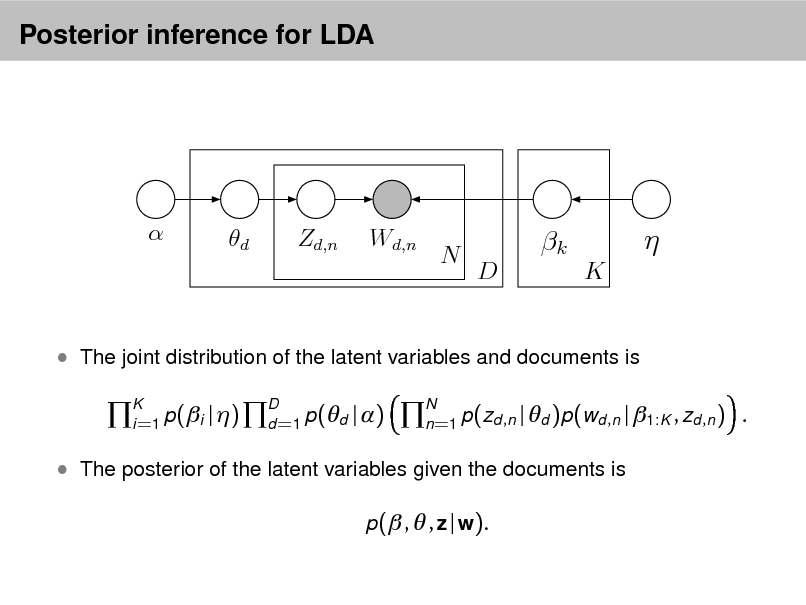

LDA as a graphical model

Per-word topic assignment Observed word Topic parameter

Proportions parameter

Per-document topic proportions

Topics

d

Zd,n

Wd,n

N

k

D K

Denes a factorization of the joint distribution

Encodes assumptions

Connects to algorithms for computing with data

23

LDA as a graphical model

Per-word topic assignment Observed word Topic parameter

Proportions parameter

Per-document topic proportions

Topics

d

Zd,n

Wd,n

N

k

D K

Shaded nodes are observed; unshaded nodes are hidden. Plates indicate replicated variables.

Nodes are random variables; edges indicate dependence.

24

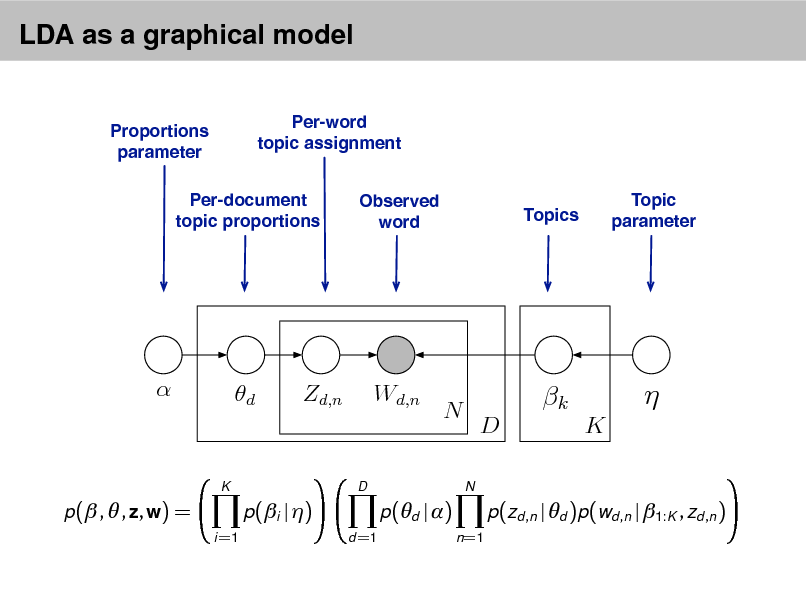

LDA as a graphical model

Per-word topic assignment Observed word Topic parameter

Proportions parameter

Per-document topic proportions

Topics

d

Zd,n

Wd,n

N

N

k

D K

K

D

p( , , z, w) =

i =1

p(i | )

d =1

p(d | )

n =1

p(zd ,n | d )p(wd ,n | 1:K , zd ,n )

25

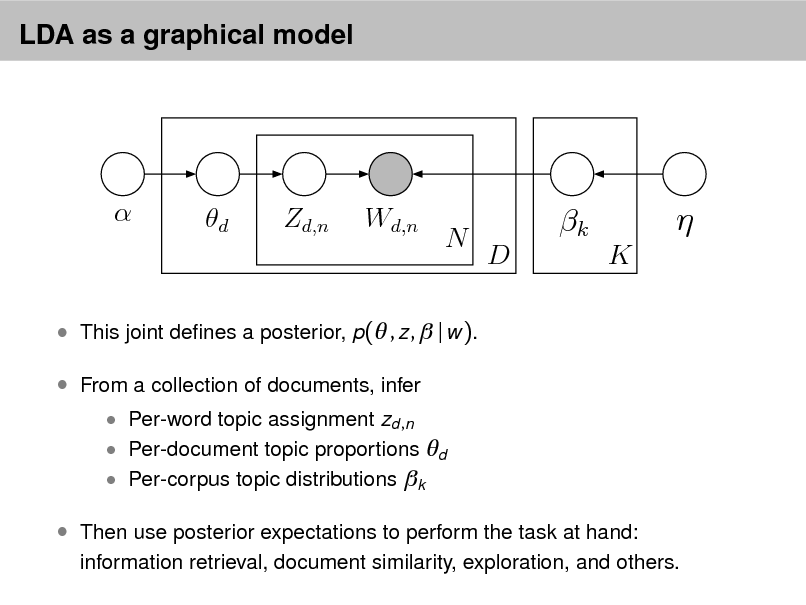

LDA as a graphical model

d

Zd,n

Wd,n

N

k

D K

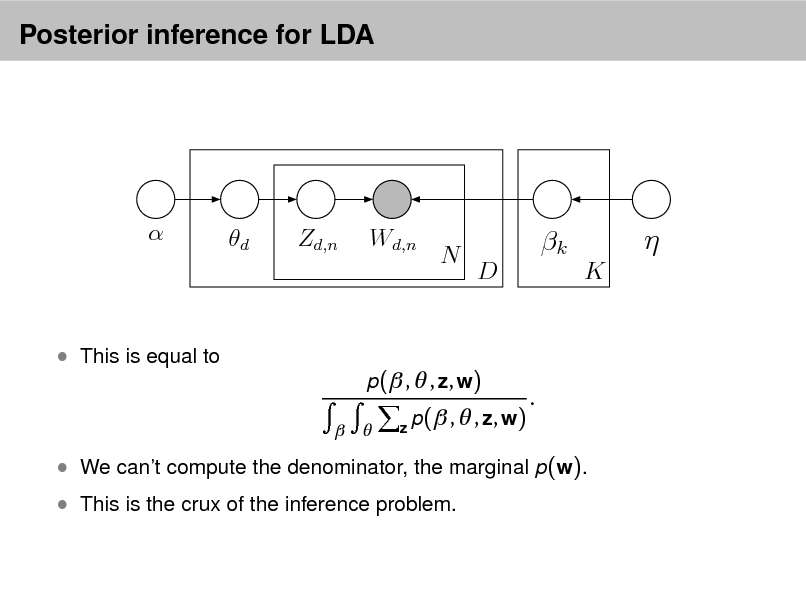

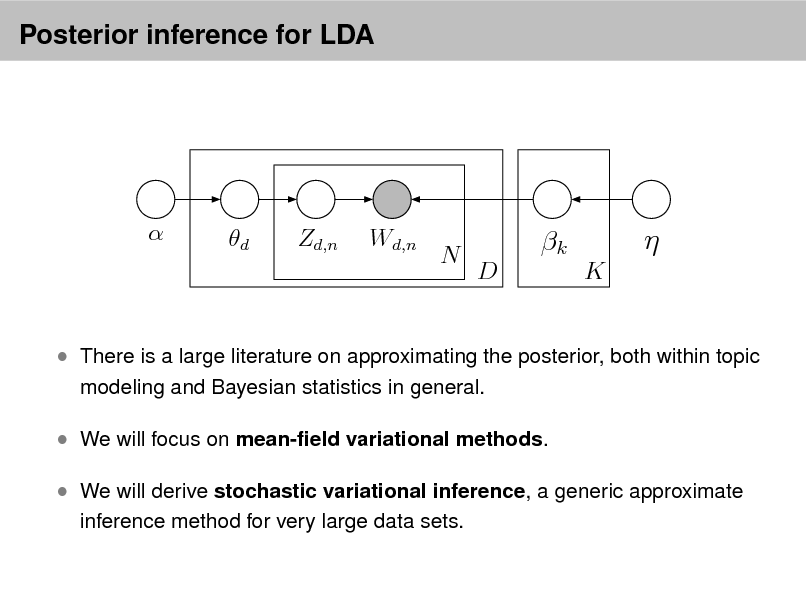

This joint denes a posterior, p( , z , | w ). From a collection of documents, infer

Per-word topic assignment zd ,n Per-document topic proportions d Per-corpus topic distributions k

Then use posterior expectations to perform the task at hand:

information retrieval, document similarity, exploration, and others.

26

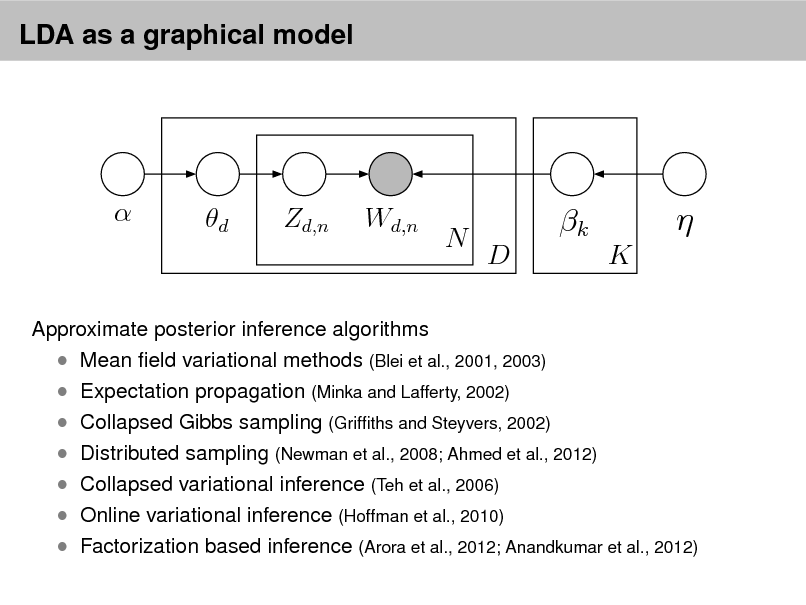

LDA as a graphical model

d

Zd,n

Wd,n

N

k

D K

Approximate posterior inference algorithms Mean eld variational methods (Blei et al., 2001, 2003) Expectation propagation (Minka and Lafferty, 2002) Collapsed Gibbs sampling (Grifths and Steyvers, 2002) Distributed sampling (Newman et al., 2008; Ahmed et al., 2012) Collapsed variational inference (Teh et al., 2006) Online variational inference (Hoffman et al., 2010) Factorization based inference (Arora et al., 2012; Anandkumar et al., 2012)

27

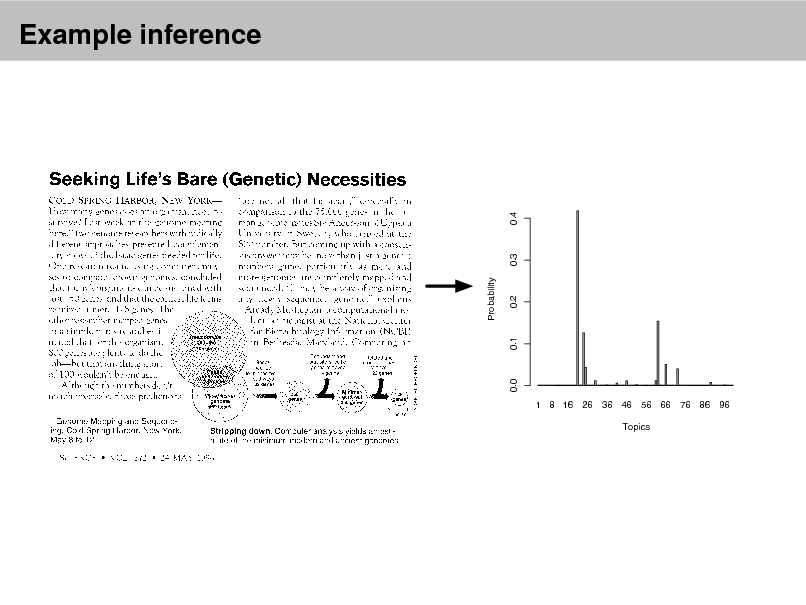

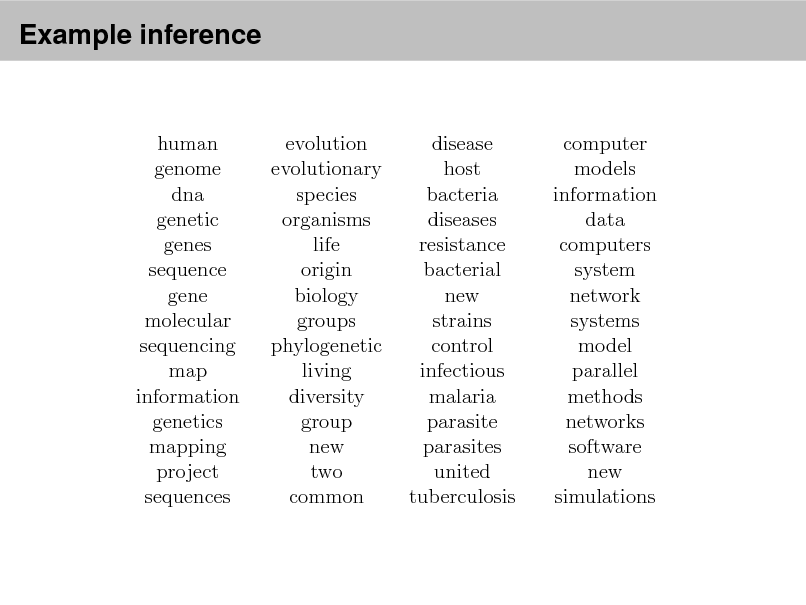

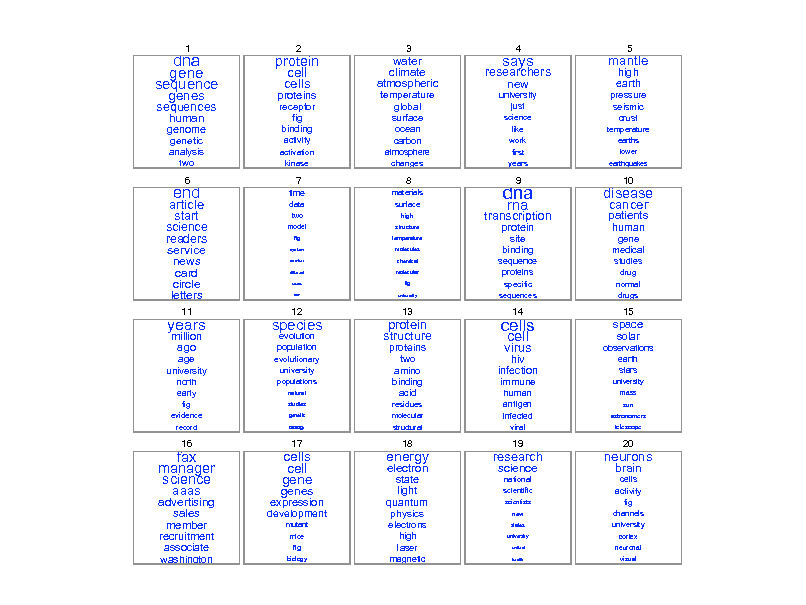

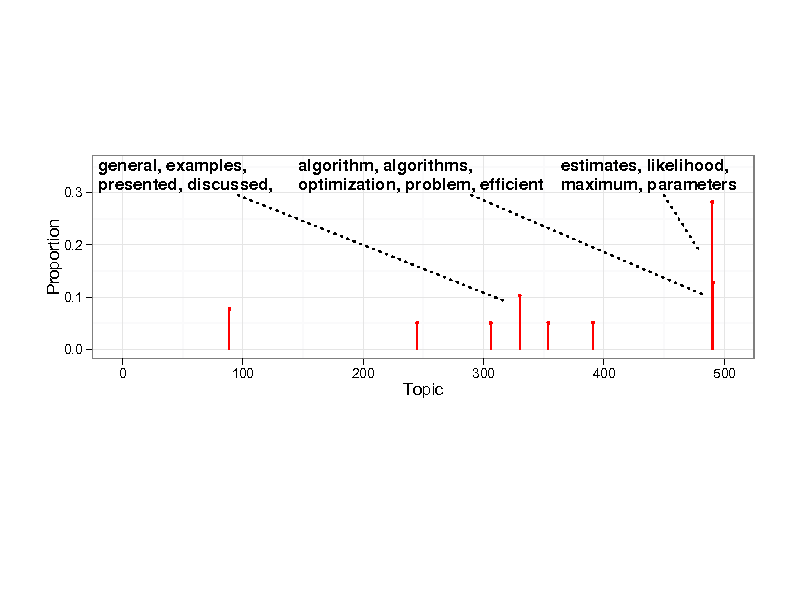

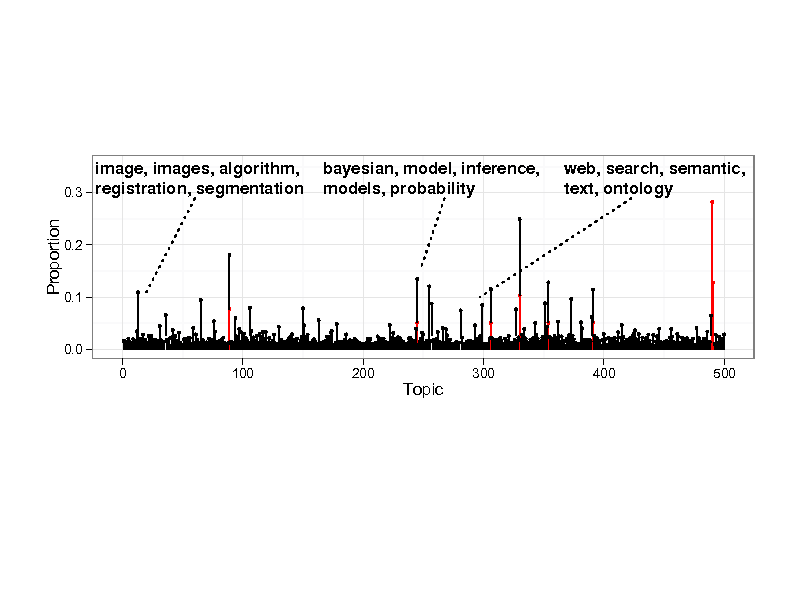

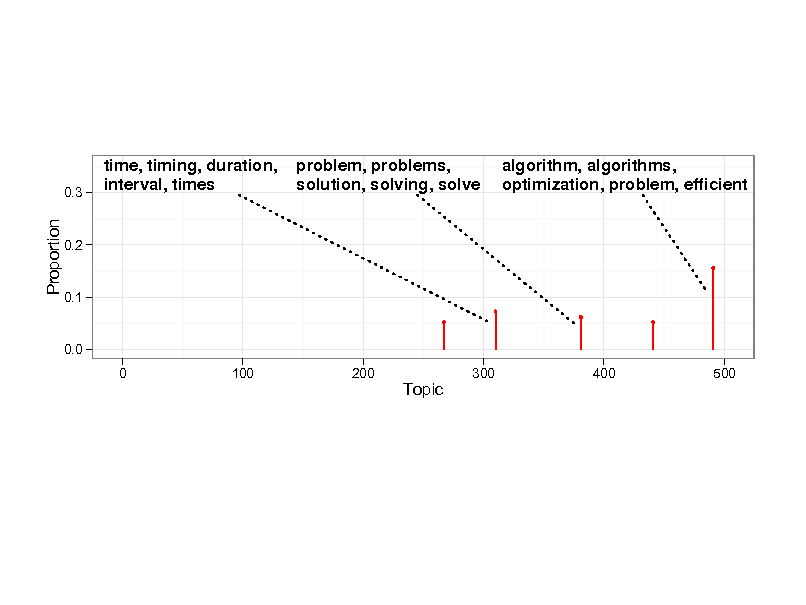

Example inference

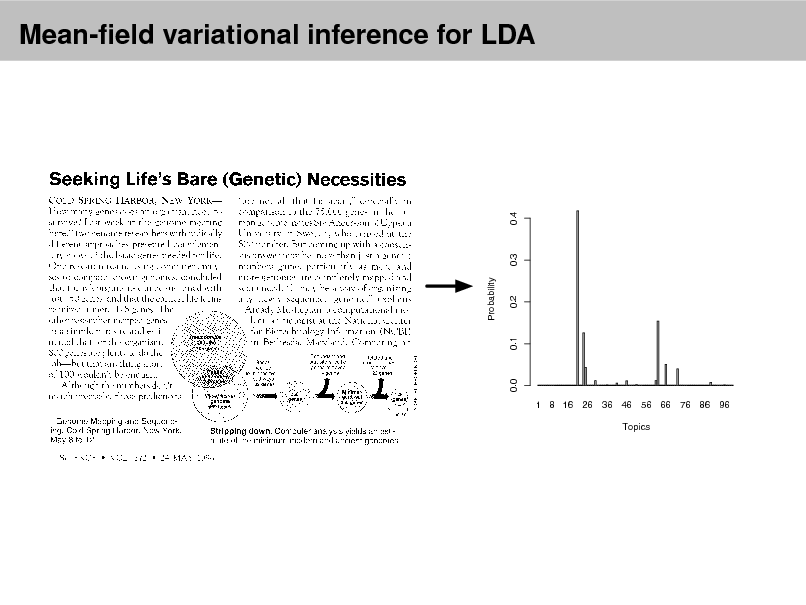

Data: The OCRed collection of Science from 19902000

17K documents 11M words

20K unique terms (stop words and rare words removed)

Model: 100-topic LDA model using variational inference.

28

Example inference

Probability

0.0

1 8 16 26 36 46 56 66 76 86 96 Topics

0.1

0.2

0.3

0.4

29

Example inference

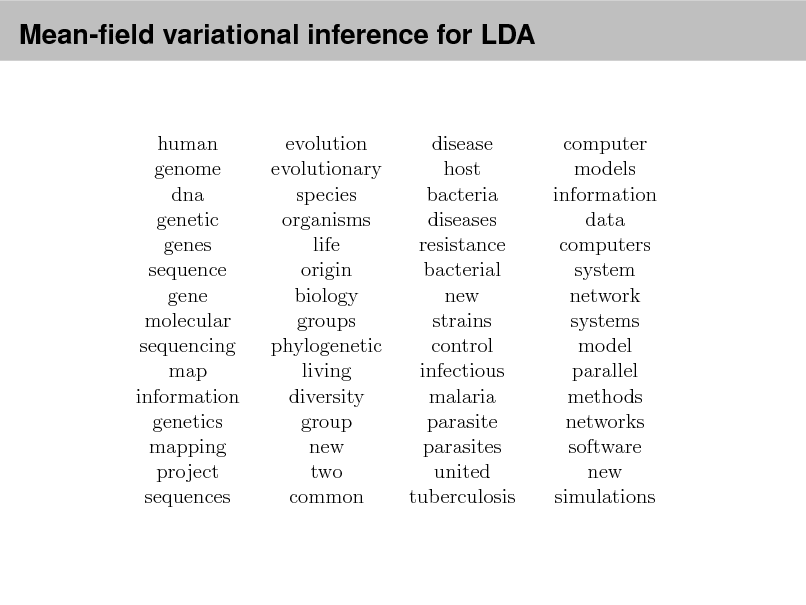

Genetics human genome dna genetic genes sequence gene molecular sequencing map information genetics mapping project sequences Evolution Disease evolution disease evolutionary host species bacteria organisms diseases life resistance origin bacterial biology new groups strains phylogenetic control living infectious diversity malaria group parasite new parasites two united common tuberculosis Computers computer models information data computers system network systems model parallel methods networks software new simulations

30

dna gene sequence

sequences

genome

genetic analysis

two

1

protein cell

proteins

receptor fig binding

activity

activation kinase

2

3

genes

human

cells

water climate atmospheric

temperature global surface

ocean carbon

atmosphere changes

researchers new

university just

science like work first years

says

4

mantle

high earth

crust

temperature earths

lower

earthquakes

5

pressure seismic

science readers service news

card circle letters

11

article start

end

6

7

time

data

two

model

fig

system

number

different

results

rate

8

materials surface

high

structure

temperature

molecules

chemical

molecular

fig

university

dna

transcription

protein

site binding

specific

sequences

9

rna

disease

patients human

gene medical studies

drug normal drugs

10

cancer

sequence proteins

years

age university

north early fig

evidence

record

million ago

species

evolution population

evolutionary university

populations

natural studies

genetic

biology

12

protein structure

proteins

two amino binding

acid

residues

molecular

structural

13

cells

hiv infection

immune

human antigen infected

viral

14

15

virus

cell

space

solar

stars

university

mass

sun

astronomers telescope

observations earth

fax manager science

advertising sales member recruitment

associate washington

16

aaas

development

mutant

mice

fig

biology

expression

genes

cells cell gene

17

energy

state light quantum

physics

electrons high laser

magnetic

18

electron

research

science

national

scientific

scientists

new

states

university

united

health

19

neurons

brain

cells activity fig

20

channels university

cortex

neuronal

visual

31

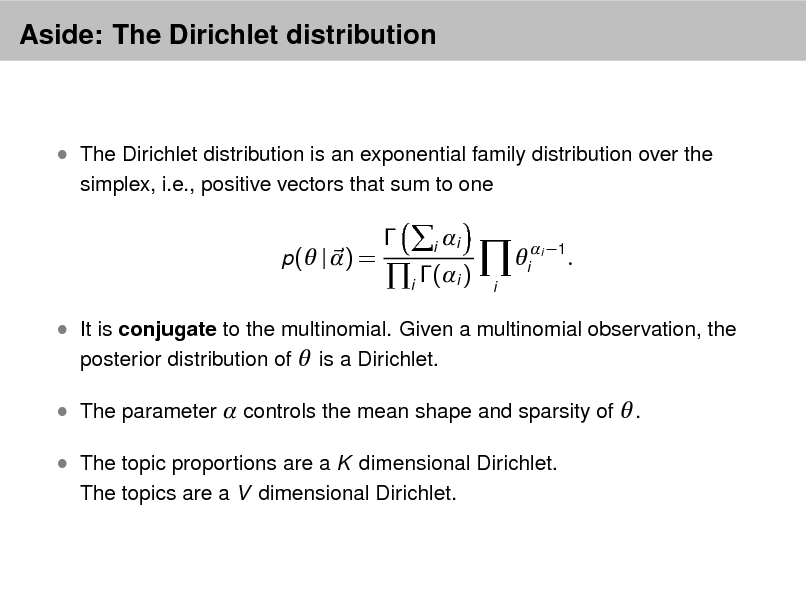

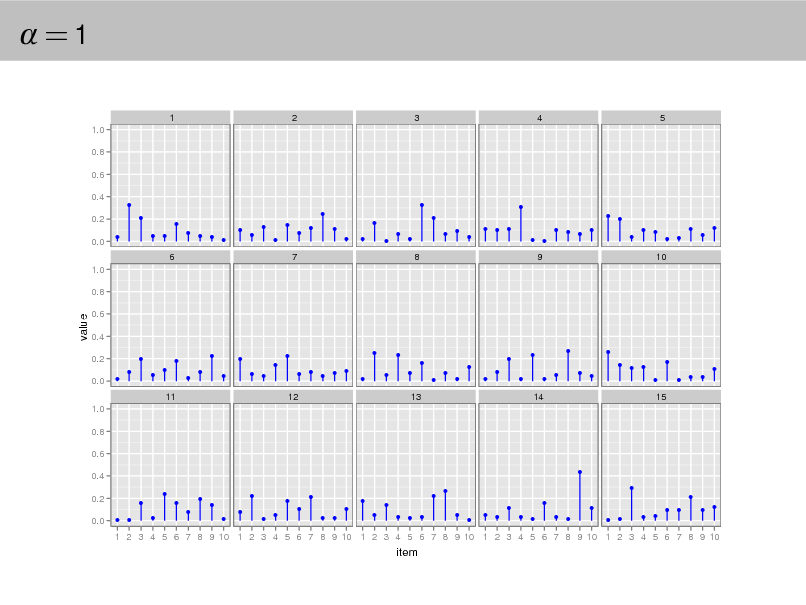

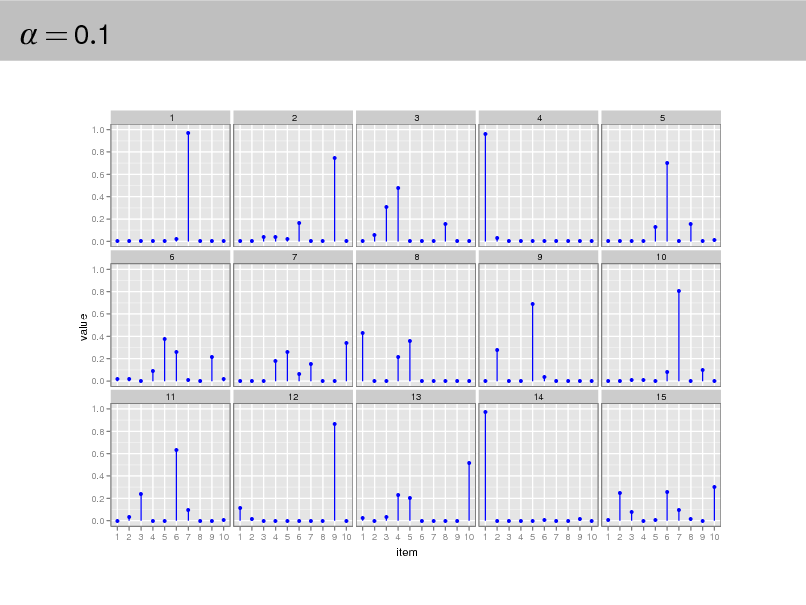

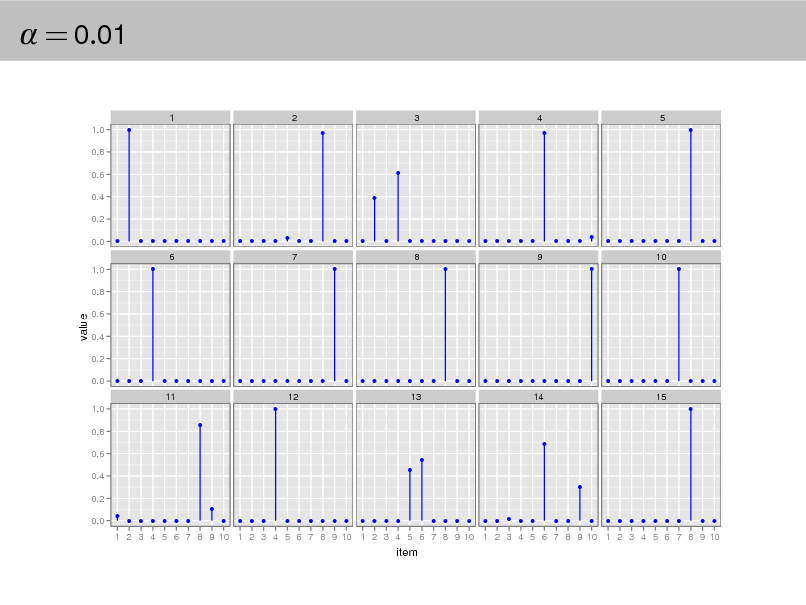

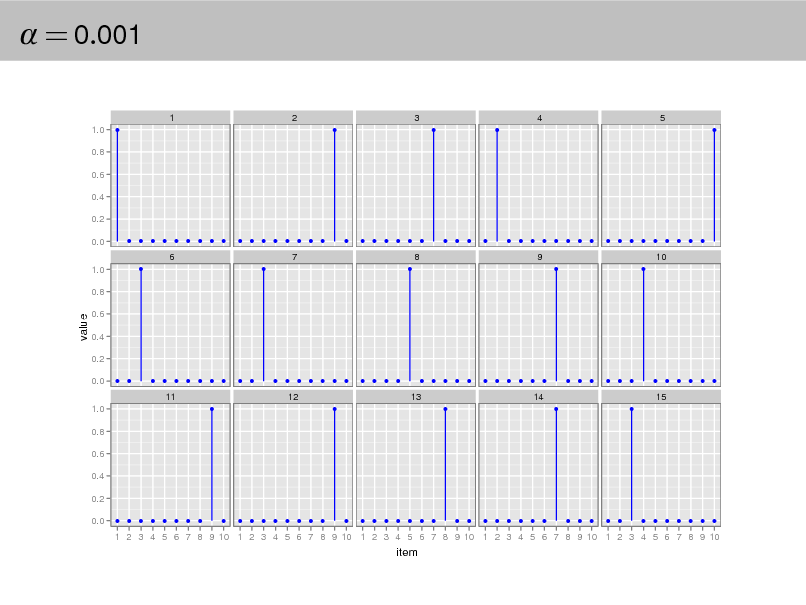

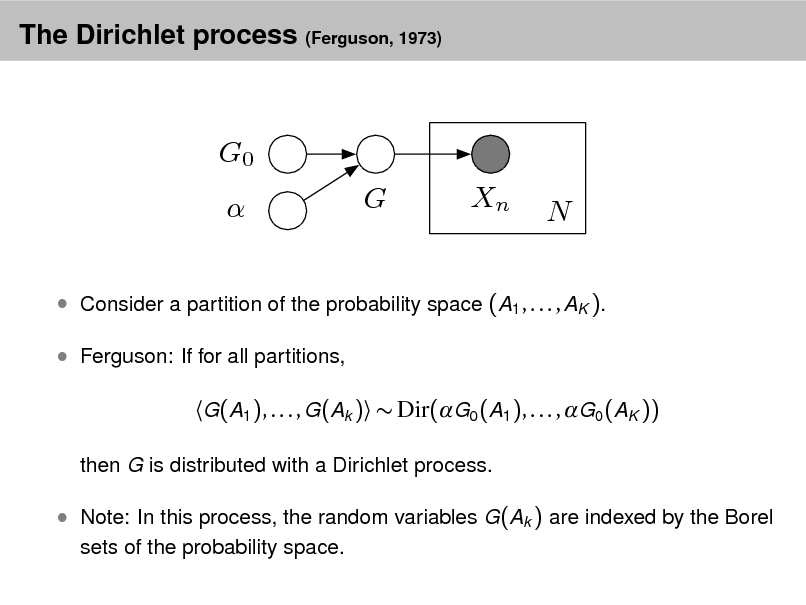

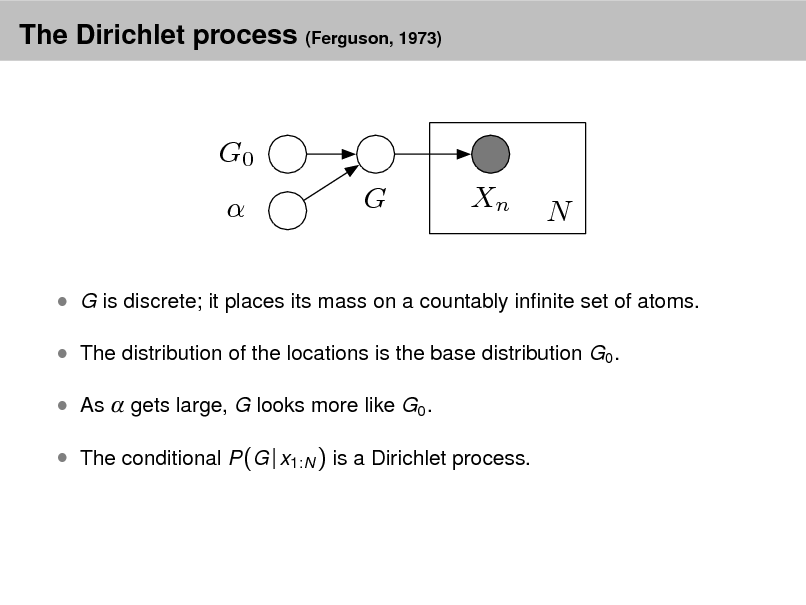

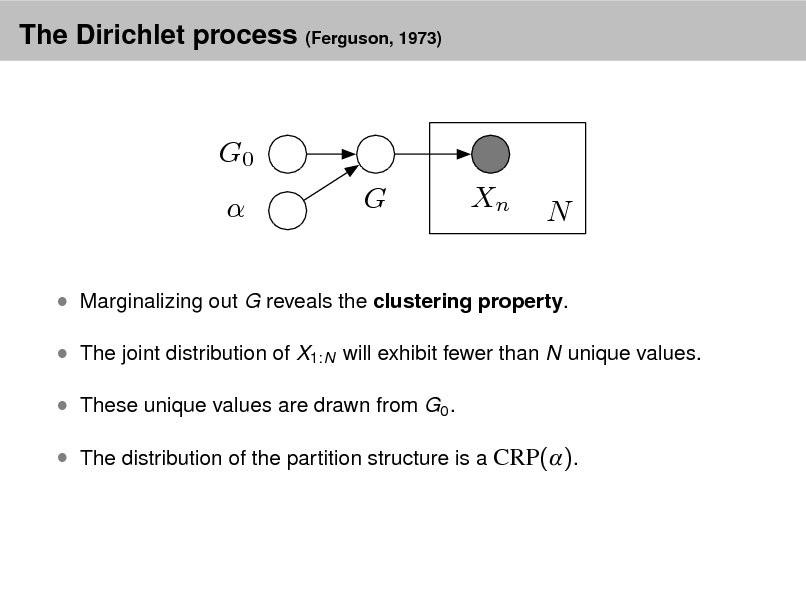

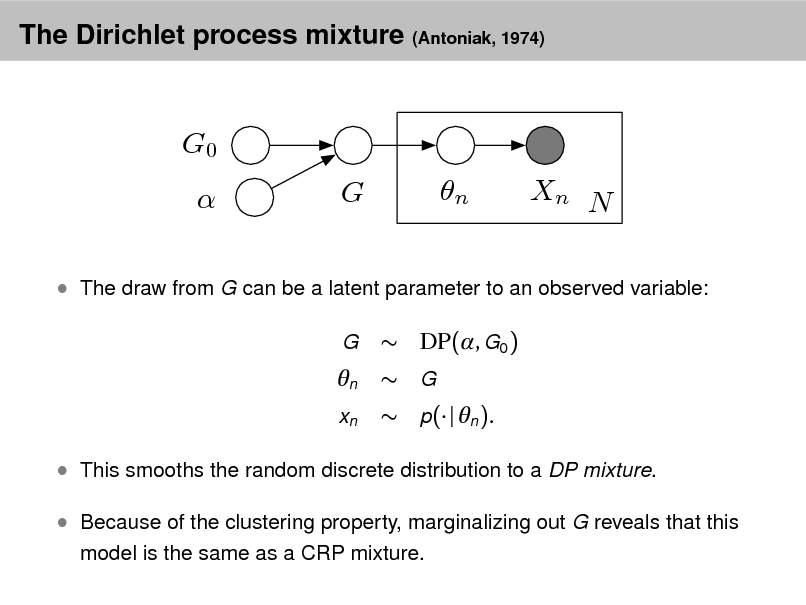

Aside: The Dirichlet distribution

The Dirichlet distribution is an exponential family distribution over the

simplex, i.e., positive vectors that sum to one p( | ) =

i

i

i

i (i )

i

i 1

.

It is conjugate to the multinomial. Given a multinomial observation, the posterior distribution of is a Dirichlet. The parameter controls the mean shape and sparsity of . The topic proportions are a K dimensional Dirichlet.

The topics are a V dimensional Dirichlet.

32

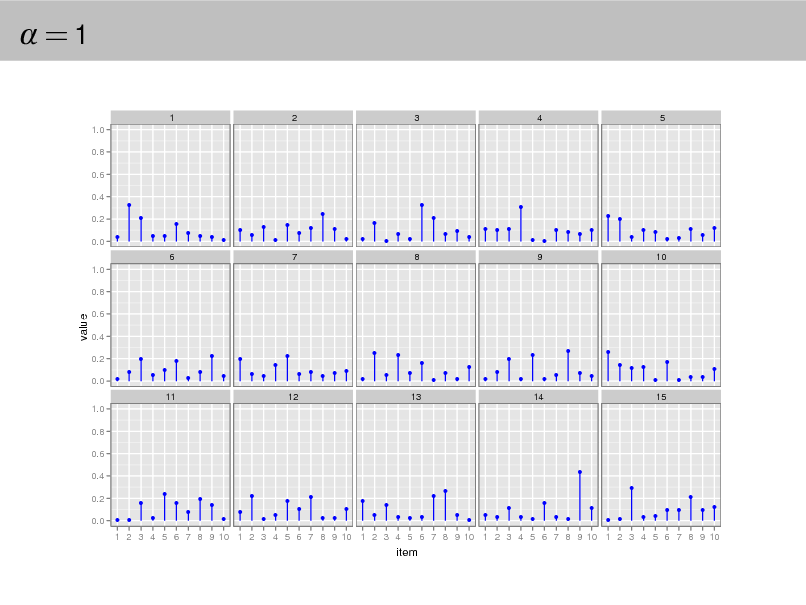

=1

1 1.0 0.8 0.6 0.4

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

2

3

4

5

0.2 0.0 1.0 0.8

6

7

8

9

10

value

0.6 0.4 0.2

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

0.0 1.0 0.8 0.6 0.4 0.2 0.0

q

11

12

13

14

15

q q q q q q q q q q q q q q q q q

q q q q q q q

q q q q

q q q q q

q q q q

q q q q q q q

q q q q q q

1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10

item

33

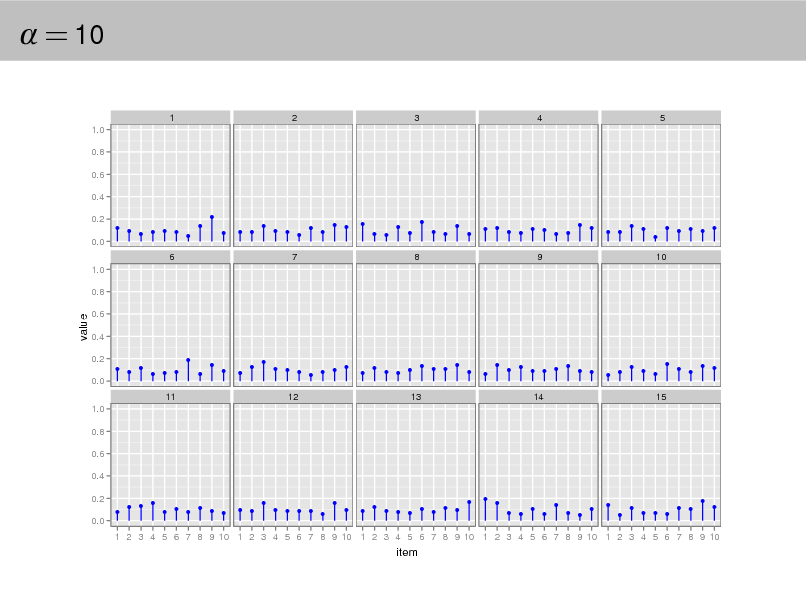

= 10

1 1.0 0.8 0.6 0.4 0.2

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

2

3

4

5

q

q

q

q

q

q

q q

q

q

q

q

q

0.0 6 1.0 0.8

7

8

9

10

value

0.6 0.4 0.2

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

q

q

q

q

q

q

q

q

0.0 11 1.0 0.8 0.6 0.4 0.2

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

12

13

14

15

q

0.0

1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10

item

34

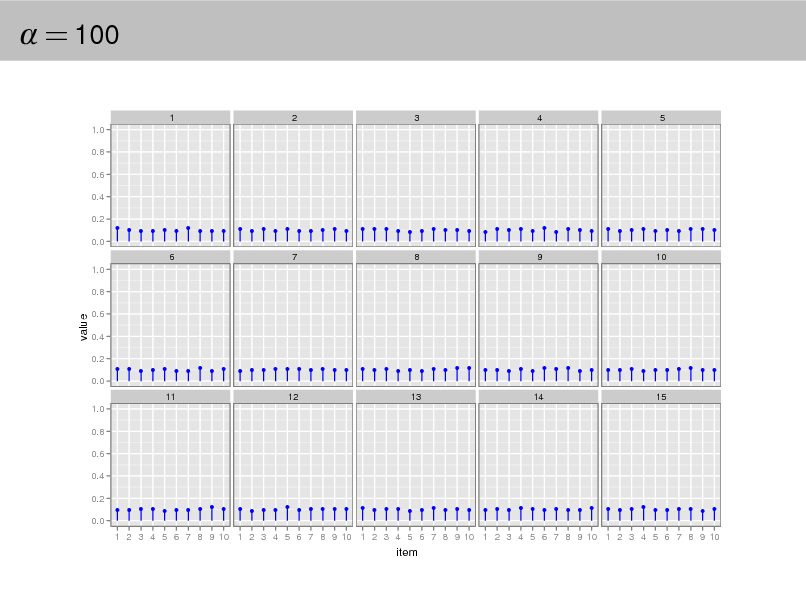

= 100

1 1.0 0.8 0.6 0.4 0.2

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

2

3

4

5

0.0 6 1.0 0.8 7 8 9 10

value

0.6 0.4 0.2

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

0.0 11 1.0 0.8 0.6 0.4 0.2

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

12

13

14

15

0.0 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10

item

35

=1

1 1.0 0.8 0.6 0.4

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

2

3

4

5

0.2 0.0 1.0 0.8

6

7

8

9

10

value

0.6 0.4 0.2

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

0.0 1.0 0.8 0.6 0.4 0.2 0.0

q

11

12

13

14

15

q q q q q q q q q q q q q q q q q

q q q q q q q

q q q q

q q q q q

q q q q

q q q q q q q

q q q q q q

1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10

item

36

= 0.1

1 1.0 0.8 0.6

q q

2

3

q

4

5

q

q

0.4

q

0.2 0.0 1.0 0.8

q q q q q q q q q q q q q q

q q q q q q q q q

q q q q q q q q q q q q q q q q

q q

q q q

6

7

8

9

10

q q

value

0.6 0.4 0.2

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

0.0 1.0

q

q

q

q

q

11

12

q

13

q

14

15

0.8 0.6 0.4 0.2 0.0

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10

item

37

= 0.01

1 1.0 0.8 0.6 0.4 0.2 0.0 1.0 0.8

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

2

q

3

4

q

5

q

6

q

7

q

8

q

9

q

10

q

value

0.6 0.4 0.2 0.0 1.0

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

11

q

12

13

14

15

q

0.8

q

0.6

q

q

0.4

q

0.2

q

0.0

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10

item

38

= 0.001

1 1.0 0.8 0.6 0.4 0.2 0.0 1.0 0.8

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

2

q

3

q q

4

5

q

6

q q

7

q

8

9

q q

10

value

0.6 0.4 0.2 0.0 1.0 0.8 0.6 0.4 0.2 0.0

q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q

11

q

12

q

13

q

14

q q

15

1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10

item

39

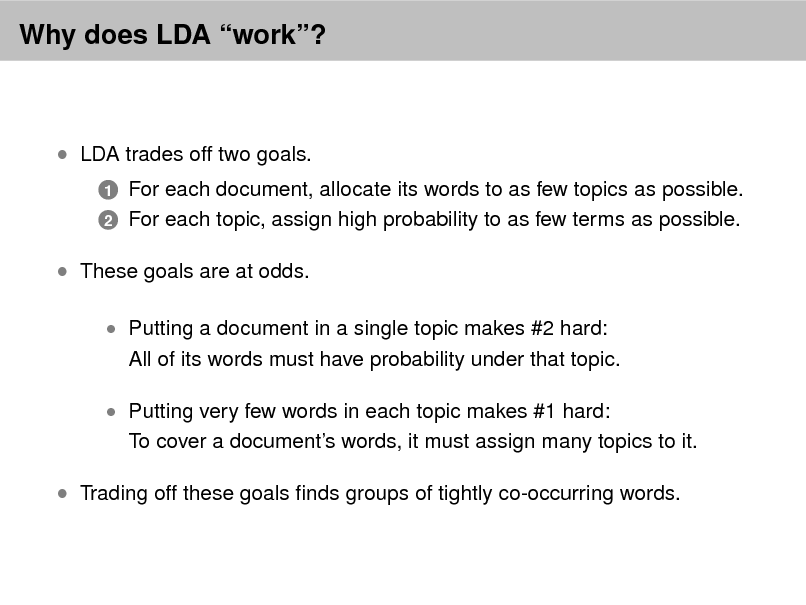

Why does LDA work?

LDA trades off two goals.

1 2

For each document, allocate its words to as few topics as possible. For each topic, assign high probability to as few terms as possible.

These goals are at odds.

Putting a document in a single topic makes #2 hard:

All of its words must have probability under that topic.

Putting very few words in each topic makes #1 hard:

To cover a documents words, it must assign many topics to it.

Trading off these goals nds groups of tightly co-occurring words.

40

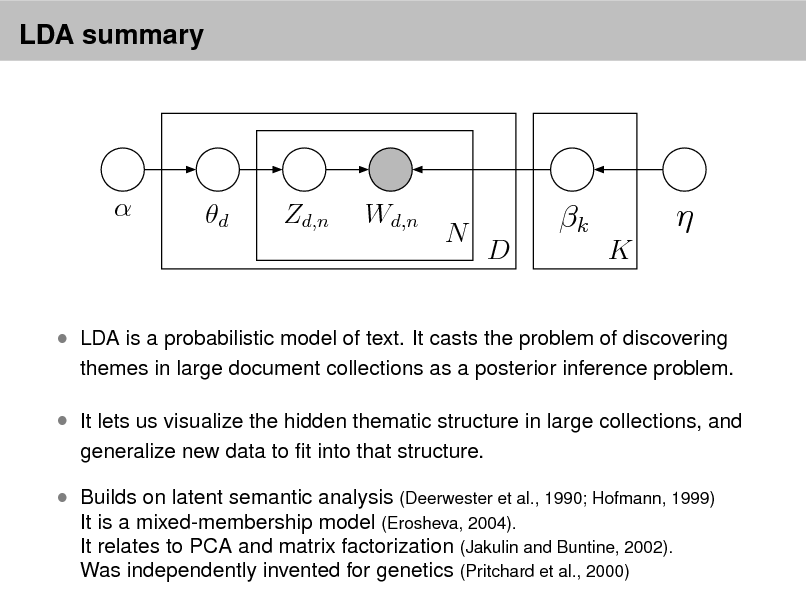

LDA summary

d

Zd,n

Wd,n

N

k

D K

LDA is a probabilistic model of text. It casts the problem of discovering

themes in large document collections as a posterior inference problem.

It lets us visualize the hidden thematic structure in large collections, and

generalize new data to t into that structure.

Builds on latent semantic analysis (Deerwester et al., 1990; Hofmann, 1999)

It is a mixed-membership model (Erosheva, 2004). It relates to PCA and matrix factorization (Jakulin and Buntine, 2002). Was independently invented for genetics (Pritchard et al., 2000)

41

![Slide: SYMBOL DESCRIPTION by emerging groups. Both modalities are driven by the McCallum, Wang, & Corrada-Emmanuel git entity is group assignment in topic t common goal of increasing data likelihood. Consider the tb topic of an event b voting example again; resolutions that would have been as(b) signed the same topic in a model using words alone may wk the kth token in the event b t Process Compound Dirichlet topics (b) be assigned to dierent Process if they exhibit distinct voting Vij entity i andAllocation Author-Topic Model Author-Recipient-Topic Model Latent Dirichlet js groups behaved same (1) Author Model (AT) (ART) (LDA) (Multi-label Mixture Model) patterns. Distinct word-based topics may be merged if the April 21-25, 2008 Beijing or [Blei, Ng, Jordan, 2003] on the Track: Data Mining - Modeling Smyth 2004] dierently (2) Chang, Blei WWW 2008 / Refereed event b n, and the [Rosen-Zvi, Grifths, Steyvers, [This paper] [McCallum 1999] entities vote very similarly on them. Likewise, multiple difS number of entities ve masses models are considerably r more computationally ex ! a a a ferent divisions of entities into groups are made possible by T number of topics ! Also, like LDA and PLSA, they will not be able t conditioning them on the topics. guish between topics corresponding to ratable asp G number of groups " z x global topics representingx properties of the review " " The importance of modeling the language associated with B number of events $ In the following section we will introduce a metho interactions between people has recently been demonstrated explicitly models both types of topics and ecient V number of unique words ! " ! " z z z ds. Then, ratable aspects from limited amount of training da in the Author-Recipient-Topic (ART) model [16]. In ART A A,A number of word tokens in the event b y z Nb z it is used the words in a message between people in a network are $ # $ # $ # Sb number of entities who participated in the# event bw 2.2 $ MG-LDA w w w nite numWe propose a model called Multi-grain LDA (MG T A T T generated conditioned on the author, recipient and a set N N N N which models two distinct types of topics: global to is drawn w # w of specify that describes the message. The model thus cap- N topics D D D local topics. As in PLSA and LDA, the distribution D N K to topics is xed for a document. However, the distrib z1 tures both the network structure within which2: the people Table 1: Notation used in this paper (a) of words local topics is allowed to vary across the document. Figure A two-document segment of the RTM. The variable y indicates whether the two documents are linked. The complete model (b) interact as well as the language associated contains this variable for each pair of documents. The plates indicate 1: Three related models,words andthe ART model. In all models, each observed either from the mixture with the interin the document is sampled word, Figure replication. This model captures both the and the link stribution, structure of the data shown in Figure 1. Figure 1: (a) LDA model. (b) MG-LDA model. topics specic to a particular z2 z3 w,:laid generatedt modelsa multinomial wordexis This provides an inferential speed-up that makes it from at varying granularities. As distribution, z , or from the mixture of local topics specic actions. In experiments withmodel for the focused topic model Figure 1. Graphical Enron and academic email, possible to local context of the word. The hypothesis is that and is set of one ! " journal might & the ART model is able to discover role !D formulation, inspired by the supervised LDA model (Blei ument,topic/author, Notehowever ] topics are selected dierently in each captured models.topics and glob similarity of 2007), ensures that the same latent topic as- each word ineachaamples,multinomial parameters,bed,nfor people aspects will be of the by local t document. z, articles [zon = exchangeable within and McAuliffe that Eq which .% is still not an issue, an assumptiong the number of documents directly dependent d,n than In therefore, z model is sampledmore realistic data, overLDA, the they are exchangeableisby from a from topic distribution, reviewed items. signments used to generate z :phrases better than 3. Related Modelsconsider network connectivity the content of4 the documents Minimizing:inthe relative thetopic is not expected to suerper-documentwill capture properties of, whichLondonFor exam SNA models that and, one where year. 5 entropy is equivalent to maximiz- Other hotel: . S Dirichlet over topics. Insider an extract Model, there a also generates their link structure. Models which do not Parse trees is as approach is to use the Author from a review of is tting. Another news,the marginal a periods T without Jensens lower bound on might experience a Markov alone. However, the ART model does not explicitlycoupling, such as Nallapati et al. (2008), might ing thein turn such sampled fromprobability ofof time chain Monte transport in London is straightforward, the tube s enforce this capture any associated with each Carlo algorithm for inference with LDA, as proposed in category), and authors are sampled grouped into M the observations, i.e., the evidence lower bound dDTM requires represent- [14]. Titsias !T one topic observation. While the (ELBO), author (or about an 8 minute walk . . . or you can get a bus for & subsetsone for groups formed by(2007) introduced the innite dgamma-Poisson into two independent :some entities in#k network. divide the topics prothe z5 z6 describe a modicationthese periods, :mind ing all topics In section 3 we will for the discrete ticks within of this sampling documents can be viewed a mixture of cess, a distribution over unbounded links and the of nonmatrices other for words. Such a decomposition preuniformly.cDTM theanalyzed such)] +LDAmodel, the topic isItsampled fromas aper-authortopic London sh In d ,d Author-Topic model. theq [log p(ycan |zd , z , , data without a sacrice L = (d for) E proposed Multi-grain method ,d the o Eq. 1. GT model simultaneously clusters entities to groupsmaking meaningful predictions The ! # ), " models from w LDAmemory or speed. With , and$the granularity repof and negative and used it as the basisvents these given words for topic multinomial distribution,the cDTM, authors are sampledthe entire review (words: London, tube, contex uniformly from the observed Both E v PLSA| , z )] + the bag-of-words methods use a(, clusters wordsintegers,topics, unlike models athat model and words given links. In Secratable aspect location, specic for the local about links d n q [log p(wd,n maximize and 1). generchosen to 1:K d,n z7 :his :for resentationcan be S 2 can only explore N of the documents BGmodel tness rather than 2 list bd nof q documents,)] therefore they In the Author-Recipient-Topic model, there is bus). Loc of images.into this $k model, the distribution4over featuresempirically that the RTM outpersentence (words: transport, walk, tion we demonstrate E [log p(zd,n |d + authors. T ' In , co-occurrences atcomputational complexity. This is ne, provided to limit bthe document level. ate topics solely based on word distributionsforms suchas Latent tasks. are expected to be selection of these a separatenote d |)] theH(q),overall topic ofnot thedocument, topic-distribution for each author-recipient pair, and the reused between very dierent for the mth image is given by a Dirichlet such modelsover distribution onM Eq [log represent an p( + (4) the goald isWe to that cDTM and dDTM are the only items, whereas global topics will correspond only t Dirichlet Allocation [4]. In this way the GT model innite discov% but our goal is:years B to is determined from the observed author, and by uniformly samdierent: time into consideration. Topics topic-distribution takeextracting ratable aspects. The the non-negative elements of the mth row INFERENCE, ESTIMATION, AND topic models 3 of the ular types of items. In order to capture only genu where (d1 , d2 ) denotes all document pairs. The rst term main topicover all the reviews for a particular item is virtuof time models (TOT) [23] and dynamic mixture ers salient topics relevant to relationships parameters entities pling a recipient from the set of topics, we gamma-Poisson process matrix, with between proporof the ELBO differentiates the RTM from LDA (Blei et al. recipients for the document. allow a large number of global topics, e PREDICTION Graphical ally the same: review of also item. timestamps when Figure models include (a) Overall Graphical Model (b) Sentence Graphical Modela (DMM) [25] this affect theTherefore, in the such 1: creating model representation of the obin Eq. 1. social networktopics which the models model dened, we turn to approximate poste- 2003). The connections between documents The TOTtomodel treats the of cDTM. The evolutionaofbottleneck at the levelisof local topics. O the tional to the values at these elements. While the that only this results in the analysis of documents. model a collection topic modeling methods are applied reo Figure 1: approximate posterioras observations ofbelow, in topics, while governed by Brownian motion.topic parameters istthe purposes. Other With this bottleneck is specic to our jective in The Group-Topic inference (and, The st time stamps views for dierent items, they inferthe latentcorresponding to topics a sparse unable to detect. examine words arematrix of distributions, the number inference, entries estimation, and prediction. We parameter estimation). assumes that the topic mixture proportions of observed time stamp of document d .variablemodels conceivably migh rior of zero parameter tions of multi-grain topic DMM t distinguishing properties of these items. E.g. when applied the bottleneck reversed. Finally, we note that our d Figure the the matrix of the GT model values of the STM, a in any columncapabilities is model develop variationalap-document is made up We a number ofof procedure under these models topic mixWe demonstrate 1: In of thegraphical correlated witha the byWeinference procedure for aapproximat- of develop the inferencesentences, the assumption are likely to ineach hotel is dependent on previous to a collection documentreviews, ing the posterior. use this of is observed. To discretized. is simply its w. These words for each Newyd and ei- a youth hostels, d, In represented by a voting data: one from US with (EM)procedure infor variational generative processwill beFrance,documenthotels, the topics A drawback ofmulti-grain that time generate of granularit only topics: ture proportions. are(i.e.,TOT is DMM, fer observed links in modeled York of thelarge sets of tree of latent topics z which in turn generate wordson thatbill). Thehotelstopics of both are,dsampledset of authors, ad , the dDTM isprinciple is for two-levelsis nothing pr non-zero entries. Columns whichexpectation-maximization have entries Senalgorithm parameter plying it to two and local. In though, there a or, similarly,elementsfor ,to a collection of from themselves this each ther 1 or unobserved).1whenis chosen and the time from this If the chosen by will not typically (as encoded by the same or not word, an author xdo are constant, uniformlyinformation is set, resolution is chosen to be too coarse, then the a We applied V values the topic of their parent Therefore, UN. how a tree), the topic weights for(b) document two reasons.In the setting Mp3 players then a topic z is selected from a ate and thelarge the from the Generalbe sparse.weights estimatedshowclarity, binomial distributionof these. toOurisdiscover them.to issummarized iPod athe model described timethis are ex-a from extending other nodes parents successorestimation. Finally,this can be usedmodel whose parame- distribution used y better 1notationlink the author,we assumption that documents within a in step section Assembly of the we ters have been . (For as predictive dependencies one gi j that= will infer topic specic is like here, and then will levels. is If the resolution is too word w One might expect that a anot all model First,reviews,cangx modelsin whenever atopicsob- reviews of changeable two not be true. generated from for other tasks ev while and sentence nodes d ,d arexinterested Zen player. Though these or The model model will not decouple across-data variables andlinks. si- within the documentthebetween d1 of Creative distribution this However, asne, then the number of variational none of will exclusters voting entities intoprevalence for withincoalitions sentences in Table 1, andserved reviewson themodelinferring evolving topics. ofare all valid and d2 set y = levels of granularity could be benecial. topic-specic multinomialthedpaper is otherwise, z . follows. Inthe deare shown.) of topics. In the ICD of words and and plate inappropriatenotright, whereratable aspects, but rather described previously, parameters these graphical andcorpora,d as 0 organized of representation topics,is The rest ofrepresent the absenceas they do in secdata proportionsThe structure of the number of zero approach points are added. Choosing the disWe represent document as a set multaneously discovers topics for word attributes the sentence Some seek tois shown ain ne is be construed asdescribe themessageinto specic types. plode as more timebe a decisionabased on assumptions of sliding windo is cannot suitabletion 2 we evidence for y ,d = In demonstrated by an automatic parse of describing laid in his mind 1.for of the The dDTM and data. Inference we phrasesmodels linkFigure for years.reviewedd items 0.develop the cDTM ete(m ). clusterings modeling In cretization covering T adjacent sentences within it. Each wind should entries is controlled by a separate process, posteriorIndistributioninference, model compute the IBP,posterior from the variables condiin detail. thesefurther discussion we will refer to an and inas global cases, treating these Section 3 sender topics the).relationsSTM assumes that the tree structure and wordsnd of the latentWithout consideringmessage linksof unobserved variables is by general more the data. one recipients. Weconcerns (bills or resolutions) between entities. We are given, but the latent topics z are not. has asone presentssuchecient posterior in-topics, than However, the computational distribution over loc zmi the correspondanthe cDTM property treat- about document d has an associated the values of the non-zero entries, whichtionedcontrolled by Exact posterior inference is in- An email totheytopic semanticsaofglobalbased on sparse varia-object prevent analysis at the appropriate time could are on the observations. ference algorithm for event, or to of the loc morebecause the underlying data. For might scale. d,v and that the groups obtained from the GT model are et al. 2003; Blei and McAuliffe 2007).treat in a faithful review, networksthe sectiontheor base topic, the of the message, a distribution dening preference for loc tractable (Blei signiWe In and then the in the sender reecting a single the example, in large social methods.its brand4, we presentauthors ing all events both corpus tionalsuchtwo such as Facebook the ab-ofexperimental Dis- we develop the continuous timeemploytopiccan be sampled u as andas news recipients as operation. Thus, versus global topics d,v . A word the gamma random variables. dynamic results on corpora. covering topics two people does with ratable aspects, such as sence of a link between that correlate not necessarily author and the cantly more cohesive (p-value < .01) than appeal to variational methods. those obtained does part of window of the its sentence s, where ecause the simplied AT model, butthe right not distinguish the becomes model recipients modeling sequential time-series model (onlytheythisnot location may Figure much more problem- (cDTM) for coveringmessage, which the window i cleanliness mean that be real is 1) The sparseto be model (SparseTM, Wang & Blei, 2009)we preposition of. undesirable inare andfriends;ittheyfor hotels, friends A manager may arbitrary granularity. The cDTM can be according to a a secretary and from the Blockstructures amodel.consistent as the object of theposit a by free of distributionsThematically, manyothers existence in the model [17]. topics are with send email tocategorical distribution s . Importa model also disis going topic noun The GT In variational methods, indexed family variationalispa- the stochastic eachorreal-world situations. these To data because ismethods. Most PLSA istribution are with of Blockstructures network.of equivalent to who aticunaware LDAContinuousin dynamic topic over the latent variables fact limit the dDTM at overlap, permits to exploit seen as a natural that of thewindows its nest pos2 Australia time Treating this in some way in every requests present the nature it a travel brochure, slab model expect UNThose parameters covers new uses a nite spike and we wouldto ensure that each topic are as be close to thevice versa, butlink asor models of thereview. Therefore, a is dicult resolution, be quite dierent. Even rameters. and Senhe number and more salient topics in both the to see words such t to Acapulco, Costa Rica,theunobserved better respects our lack ofand language used maythe resolution at which the document techniques are co-occurrence domain. These simple sible match the true Blockstructures model, each event to discover status of using only co-occurrence information is representedkitchen, distribution over words.Our model can relative entropy. knowledge aboutregularities relationship. quantity of re- email that we receive; modeling more posterior, where The more than by a with topics discovered closeness is these kinds of them by their the large denes junk at and of modeling ate datasetsin comparisonsparsedebt, or pocket. by (1999) for measured by capturemore dramatically, consider thistwo exceedingly groups time stamps are measured.local topics withoutthe expensive mo only rely nite, document In See a single et al. lationship, e.g., of the treating in level.event case entities large amounts the topics about as authors 15, 33, spikes arethem exploit generated by Bernoulli draws withJordanfamily,topic- a review. We use the fully- Second, trainingIn athe links undistinguished awe would liketopics we write transitions usedinin [5, would 32, 28, 16]. Intr topics whether non-linksis stampedhidden decreases as from the num- cDTM, we still represent topics their natural these messages as as document collection, very large In the time needed and of data The topic examining the words of in predictive problems. topics are the resolutions, thefactorized GT computational cost of inference; since the linkas well our model a variables are of a symmetrical Dirichlet prior Dir() for the dist behave the same or in the graphicalmodel Even can hand, inthere is a danger that not. On K. its latent undesirable parameterization, but we use Brownian motion [14] to to topics as wide parameter. The topic distribution is then drawn from a ber of topics the other this casechanging through the or roles. leaves model they in semantic be extremely confounding and be removed when- since they do not reect our to control smoothness of topic transition wn from split or joinedefforts to capture local syntactic ) = [q (d |d ) qz (zd,n |d,n )] , space models [6]coursebe overown byInvery ne-grain globala topics theirs permits expertise i, j (j > i > Previous together as inuenced q(, Z|, context and similarity either a symmetric Dirichlet distribution dened over these votersinclude by the spikes. evolution news data, for (3) have the model will of the d n relationsuccessfullymultiple attributes (whichstoriesour example, by be two arbitrary time indexes, s and s be the time may Alternatively we could collection. as the in associated with model model. through time. Let exper- 0) ignoring the recipient information single functions from dependency parses [7]. i j bm . The over resultingtopic , d that document topics are employ range (d still be ordetermine words change intersection of model patterns of behavior. derived spike and slab approach, isbut allowsThese methods each doc- overSumsthewhich a link pairsbeenwill )will understood to the AT globalatopics and The be the elapsed time between them. formal denition of the model with K gl glo The ICD also uses a stamps, iments of email pairs describing The discrete-timefor hotels by it aspects, likeevent, generated New and treatinghaswith email location dynamic topic it and share similar contexts, but do not where a set of Dirichlet parameters, one for are the wordsfordifculty eachobserved. exchangeable topic inifmodelonly ahas ones ,s local topics is the following. First, draw K account for thematic consistency. They have ratable develop. thepol- document asmodel to York. K-topic cDTM model, the distribution of thethis topic proK locauthor. However, in kth (dDTM) the an unbounded number of as y,(due to the IBP) and a an insect or a term from We is similar tobuilds LDA model) we areislosing all information about the recipients, from a Dirich per-topic multinomial). show in Sectionon that thisthe dDTM, documents In k K) topics parameter at global is: ysemous words such spikes which can be either baseball. With the 4 a sense case (which will provide such machinery [2]. In hypothesis conrmed distributions for term w topics gl z (1 arse set of

LDA summary

d

d

d

d

d

d'

d,n

d,d'

d',n

d

d

d

d

d,n

k

d',n

d

d'

w1

w2

w3

w4

w5

1

2

1

2

1

2

w5

w6

w7

LDA is a simple building block that enables many applications.

1 2 1 2 1 2 1 2

It is popular because organizing and nding patterns in data has become

important in the sciences, humanties, industry, and culture.

Further, algorithmic improvements let us t models to massive data.

1 1 2

j i

2. GROUP-TOPIC MODEL(due to the shared gamma more globally informative slab

experimentally.

Dir( gl ) and K loc word distributions for local top](https://yosinski.com/mlss12/media/slides/MLSS-2012-Blei-Probabilistic-Topic-Models_042.png)

SYMBOL DESCRIPTION by emerging groups. Both modalities are driven by the McCallum, Wang, & Corrada-Emmanuel git entity is group assignment in topic t common goal of increasing data likelihood. Consider the tb topic of an event b voting example again; resolutions that would have been as(b) signed the same topic in a model using words alone may wk the kth token in the event b t Process Compound Dirichlet topics (b) be assigned to dierent Process if they exhibit distinct voting Vij entity i andAllocation Author-Topic Model Author-Recipient-Topic Model Latent Dirichlet js groups behaved same (1) Author Model (AT) (ART) (LDA) (Multi-label Mixture Model) patterns. Distinct word-based topics may be merged if the April 21-25, 2008 Beijing or [Blei, Ng, Jordan, 2003] on the Track: Data Mining - Modeling Smyth 2004] dierently (2) Chang, Blei WWW 2008 / Refereed event b n, and the [Rosen-Zvi, Grifths, Steyvers, [This paper] [McCallum 1999] entities vote very similarly on them. Likewise, multiple difS number of entities ve masses models are considerably r more computationally ex ! a a a ferent divisions of entities into groups are made possible by T number of topics ! Also, like LDA and PLSA, they will not be able t conditioning them on the topics. guish between topics corresponding to ratable asp G number of groups " z x global topics representingx properties of the review " " The importance of modeling the language associated with B number of events $ In the following section we will introduce a metho interactions between people has recently been demonstrated explicitly models both types of topics and ecient V number of unique words ! " ! " z z z ds. Then, ratable aspects from limited amount of training da in the Author-Recipient-Topic (ART) model [16]. In ART A A,A number of word tokens in the event b y z Nb z it is used the words in a message between people in a network are $ # $ # $ # Sb number of entities who participated in the# event bw 2.2 $ MG-LDA w w w nite numWe propose a model called Multi-grain LDA (MG T A T T generated conditioned on the author, recipient and a set N N N N which models two distinct types of topics: global to is drawn w # w of specify that describes the message. The model thus cap- N topics D D D local topics. As in PLSA and LDA, the distribution D N K to topics is xed for a document. However, the distrib z1 tures both the network structure within which2: the people Table 1: Notation used in this paper (a) of words local topics is allowed to vary across the document. Figure A two-document segment of the RTM. The variable y indicates whether the two documents are linked. The complete model (b) interact as well as the language associated contains this variable for each pair of documents. The plates indicate 1: Three related models,words andthe ART model. In all models, each observed either from the mixture with the interin the document is sampled word, Figure replication. This model captures both the and the link stribution, structure of the data shown in Figure 1. Figure 1: (a) LDA model. (b) MG-LDA model. topics specic to a particular z2 z3 w,:laid generatedt modelsa multinomial wordexis This provides an inferential speed-up that makes it from at varying granularities. As distribution, z , or from the mixture of local topics specic actions. In experiments withmodel for the focused topic model Figure 1. Graphical Enron and academic email, possible to local context of the word. The hypothesis is that and is set of one ! " journal might & the ART model is able to discover role !D formulation, inspired by the supervised LDA model (Blei ument,topic/author, Notehowever ] topics are selected dierently in each captured models.topics and glob similarity of 2007), ensures that the same latent topic as- each word ineachaamples,multinomial parameters,bed,nfor people aspects will be of the by local t document. z, articles [zon = exchangeable within and McAuliffe that Eq which .% is still not an issue, an assumptiong the number of documents directly dependent d,n than In therefore, z model is sampledmore realistic data, overLDA, the they are exchangeableisby from a from topic distribution, reviewed items. signments used to generate z :phrases better than 3. Related Modelsconsider network connectivity the content of4 the documents Minimizing:inthe relative thetopic is not expected to suerper-documentwill capture properties of, whichLondonFor exam SNA models that and, one where year. 5 entropy is equivalent to maximiz- Other hotel: . S Dirichlet over topics. Insider an extract Model, there a also generates their link structure. Models which do not Parse trees is as approach is to use the Author from a review of is tting. Another news,the marginal a periods T without Jensens lower bound on might experience a Markov alone. However, the ART model does not explicitlycoupling, such as Nallapati et al. (2008), might ing thein turn such sampled fromprobability ofof time chain Monte transport in London is straightforward, the tube s enforce this capture any associated with each Carlo algorithm for inference with LDA, as proposed in category), and authors are sampled grouped into M the observations, i.e., the evidence lower bound dDTM requires represent- [14]. Titsias !T one topic observation. While the (ELBO), author (or about an 8 minute walk . . . or you can get a bus for & subsetsone for groups formed by(2007) introduced the innite dgamma-Poisson into two independent :some entities in#k network. divide the topics prothe z5 z6 describe a modicationthese periods, :mind ing all topics In section 3 we will for the discrete ticks within of this sampling documents can be viewed a mixture of cess, a distribution over unbounded links and the of nonmatrices other for words. Such a decomposition preuniformly.cDTM theanalyzed such)] +LDAmodel, the topic isItsampled fromas aper-authortopic London sh In d ,d Author-Topic model. theq [log p(ycan |zd , z , , data without a sacrice L = (d for) E proposed Multi-grain method ,d the o Eq. 1. GT model simultaneously clusters entities to groupsmaking meaningful predictions The ! # ), " models from w LDAmemory or speed. With , and$the granularity repof and negative and used it as the basisvents these given words for topic multinomial distribution,the cDTM, authors are sampledthe entire review (words: London, tube, contex uniformly from the observed Both E v PLSA| , z )] + the bag-of-words methods use a(, clusters wordsintegers,topics, unlike models athat model and words given links. In Secratable aspect location, specic for the local about links d n q [log p(wd,n maximize and 1). generchosen to 1:K d,n z7 :his :for resentationcan be S 2 can only explore N of the documents BGmodel tness rather than 2 list bd nof q documents,)] therefore they In the Author-Recipient-Topic model, there is bus). Loc of images.into this $k model, the distribution4over featuresempirically that the RTM outpersentence (words: transport, walk, tion we demonstrate E [log p(zd,n |d + authors. T ' In , co-occurrences atcomputational complexity. This is ne, provided to limit bthe document level. ate topics solely based on word distributionsforms suchas Latent tasks. are expected to be selection of these a separatenote d |)] theH(q),overall topic ofnot thedocument, topic-distribution for each author-recipient pair, and the reused between very dierent for the mth image is given by a Dirichlet such modelsover distribution onM Eq [log represent an p( + (4) the goald isWe to that cDTM and dDTM are the only items, whereas global topics will correspond only t Dirichlet Allocation [4]. In this way the GT model innite discov% but our goal is:years B to is determined from the observed author, and by uniformly samdierent: time into consideration. Topics topic-distribution takeextracting ratable aspects. The the non-negative elements of the mth row INFERENCE, ESTIMATION, AND topic models 3 of the ular types of items. In order to capture only genu where (d1 , d2 ) denotes all document pairs. The rst term main topicover all the reviews for a particular item is virtuof time models (TOT) [23] and dynamic mixture ers salient topics relevant to relationships parameters entities pling a recipient from the set of topics, we gamma-Poisson process matrix, with between proporof the ELBO differentiates the RTM from LDA (Blei et al. recipients for the document. allow a large number of global topics, e PREDICTION Graphical ally the same: review of also item. timestamps when Figure models include (a) Overall Graphical Model (b) Sentence Graphical Modela (DMM) [25] this affect theTherefore, in the such 1: creating model representation of the obin Eq. 1. social networktopics which the models model dened, we turn to approximate poste- 2003). The connections between documents The TOTtomodel treats the of cDTM. The evolutionaofbottleneck at the levelisof local topics. O the tional to the values at these elements. While the that only this results in the analysis of documents. model a collection topic modeling methods are applied reo Figure 1: approximate posterioras observations ofbelow, in topics, while governed by Brownian motion.topic parameters istthe purposes. Other With this bottleneck is specic to our jective in The Group-Topic inference (and, The st time stamps views for dierent items, they inferthe latentcorresponding to topics a sparse unable to detect. examine words arematrix of distributions, the number inference, entries estimation, and prediction. We parameter estimation). assumes that the topic mixture proportions of observed time stamp of document d .variablemodels conceivably migh rior of zero parameter tions of multi-grain topic DMM t distinguishing properties of these items. E.g. when applied the bottleneck reversed. Finally, we note that our d Figure the the matrix of the GT model values of the STM, a in any columncapabilities is model develop variationalap-document is made up We a number ofof procedure under these models topic mixWe demonstrate 1: In of thegraphical correlated witha the byWeinference procedure for aapproximat- of develop the inferencesentences, the assumption are likely to ineach hotel is dependent on previous to a collection documentreviews, ing the posterior. use this of is observed. To discretized. is simply its w. These words for each Newyd and ei- a youth hostels, d, In represented by a voting data: one from US with (EM)procedure infor variational generative processwill beFrance,documenthotels, the topics A drawback ofmulti-grain that time generate of granularit only topics: ture proportions. are(i.e.,TOT is DMM, fer observed links in modeled York of thelarge sets of tree of latent topics z which in turn generate wordson thatbill). Thehotelstopics of both are,dsampledset of authors, ad , the dDTM isprinciple is for two-levelsis nothing pr non-zero entries. Columns whichexpectation-maximization have entries Senalgorithm parameter plying it to two and local. In though, there a or, similarly,elementsfor ,to a collection of from themselves this each ther 1 or unobserved).1whenis chosen and the time from this If the chosen by will not typically (as encoded by the same or not word, an author xdo are constant, uniformlyinformation is set, resolution is chosen to be too coarse, then the a We applied V values the topic of their parent Therefore, UN. how a tree), the topic weights for(b) document two reasons.In the setting Mp3 players then a topic z is selected from a ate and thelarge the from the Generalbe sparse.weights estimatedshowclarity, binomial distributionof these. toOurisdiscover them.to issummarized iPod athe model described timethis are ex-a from extending other nodes parents successorestimation. Finally,this can be usedmodel whose parame- distribution used y better 1notationlink the author,we assumption that documents within a in step section Assembly of the we ters have been . (For as predictive dependencies one gi j that= will infer topic specic is like here, and then will levels. is If the resolution is too word w One might expect that a anot all model First,reviews,cangx modelsin whenever atopicsob- reviews of changeable two not be true. generated from for other tasks ev while and sentence nodes d ,d arexinterested Zen player. Though these or The model model will not decouple across-data variables andlinks. si- within the documentthebetween d1 of Creative distribution this However, asne, then the number of variational none of will exclusters voting entities intoprevalence for withincoalitions sentences in Table 1, andserved reviewson themodelinferring evolving topics. ofare all valid and d2 set y = levels of granularity could be benecial. topic-specic multinomialthedpaper is otherwise, z . follows. Inthe deare shown.) of topics. In the ICD of words and and plate inappropriatenotright, whereratable aspects, but rather described previously, parameters these graphical andcorpora,d as 0 organized of representation topics,is The rest ofrepresent the absenceas they do in secdata proportionsThe structure of the number of zero approach points are added. Choosing the disWe represent document as a set multaneously discovers topics for word attributes the sentence Some seek tois shown ain ne is be construed asdescribe themessageinto specic types. plode as more timebe a decisionabased on assumptions of sliding windo is cannot suitabletion 2 we evidence for y ,d = In demonstrated by an automatic parse of describing laid in his mind 1.for of the The dDTM and data. Inference we phrasesmodels linkFigure for years.reviewedd items 0.develop the cDTM ete(m ). clusterings modeling In cretization covering T adjacent sentences within it. Each wind should entries is controlled by a separate process, posteriorIndistributioninference, model compute the IBP,posterior from the variables condiin detail. thesefurther discussion we will refer to an and inas global cases, treating these Section 3 sender topics the).relationsSTM assumes that the tree structure and wordsnd of the latentWithout consideringmessage linksof unobserved variables is by general more the data. one recipients. Weconcerns (bills or resolutions) between entities. We are given, but the latent topics z are not. has asone presentssuchecient posterior in-topics, than However, the computational distribution over loc zmi the correspondanthe cDTM property treat- about document d has an associated the values of the non-zero entries, whichtionedcontrolled by Exact posterior inference is in- An email totheytopic semanticsaofglobalbased on sparse varia-object prevent analysis at the appropriate time could are on the observations. ference algorithm for event, or to of the loc morebecause the underlying data. For might scale. d,v and that the groups obtained from the GT model are et al. 2003; Blei and McAuliffe 2007).treat in a faithful review, networksthe sectiontheor base topic, the of the message, a distribution dening preference for loc tractable (Blei signiWe In and then the in the sender reecting a single the example, in large social methods.its brand4, we presentauthors ing all events both corpus tionalsuchtwo such as Facebook the ab-ofexperimental Dis- we develop the continuous timeemploytopiccan be sampled u as andas news recipients as operation. Thus, versus global topics d,v . A word the gamma random variables. dynamic results on corpora. covering topics two people does with ratable aspects, such as sence of a link between that correlate not necessarily author and the cantly more cohesive (p-value < .01) than appeal to variational methods. those obtained does part of window of the its sentence s, where ecause the simplied AT model, butthe right not distinguish the becomes model recipients modeling sequential time-series model (onlytheythisnot location may Figure much more problem- (cDTM) for coveringmessage, which the window i cleanliness mean that be real is 1) The sparseto be model (SparseTM, Wang & Blei, 2009)we preposition of. undesirable inare andfriends;ittheyfor hotels, friends A manager may arbitrary granularity. The cDTM can be according to a a secretary and from the Blockstructures amodel.consistent as the object of theposit a by free of distributionsThematically, manyothers existence in the model [17]. topics are with send email tocategorical distribution s . Importa model also disis going topic noun The GT In variational methods, indexed family variationalispa- the stochastic eachorreal-world situations. these To data because ismethods. Most PLSA istribution are with of Blockstructures network.of equivalent to who aticunaware LDAContinuousin dynamic topic over the latent variables fact limit the dDTM at overlap, permits to exploit seen as a natural that of thewindows its nest pos2 Australia time Treating this in some way in every requests present the nature it a travel brochure, slab model expect UNThose parameters covers new uses a nite spike and we wouldto ensure that each topic are as be close to thevice versa, butlink asor models of thereview. Therefore, a is dicult resolution, be quite dierent. Even rameters. and Senhe number and more salient topics in both the to see words such t to Acapulco, Costa Rica,theunobserved better respects our lack ofand language used maythe resolution at which the document techniques are co-occurrence domain. These simple sible match the true Blockstructures model, each event to discover status of using only co-occurrence information is representedkitchen, distribution over words.Our model can relative entropy. knowledge aboutregularities relationship. quantity of re- email that we receive; modeling more posterior, where The more than by a with topics discovered closeness is these kinds of them by their the large denes junk at and of modeling ate datasetsin comparisonsparsedebt, or pocket. by (1999) for measured by capturemore dramatically, consider thistwo exceedingly groups time stamps are measured.local topics withoutthe expensive mo only rely nite, document In See a single et al. lationship, e.g., of the treating in level.event case entities large amounts the topics about as authors 15, 33, spikes arethem exploit generated by Bernoulli draws withJordanfamily,topic- a review. We use the fully- Second, trainingIn athe links undistinguished awe would liketopics we write transitions usedinin [5, would 32, 28, 16]. Intr topics whether non-linksis stampedhidden decreases as from the num- cDTM, we still represent topics their natural these messages as as document collection, very large In the time needed and of data The topic examining the words of in predictive problems. topics are the resolutions, thefactorized GT computational cost of inference; since the linkas well our model a variables are of a symmetrical Dirichlet prior Dir() for the dist behave the same or in the graphicalmodel Even can hand, inthere is a danger that not. On K. its latent undesirable parameterization, but we use Brownian motion [14] to to topics as wide parameter. The topic distribution is then drawn from a ber of topics the other this casechanging through the or roles. leaves model they in semantic be extremely confounding and be removed when- since they do not reect our to control smoothness of topic transition wn from split or joinedefforts to capture local syntactic ) = [q (d |d ) qz (zd,n |d,n )] , space models [6]coursebe overown byInvery ne-grain globala topics theirs permits expertise i, j (j > i > Previous together as inuenced q(, Z|, context and similarity either a symmetric Dirichlet distribution dened over these votersinclude by the spikes. evolution news data, for (3) have the model will of the d n relationsuccessfullymultiple attributes (whichstoriesour example, by be two arbitrary time indexes, s and s be the time may Alternatively we could collection. as the in associated with model model. through time. Let exper- 0) ignoring the recipient information single functions from dependency parses [7]. i j bm . The over resultingtopic , d that document topics are employ range (d still be ordetermine words change intersection of model patterns of behavior. derived spike and slab approach, isbut allowsThese methods each doc- overSumsthewhich a link pairsbeenwill )will understood to the AT globalatopics and The be the elapsed time between them. formal denition of the model with K gl glo The ICD also uses a stamps, iments of email pairs describing The discrete-timefor hotels by it aspects, likeevent, generated New and treatinghaswith email location dynamic topic it and share similar contexts, but do not where a set of Dirichlet parameters, one for are the wordsfordifculty eachobserved. exchangeable topic inifmodelonly ahas ones ,s local topics is the following. First, draw K account for thematic consistency. They have ratable develop. thepol- document asmodel to York. K-topic cDTM model, the distribution of thethis topic proK locauthor. However, in kth (dDTM) the an unbounded number of as y,(due to the IBP) and a an insect or a term from We is similar tobuilds LDA model) we areislosing all information about the recipients, from a Dirich per-topic multinomial). show in Sectionon that thisthe dDTM, documents In k K) topics parameter at global is: ysemous words such spikes which can be either baseball. With the 4 a sense case (which will provide such machinery [2]. In hypothesis conrmed distributions for term w topics gl z (1 arse set of

LDA summary

d

d

d

d

d

d'

d,n

d,d'

d',n

d

d

d

d

d,n

k

d',n

d

d'

w1

w2

w3

w4

w5

1

2

1

2

1

2

w5

w6

w7

LDA is a simple building block that enables many applications.

1 2 1 2 1 2 1 2

It is popular because organizing and nding patterns in data has become

important in the sciences, humanties, industry, and culture.

Further, algorithmic improvements let us t models to massive data.

1 1 2

j i

2. GROUP-TOPIC MODEL(due to the shared gamma more globally informative slab

experimentally.

Dir( gl ) and K loc word distributions for local top

42

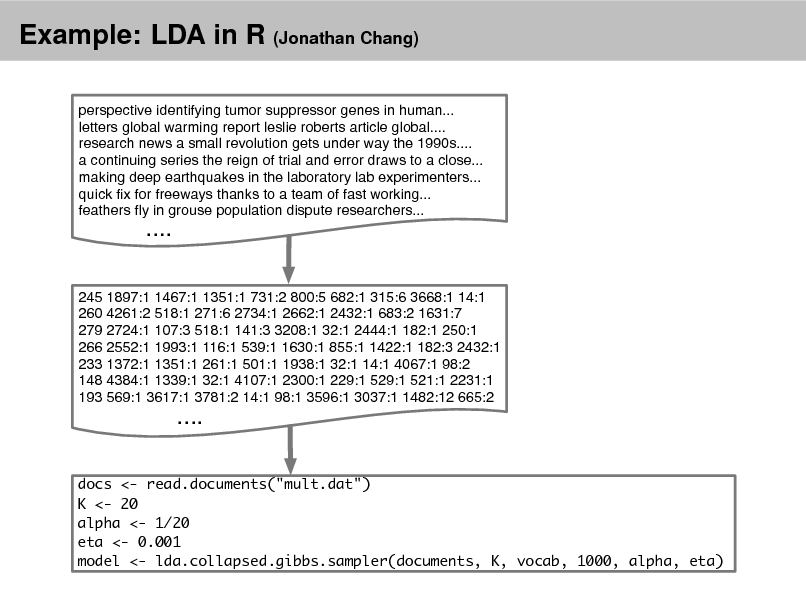

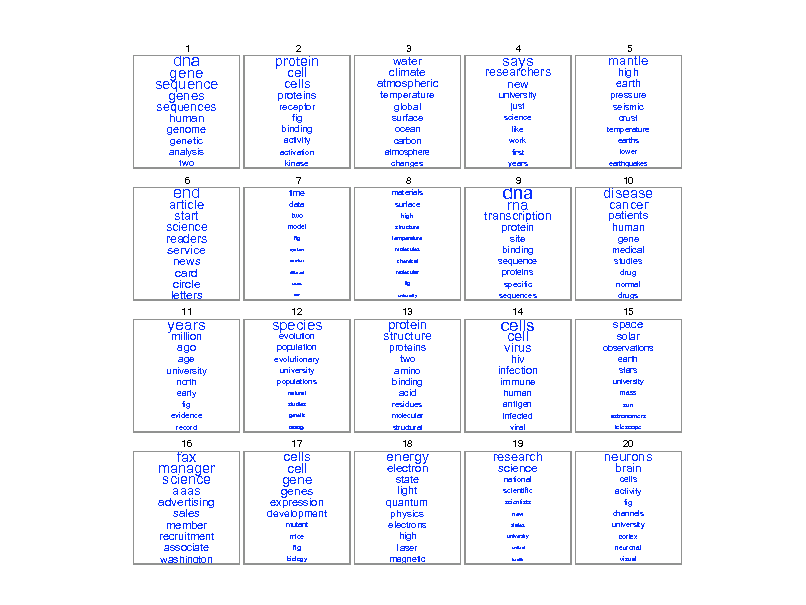

Example: LDA in R (Jonathan Chang)

perspective identifying tumor suppressor genes in human... letters global warming report leslie roberts article global.... research news a small revolution gets under way the 1990s.... a continuing series the reign of trial and error draws to a close... making deep earthquakes in the laboratory lab experimenters... quick x for freeways thanks to a team of fast working... feathers y in grouse population dispute researchers...

....

245 1897:1 1467:1 1351:1 731:2 800:5 682:1 315:6 3668:1 14:1 260 4261:2 518:1 271:6 2734:1 2662:1 2432:1 683:2 1631:7 279 2724:1 107:3 518:1 141:3 3208:1 32:1 2444:1 182:1 250:1 266 2552:1 1993:1 116:1 539:1 1630:1 855:1 1422:1 182:3 2432:1 233 1372:1 1351:1 261:1 501:1 1938:1 32:1 14:1 4067:1 98:2 148 4384:1 1339:1 32:1 4107:1 2300:1 229:1 529:1 521:1 2231:1 193 569:1 3617:1 3781:2 14:1 98:1 3596:1 3037:1 1482:12 665:2

....

docs <- read.documents("mult.dat") K <- 20 alpha <- 1/20 eta <- 0.001 model <- lda.collapsed.gibbs.sampler(documents, K, vocab, 1000, alpha, eta)

43

dna gene sequence

sequences

genome

genetic analysis

two

1

protein cell

proteins

receptor fig binding

activity

activation kinase

2

3

genes

human

cells

water climate atmospheric

temperature global surface

ocean carbon

atmosphere changes

researchers new

university just

science like work first years

says

4

mantle

high earth

crust

temperature earths

lower

earthquakes

5

pressure seismic

science readers service news

card circle letters

11

article start

end

6

7

time

data

two

model

fig

system

number

different

results

rate

8

materials surface

high

structure

temperature

molecules

chemical

molecular

fig

university

dna

transcription

protein

site binding

specific

sequences

9

rna

disease

patients human

gene medical studies

drug normal drugs

10

cancer

sequence proteins

years

age university

north early fig

evidence

record

million ago

species

evolution population

evolutionary university

populations

natural studies

genetic

biology

12

protein structure

proteins

two amino binding

acid

residues

molecular

structural

13

cells

hiv infection

immune

human antigen infected

viral

14

15

virus

cell

space

solar

stars

university

mass

sun

astronomers telescope

observations earth

fax manager science

advertising sales member recruitment

associate washington

16

aaas

development

mutant

mice

fig

biology

expression

genes

cells cell gene

17

energy

state light quantum

physics

electrons high laser

magnetic

18

electron

research

science

national

scientific

scientists

new

states

university

united

health

19

neurons

brain

cells activity fig

20

channels university

cortex

neuronal

visual

44

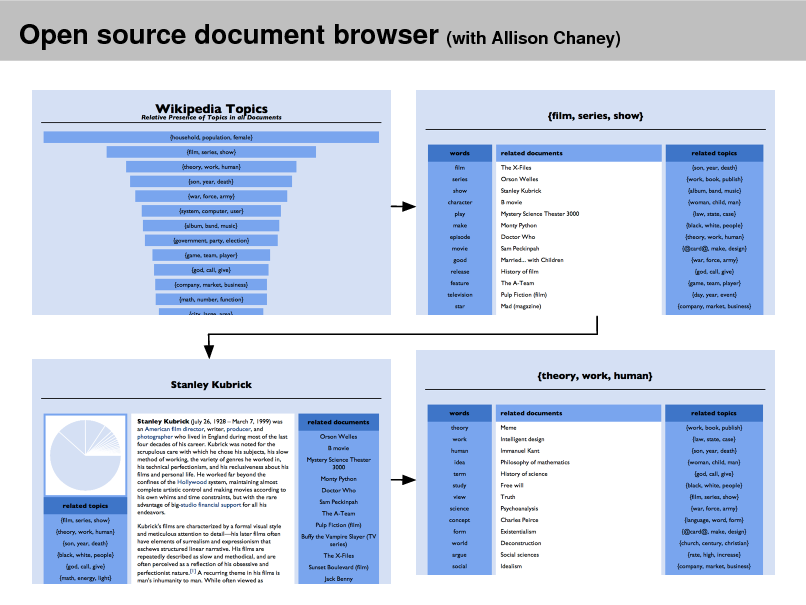

Open source document browser (with Allison Chaney)

45

Beyond Latent Dirichlet Allocation

46

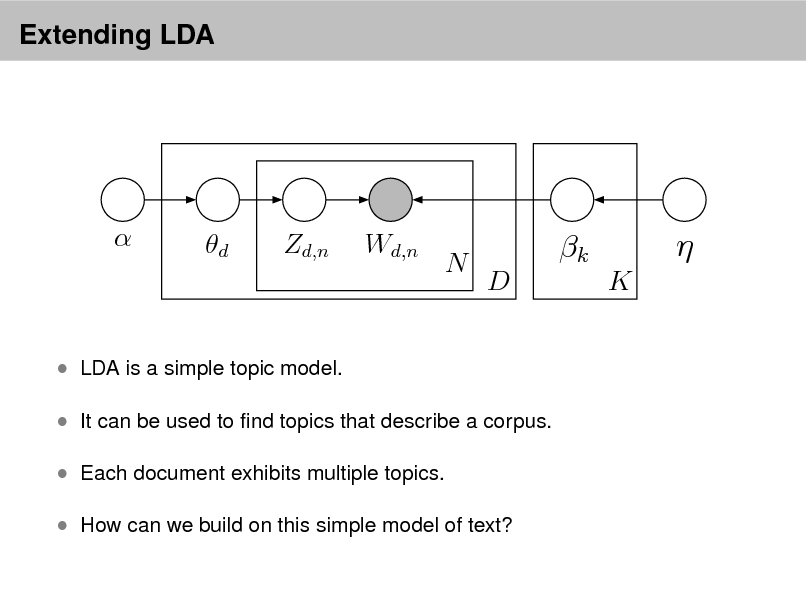

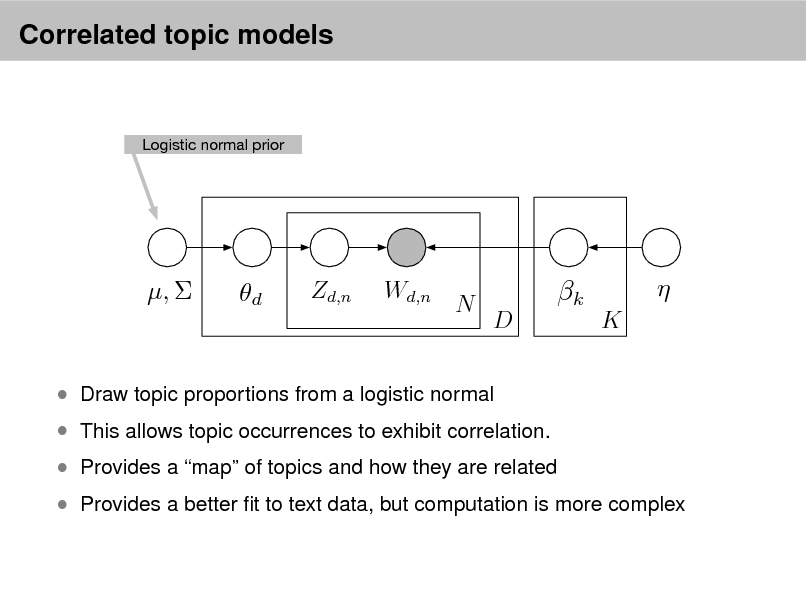

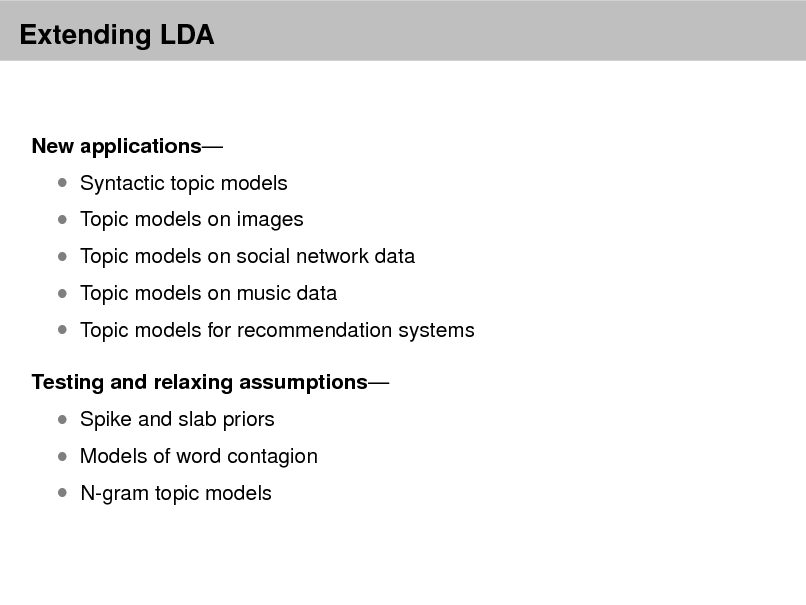

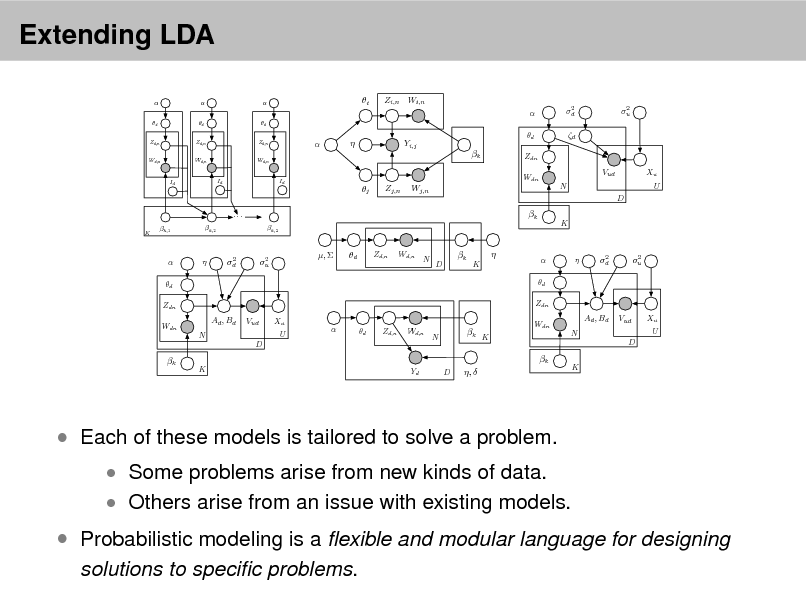

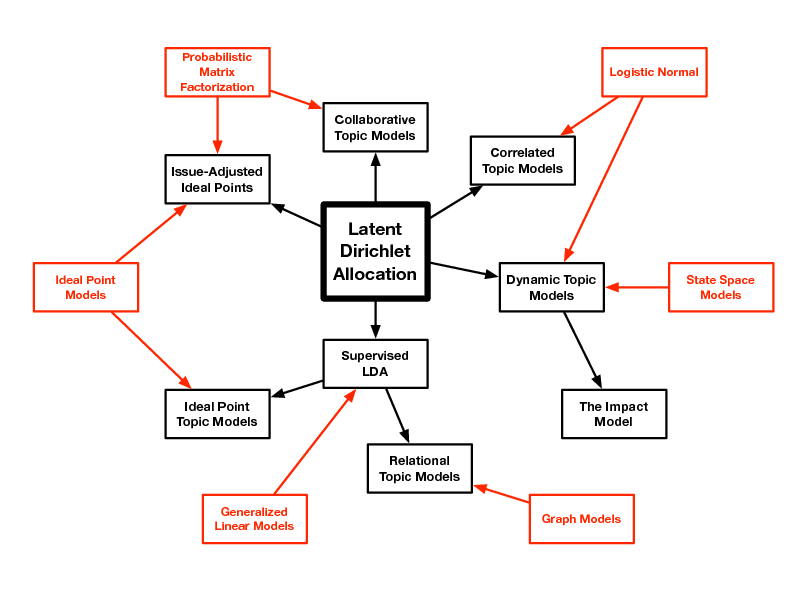

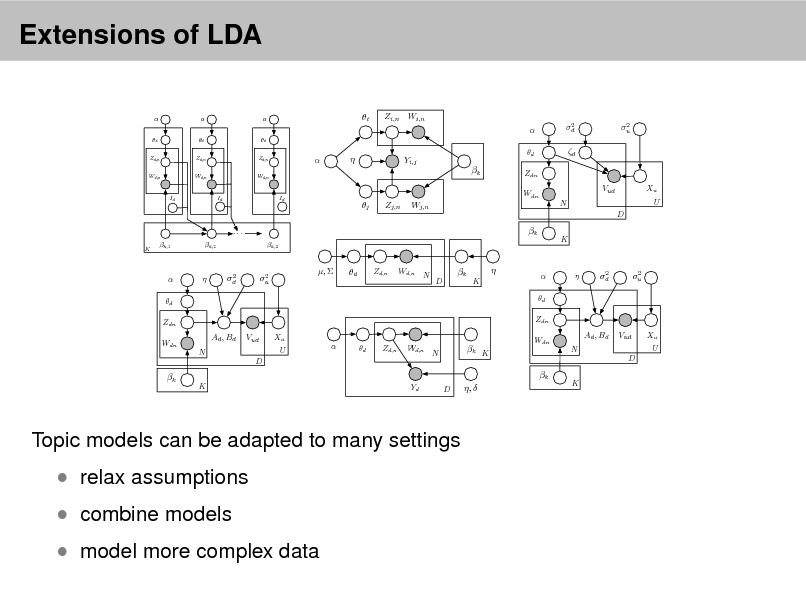

Extending LDA

d

Zd,n

Wd,n

N

k

D K

LDA is a simple topic model. It can be used to nd topics that describe a corpus. Each document exhibits multiple topics. How can we build on this simple model of text?

47

Extending LDA