Plug & Play Generative Networks: Conditional Iterative Generation of Images in Latent Space

Quick links: arXiv (pdf) | code | project page

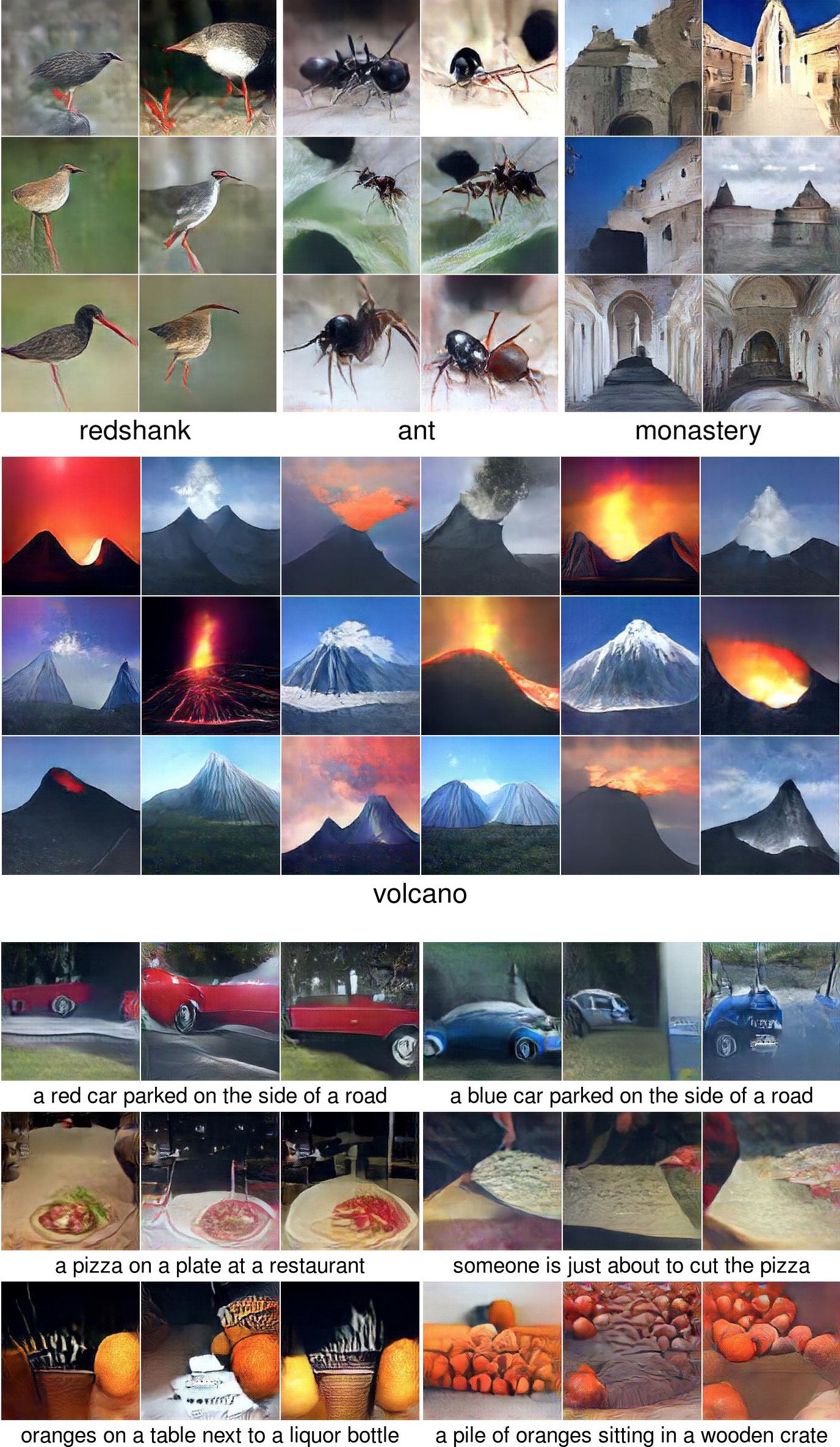

PPGNs can be used to generate images for classes from a dataset like ImageNet (top) and can also be used to turn an image → text caption model into a text → image inverse caption model (bottom).

Casual Abstract

Following the gradient of a classifier model — a model that learns p(y|x) — in image space results in adversarial or fooling images (Szegedy et al, 2013, Nguyen et al, 2014). Adding a p(x) prior containing just a few simple, hand-coded terms can produce images that are much more recognizable (Yosinski et al, 2015), but there are three problems that prevent this approach from being a Magnificent and Consistent Generative Model:

- (a) the colors (and other image stats) are all wrong,

- (b) the images produced have little diversity, and

- (c) the model is not consistent, because the p(x) was just hacked together (not learned), and because the sampler was also just a hacked together for loop.

For the last two years we’ve been trying to solve these problems. Our NIPS 2016 paper “Synthesizing the preferred inputs for neurons in neural networks via deep generator networks (Nguyen et al. 2016)”, solves problem (a) by using the Generator network of Dosovitskiy et al, 2016, trained with DeepSim loss (image reconstruction + code reconstruction + GAN loss), which results in much more realistic colors and images.

This paper — PPGN — solves (b) and (c) by formalizing the sampling procedure as an approximate Langevin MCMC sampler, revealing that a noise term should be added and giving which exact gradients are appropriate: grad log p(x) and grad log p(y|x), the latter term slightly different from the logit gradient that has been used in the past. It turns out that using the correct terms, albeit with manually tuned ratios, results in even better samples. The chain mixes quickly too (see video below), which is a bit weird and surprising.

The approach is dubbed “Plug & Play Generative Networks” because the p(x) and p(y|x) halves can be decoupled, allowing one to transform any classifier p’(y|x) into a generative network by combining it with the p(x) generator half. Intuitively, the performance of the resulting PPGN will be good if the x distributions between the two training sets match and worse to the extent that they do not match. However, as the general success of transfer learning would suggest, it seems to work fairly well even when transferring a generator learned on ImageNet to classifiers learned on different datasets. This even holds when transferring to a caption model, a particularly compelling use case that allows one to transform an image → text caption model into a text → image model without any extra training.

Fast mixing

Each frame is one step in the Markov chain defined by the approximate Langevin sampler defined in the paper. See a few other videos in this playlist.

Posted December 4, 2016.