Sequential Monte Carlo Methods for Bayesian Computation

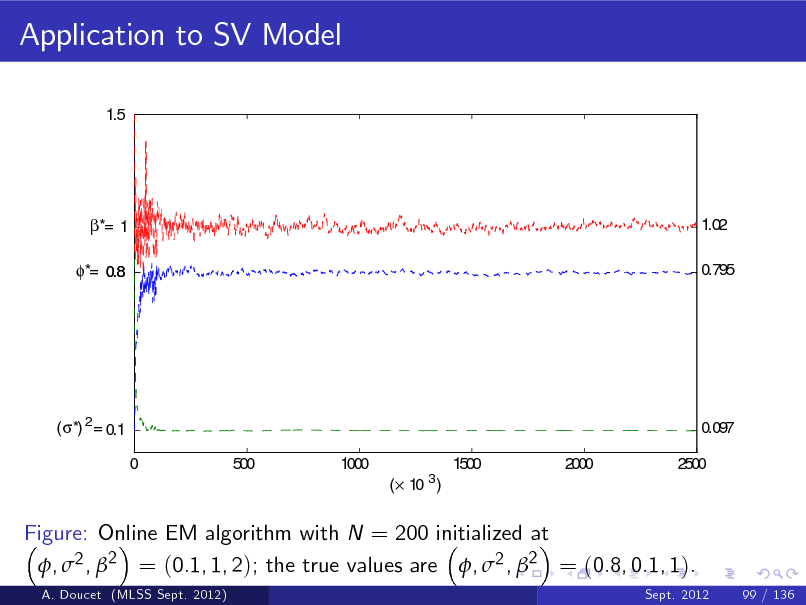

A. Doucet

Kyoto

Sept. 2012

A. Doucet (MLSS Sept. 2012)

Sept. 2012

1 / 136

Sequential Monte Carlo are a powerful class of numerical methods used to sample from any arbitrary sequence of probability distributions. We will discuss how Sequential Monte Carlo methods can be used to perform successfully Bayesian inference in non-linear non-Gaussian state-space models, Bayesian non-parametric time series, graphical models, phylogenetic trees etc. Additionally we will present various recent techniques combining Markov chain Monte Carlo methods with Sequential Monte Carlo methods which allow us to address complex inference models that were previously out of reach.

Scroll with j/k | | | Size

Sequential Monte Carlo Methods for Bayesian Computation

A. Doucet

Kyoto

Sept. 2012

A. Doucet (MLSS Sept. 2012)

Sept. 2012

1 / 136

1

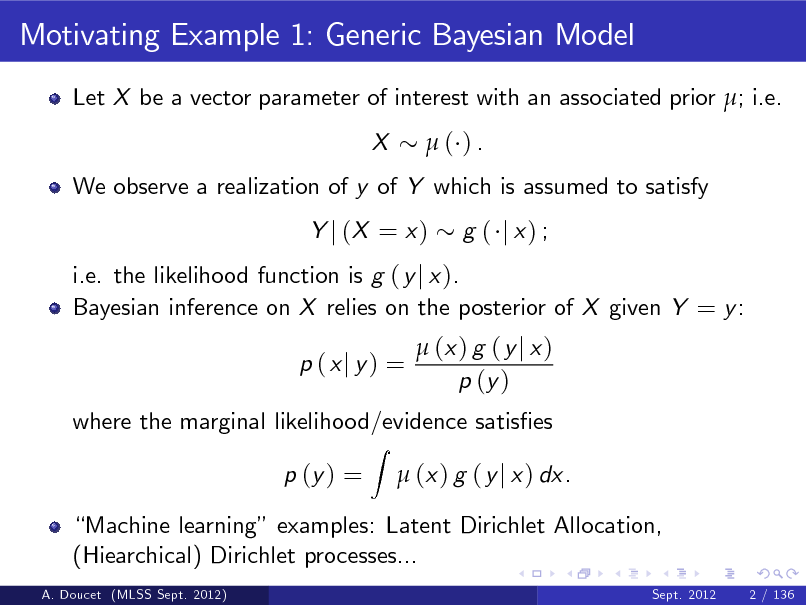

Motivating Example 1: Generic Bayesian Model

Let X be a vector parameter of interest with an associated prior ; i.e. X ( ).

A. Doucet (MLSS Sept. 2012)

Sept. 2012

2 / 136

2

Motivating Example 1: Generic Bayesian Model

Let X be a vector parameter of interest with an associated prior ; i.e. X ( ).

We observe a realization of y of Y which is assumed to satisfy Y j (X = x ) i.e. the likelihood function is g ( y j x ). g ( j x) ;

A. Doucet (MLSS Sept. 2012)

Sept. 2012

2 / 136

3

Motivating Example 1: Generic Bayesian Model

Let X be a vector parameter of interest with an associated prior ; i.e. X ( ).

We observe a realization of y of Y which is assumed to satisfy Y j (X = x ) g ( j x) ;

i.e. the likelihood function is g ( y j x ). Bayesian inference on X relies on the posterior of X given Y = y : p (xj y) =

Z

(x ) g ( y j x ) p (y )

where the marginal likelihood/evidence satises p (y ) = (x ) g ( y j x ) dx.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

2 / 136

4

Motivating Example 1: Generic Bayesian Model

Let X be a vector parameter of interest with an associated prior ; i.e. X ( ).

We observe a realization of y of Y which is assumed to satisfy Y j (X = x ) g ( j x) ;

i.e. the likelihood function is g ( y j x ). Bayesian inference on X relies on the posterior of X given Y = y : p (xj y) =

Z

(x ) g ( y j x ) p (y )

where the marginal likelihood/evidence satises p (y ) = (x ) g ( y j x ) dx.

Machine learning examples: Latent Dirichlet Allocation, (Hiearchical) Dirichlet processes...

A. Doucet (MLSS Sept. 2012) Sept. 2012 2 / 136

5

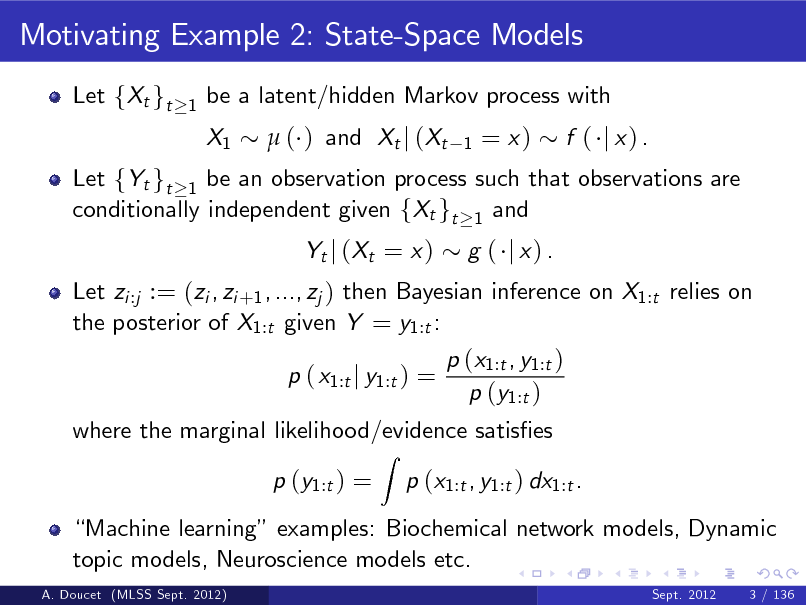

Motivating Example 2: State-Space Models

Let fXt gt

1

be a latent/hidden Markov process with X1 ( ) and Xt j (Xt

1

= x)

f ( j x) .

A. Doucet (MLSS Sept. 2012)

Sept. 2012

3 / 136

6

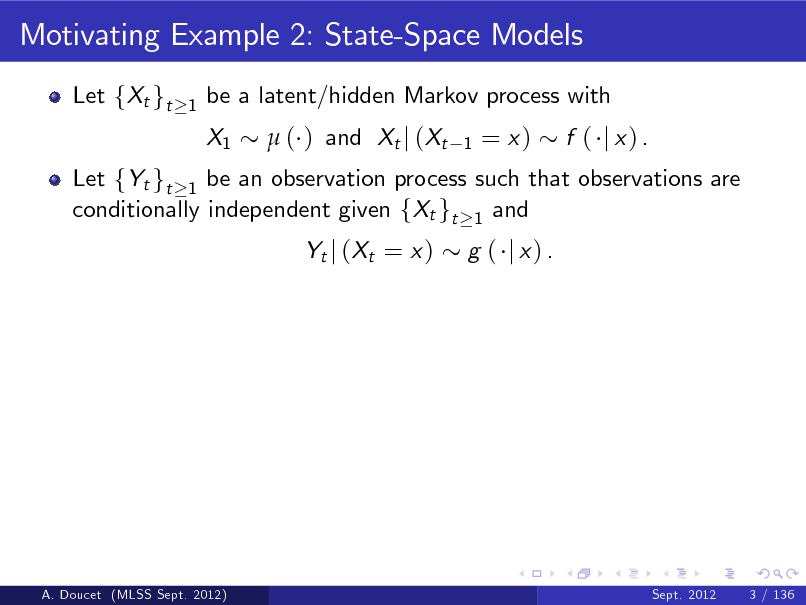

Motivating Example 2: State-Space Models

Let fXt gt

1

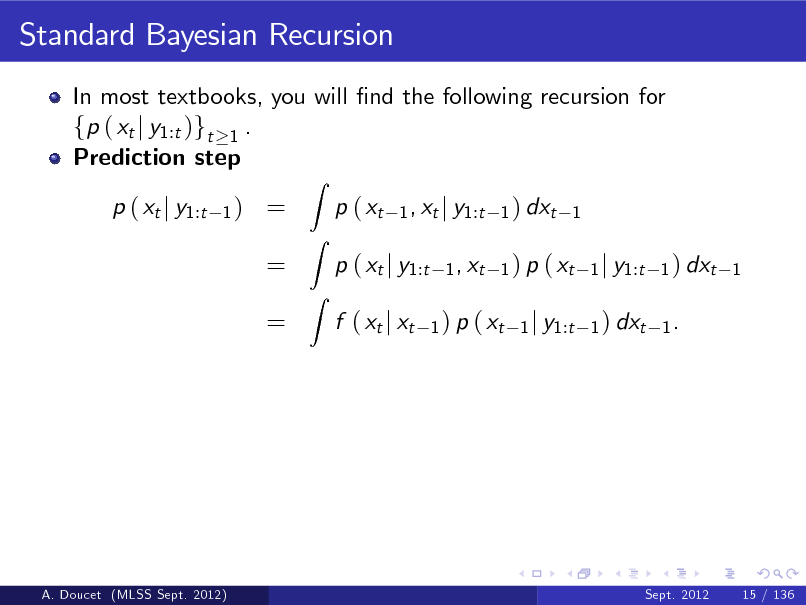

be a latent/hidden Markov process with X1 ( ) and Xt j (Xt

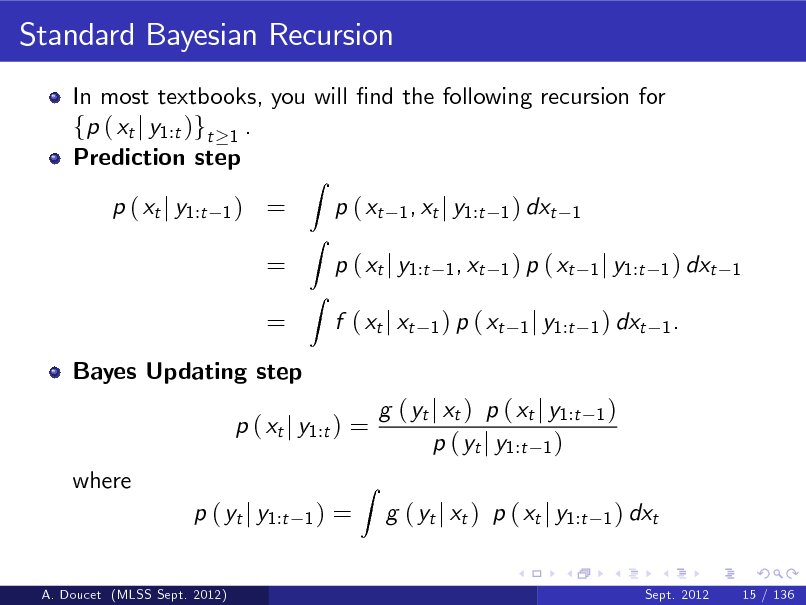

1

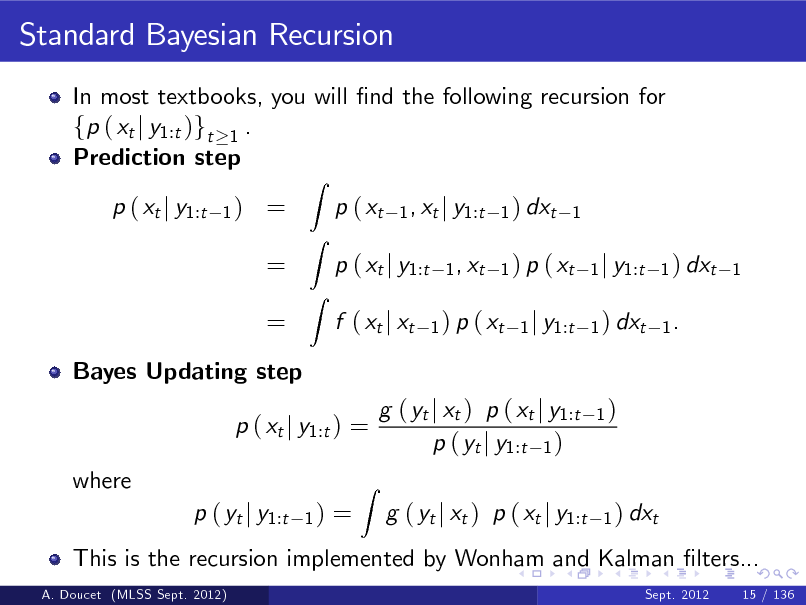

= x)

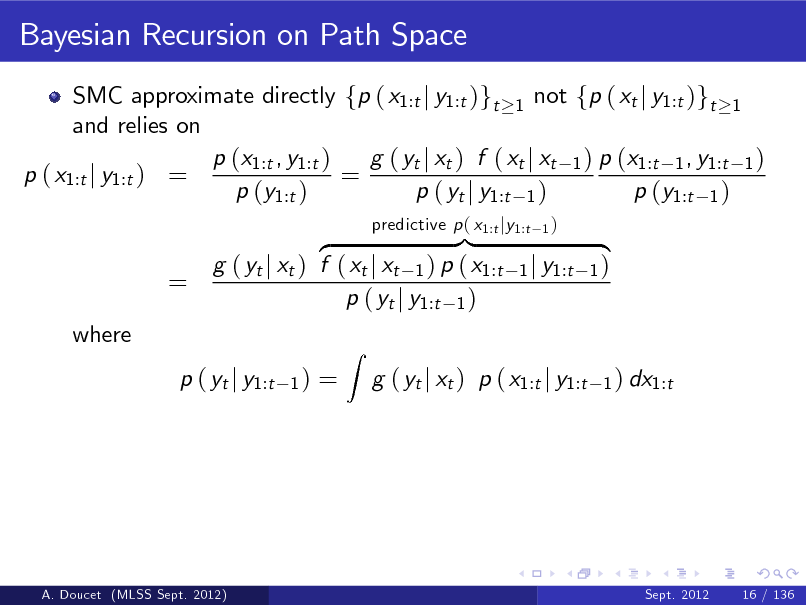

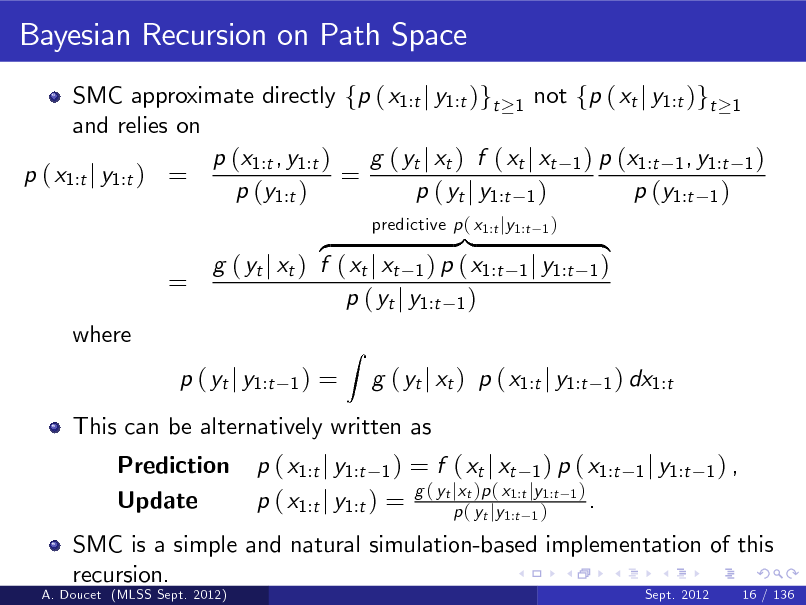

Let fYt gt 1 be an observation process such that observations are conditionally independent given fXt gt 1 and Yt j ( Xt = x ) g ( j x) .

f ( j x) .

A. Doucet (MLSS Sept. 2012)

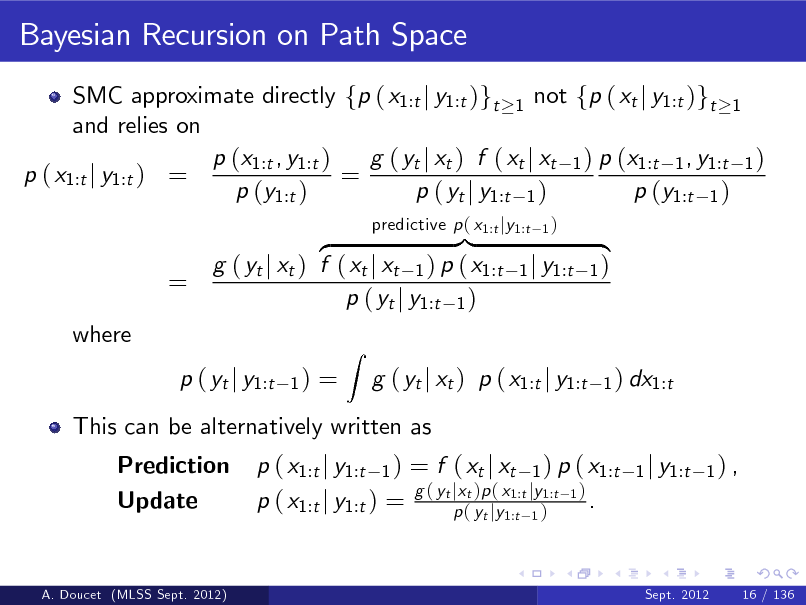

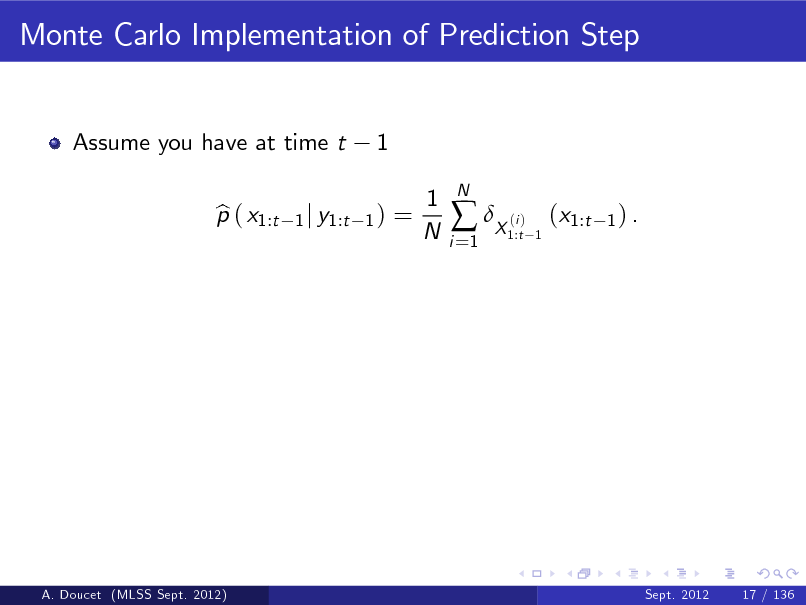

Sept. 2012

3 / 136

7

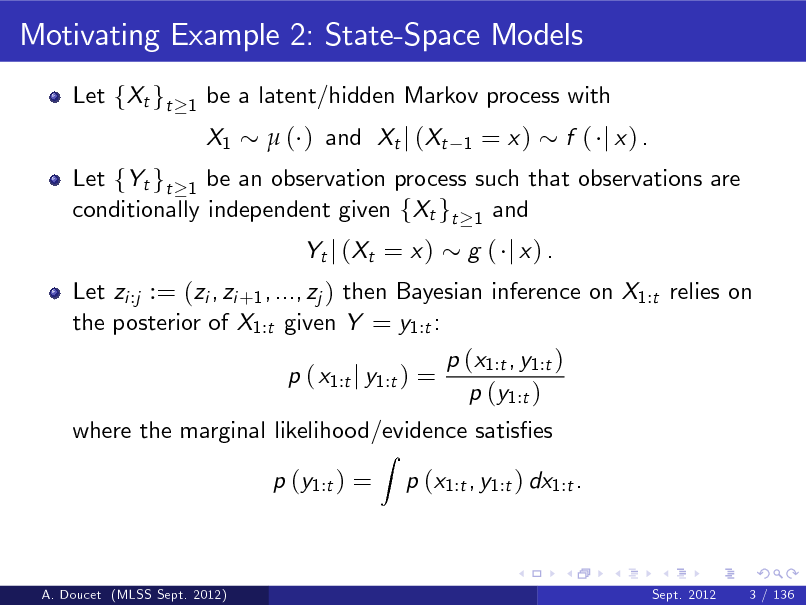

Motivating Example 2: State-Space Models

Let fXt gt

1

be a latent/hidden Markov process with X1 ( ) and Xt j (Xt

1

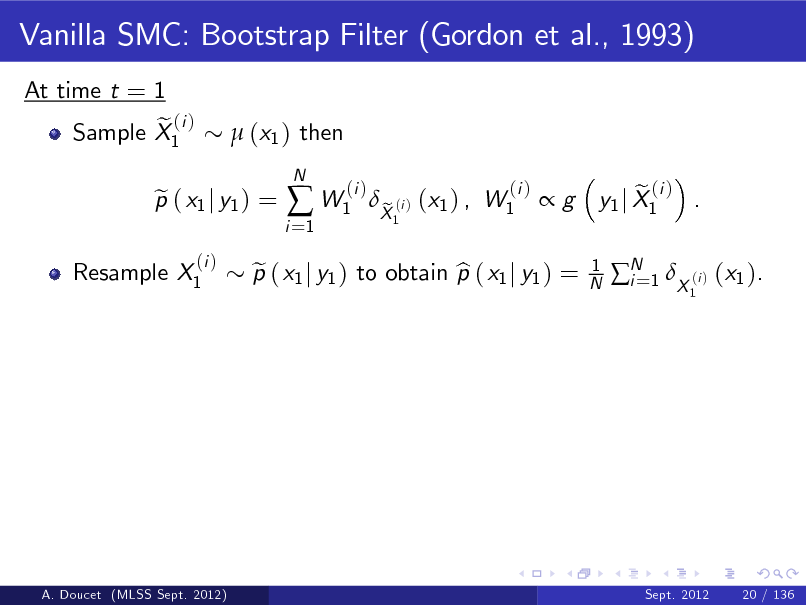

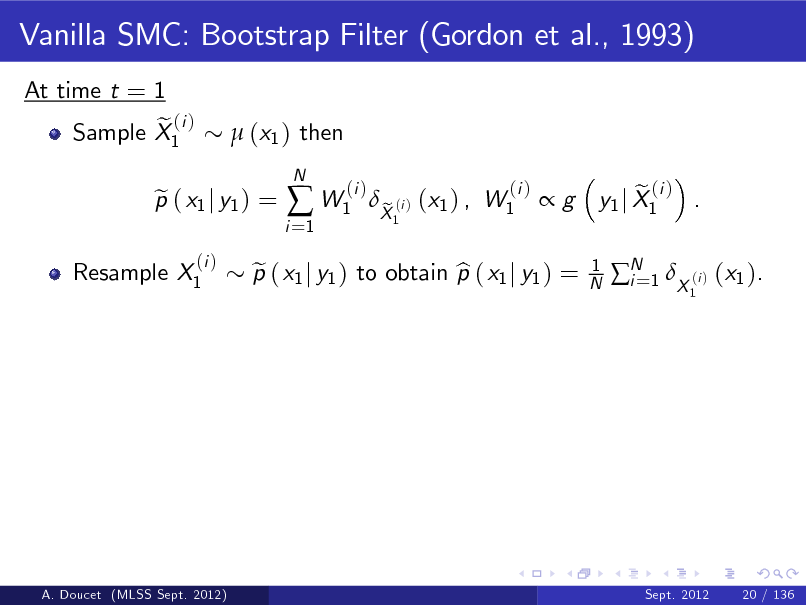

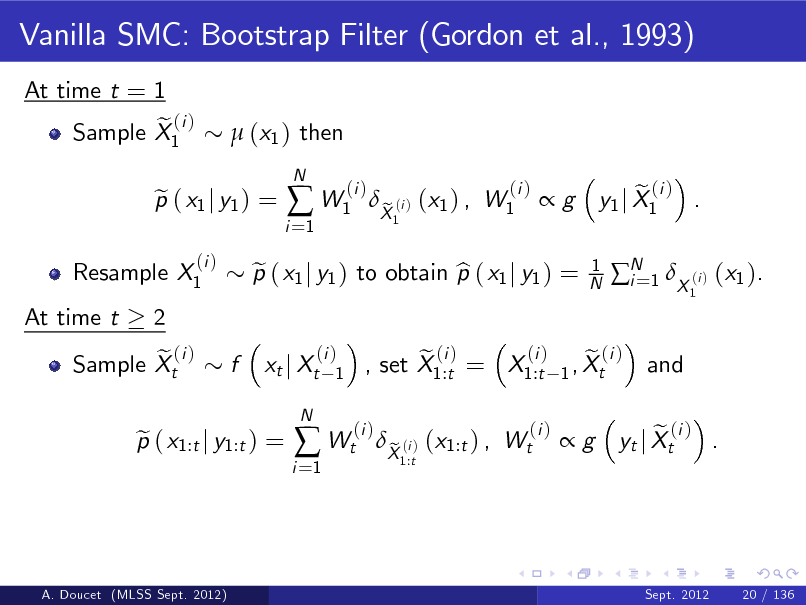

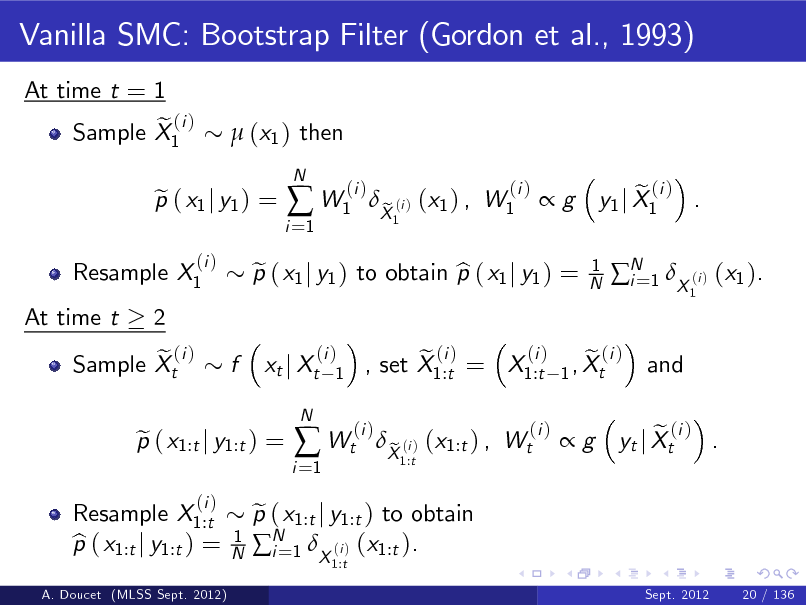

= x)

Let fYt gt 1 be an observation process such that observations are conditionally independent given fXt gt 1 and Let zi :j := (zi , zi +1 , ..., zj ) then Bayesian inference on X1:t relies on the posterior of X1:t given Y = y1:t : p ( x1:t j y1:t ) =

Z

f ( j x) .

Yt j ( Xt = x )

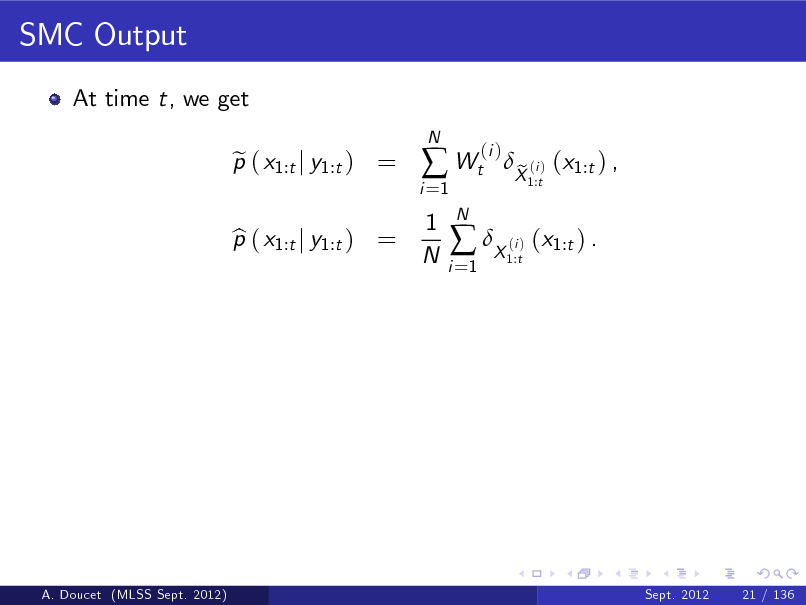

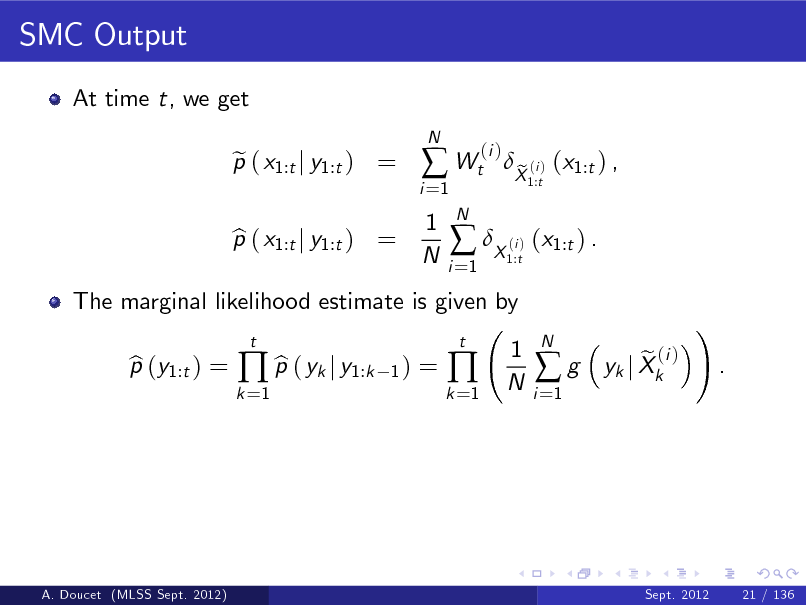

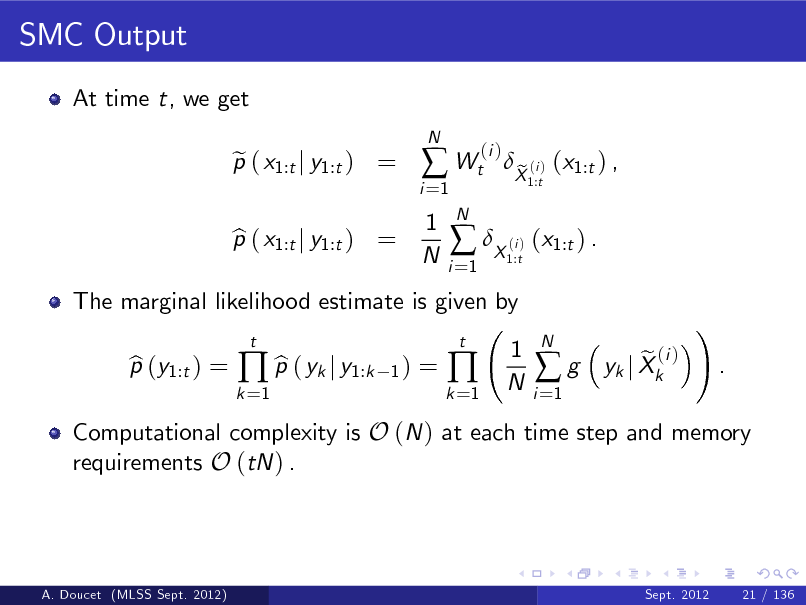

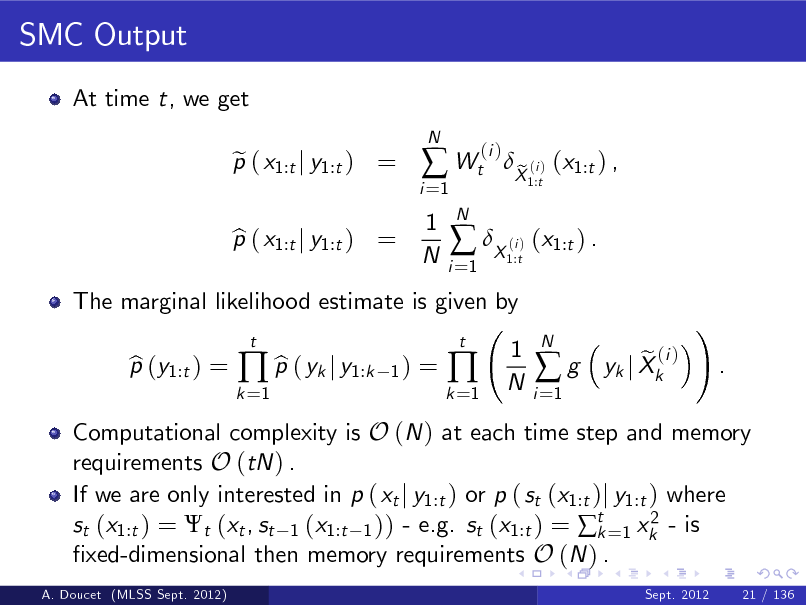

g ( j x) .

p (x1:t , y1:t ) p (y1:t )

where the marginal likelihood/evidence satises p (y1:t ) = p (x1:t , y1:t ) dx1:t .

A. Doucet (MLSS Sept. 2012)

Sept. 2012

3 / 136

8

Motivating Example 2: State-Space Models

Let fXt gt

1

be a latent/hidden Markov process with X1 ( ) and Xt j (Xt

1

= x)

Let fYt gt 1 be an observation process such that observations are conditionally independent given fXt gt 1 and Let zi :j := (zi , zi +1 , ..., zj ) then Bayesian inference on X1:t relies on the posterior of X1:t given Y = y1:t : p ( x1:t j y1:t ) =

Z

f ( j x) .

Yt j ( Xt = x )

g ( j x) .

p (x1:t , y1:t ) p (y1:t )

where the marginal likelihood/evidence satises p (y1:t ) = p (x1:t , y1:t ) dx1:t .

Machine learning examples: Biochemical network models, Dynamic topic models, Neuroscience models etc.

A. Doucet (MLSS Sept. 2012) Sept. 2012 3 / 136

9

Bayesian Inference and Machine Learning

Bayesian approaches have been adopted by a large part of the ML community.

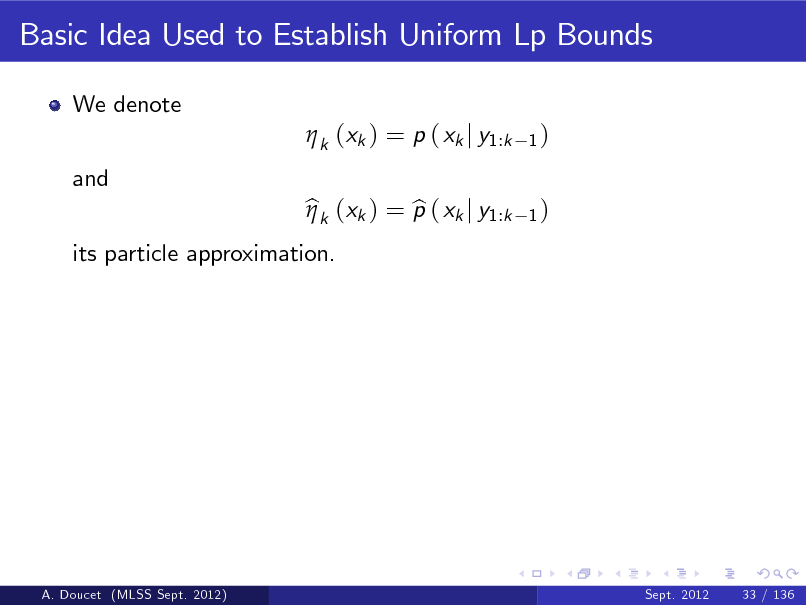

A. Doucet (MLSS Sept. 2012)

Sept. 2012

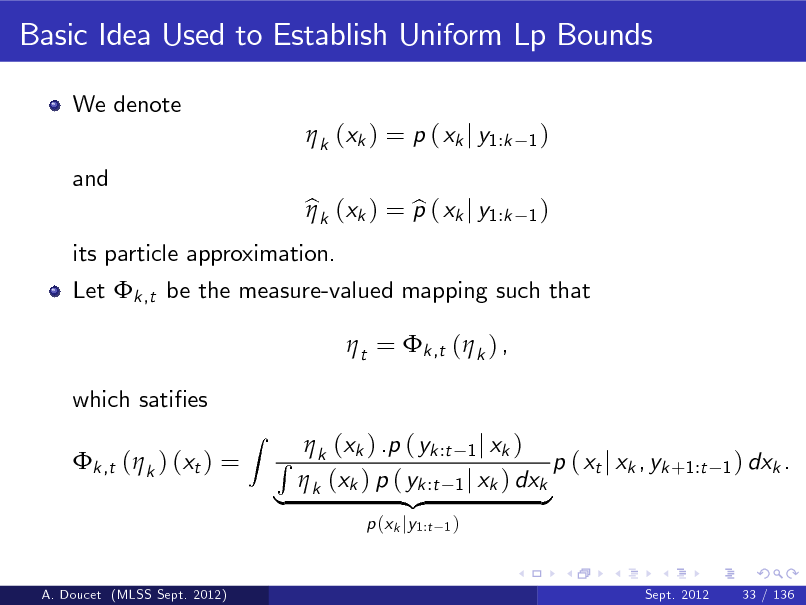

4 / 136

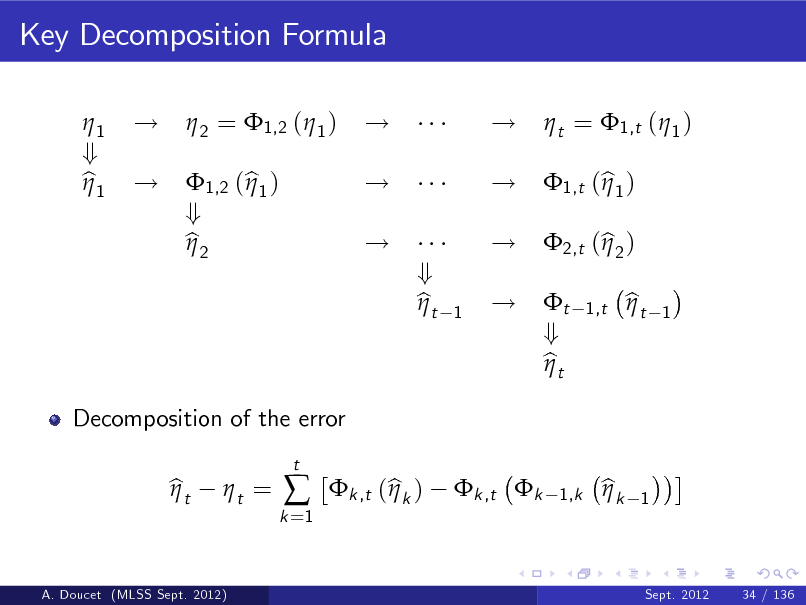

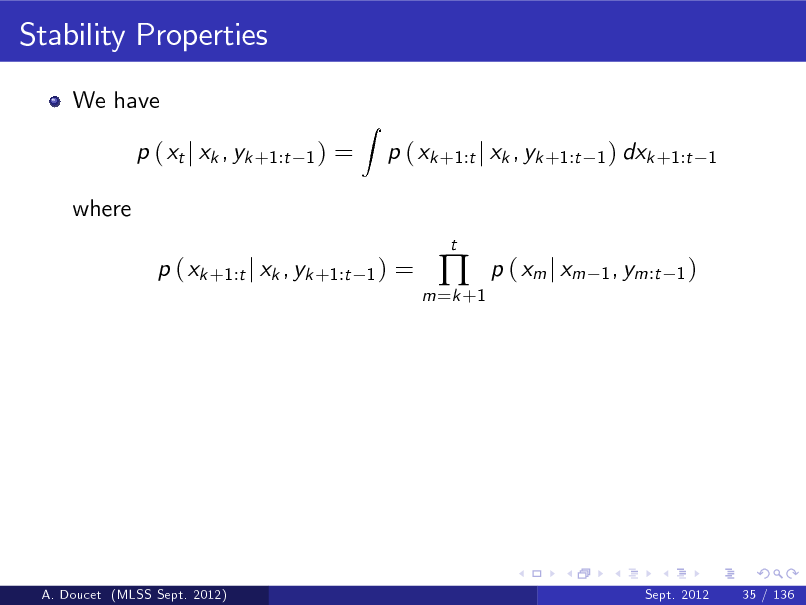

10

Bayesian Inference and Machine Learning

Bayesian approaches have been adopted by a large part of the ML community. Bayesian inference oers a number of attractive advantages over conventional approach

A. Doucet (MLSS Sept. 2012)

Sept. 2012

4 / 136

11

Bayesian Inference and Machine Learning

Bayesian approaches have been adopted by a large part of the ML community. Bayesian inference oers a number of attractive advantages over conventional approach

exibility in constructing complex models from simple parts;

A. Doucet (MLSS Sept. 2012)

Sept. 2012

4 / 136

12

Bayesian Inference and Machine Learning

Bayesian approaches have been adopted by a large part of the ML community. Bayesian inference oers a number of attractive advantages over conventional approach

exibility in constructing complex models from simple parts; the incorporation of prior knowledge is very natural;

A. Doucet (MLSS Sept. 2012)

Sept. 2012

4 / 136

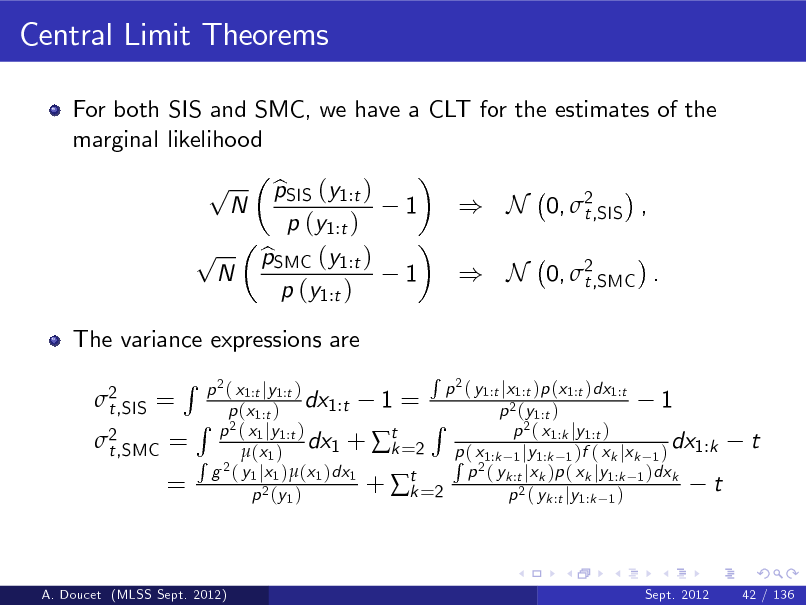

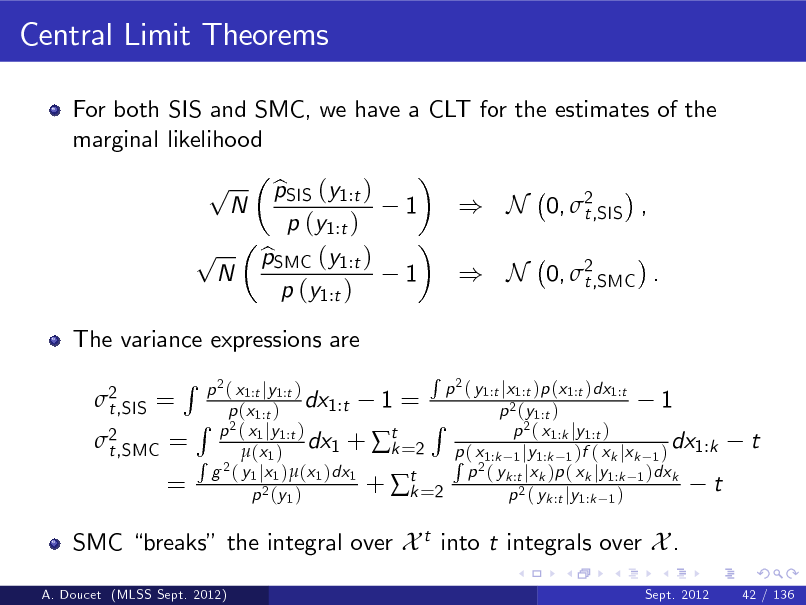

13

Bayesian Inference and Machine Learning

Bayesian approaches have been adopted by a large part of the ML community. Bayesian inference oers a number of attractive advantages over conventional approach

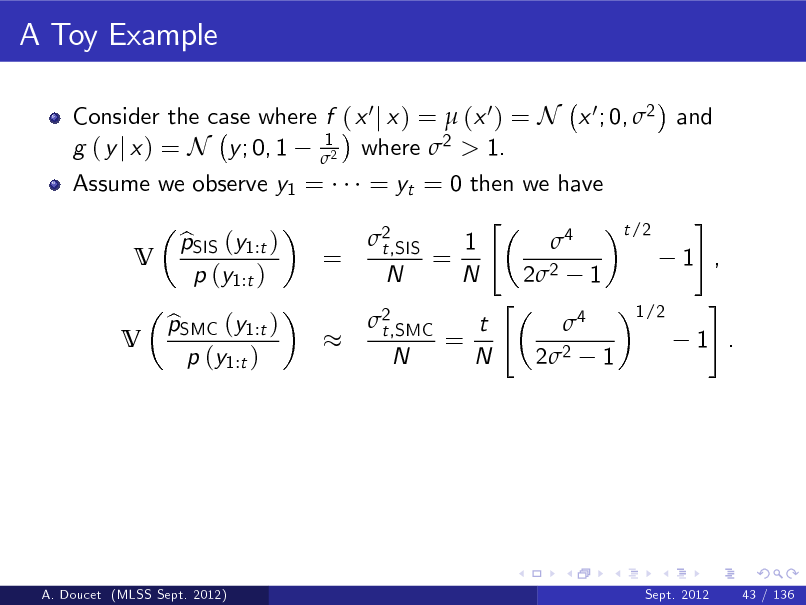

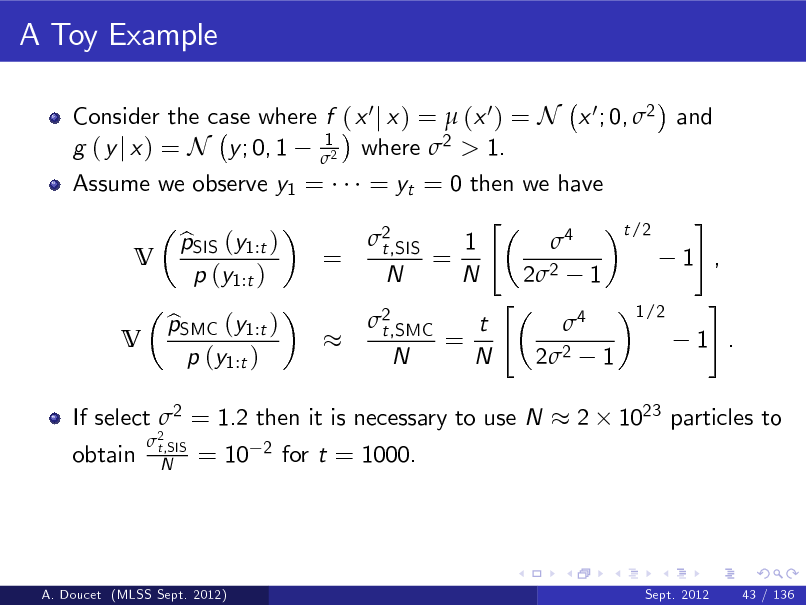

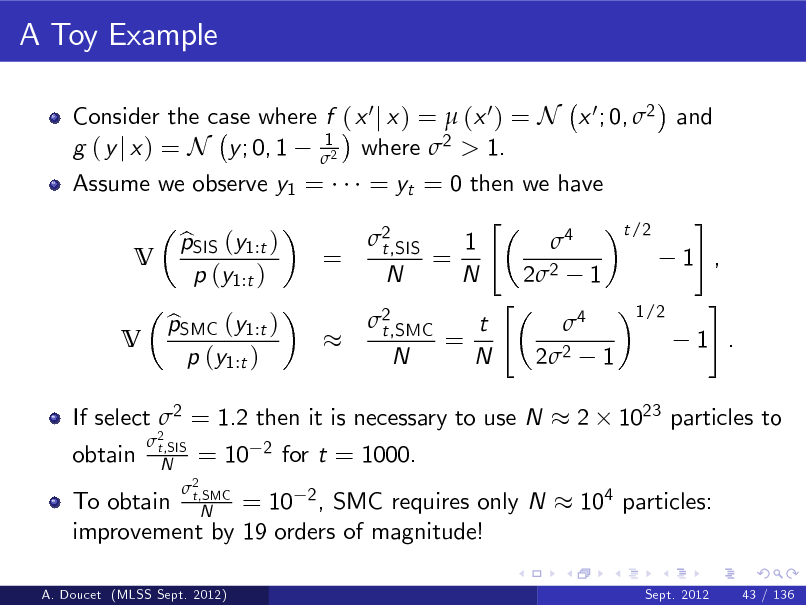

exibility in constructing complex models from simple parts; the incorporation of prior knowledge is very natural; all modelling assumptions are made explicit;

A. Doucet (MLSS Sept. 2012)

Sept. 2012

4 / 136

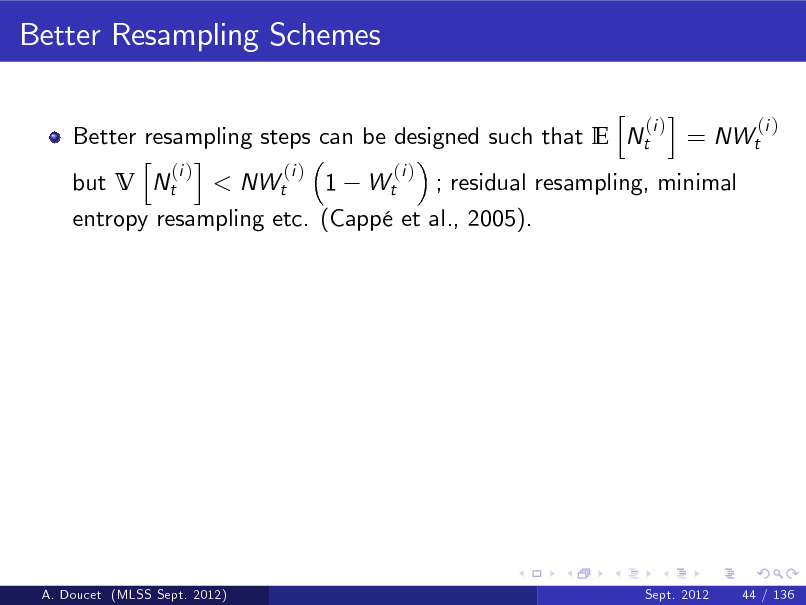

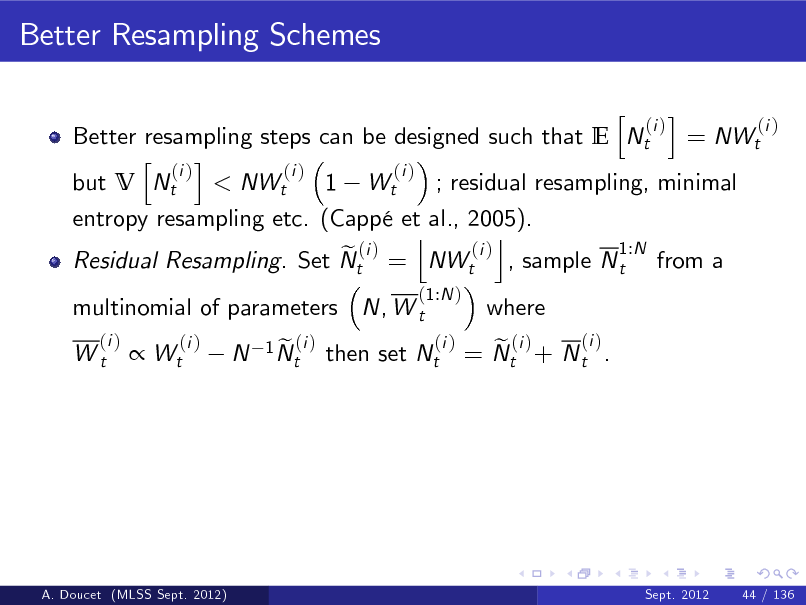

14

Bayesian Inference and Machine Learning

Bayesian approaches have been adopted by a large part of the ML community. Bayesian inference oers a number of attractive advantages over conventional approach

exibility in constructing complex models from simple parts; the incorporation of prior knowledge is very natural; all modelling assumptions are made explicit; uncertainties over model order;

A. Doucet (MLSS Sept. 2012)

Sept. 2012

4 / 136

15

Bayesian Inference and Machine Learning

Bayesian approaches have been adopted by a large part of the ML community. Bayesian inference oers a number of attractive advantages over conventional approach

exibility in constructing complex models from simple parts; the incorporation of prior knowledge is very natural; all modelling assumptions are made explicit; uncertainties over model order; model parameters and predictions are technically straightforward to compute;

A. Doucet (MLSS Sept. 2012)

Sept. 2012

4 / 136

16

Bayesian Inference and Machine Learning

Bayesian approaches have been adopted by a large part of the ML community. Bayesian inference oers a number of attractive advantages over conventional approach

exibility in constructing complex models from simple parts; the incorporation of prior knowledge is very natural; all modelling assumptions are made explicit; uncertainties over model order; model parameters and predictions are technically straightforward to compute;

The cost to pay is that approximate inference techniques are necessary to approximate the resulting posterior distributions for all but trivial models.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

4 / 136

17

Approximate Inference Methods

Gaussian/Laplace approximation, local linearization, Extended Kalman lters.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

5 / 136

18

Approximate Inference Methods

Gaussian/Laplace approximation, local linearization, Extended Kalman lters. Variational methods, density assumed lters.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

5 / 136

19

Approximate Inference Methods

Gaussian/Laplace approximation, local linearization, Extended Kalman lters. Variational methods, density assumed lters. Expectation-Propagation.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

5 / 136

20

Approximate Inference Methods

Gaussian/Laplace approximation, local linearization, Extended Kalman lters. Variational methods, density assumed lters. Expectation-Propagation. Markov chain Monte Carlo (MCMC) methods.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

5 / 136

21

Approximate Inference Methods

Gaussian/Laplace approximation, local linearization, Extended Kalman lters. Variational methods, density assumed lters. Expectation-Propagation. Markov chain Monte Carlo (MCMC) methods. Sequential Monte Carlo (SMC) methods.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

5 / 136

22

Monte Carlo Methods

Variational and EP methods are computationally cheap but perform functional approximations of the posteriors of interest.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

6 / 136

23

Monte Carlo Methods

Variational and EP methods are computationally cheap but perform functional approximations of the posteriors of interest. Both MCMC and SMC are asymptotically (as you increase computational eorts) bias-free but computationally expensive.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

6 / 136

24

Monte Carlo Methods

Variational and EP methods are computationally cheap but perform functional approximations of the posteriors of interest. Both MCMC and SMC are asymptotically (as you increase computational eorts) bias-free but computationally expensive. MCMC are the tools of choice in Bayesian computation for over 20 years whereas SMC have been widely used for 15 years in vision and robotics.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

6 / 136

25

Monte Carlo Methods

Variational and EP methods are computationally cheap but perform functional approximations of the posteriors of interest. Both MCMC and SMC are asymptotically (as you increase computational eorts) bias-free but computationally expensive. MCMC are the tools of choice in Bayesian computation for over 20 years whereas SMC have been widely used for 15 years in vision and robotics. The development of new methodology combined to the emergence of cheap multicore architectures makes now SMC a powerful alternative/complementary approach to MCMC to address general Bayesian computational problems.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

6 / 136

26

Monte Carlo Methods

Variational and EP methods are computationally cheap but perform functional approximations of the posteriors of interest. Both MCMC and SMC are asymptotically (as you increase computational eorts) bias-free but computationally expensive. MCMC are the tools of choice in Bayesian computation for over 20 years whereas SMC have been widely used for 15 years in vision and robotics. The development of new methodology combined to the emergence of cheap multicore architectures makes now SMC a powerful alternative/complementary approach to MCMC to address general Bayesian computational problems. The aim of these lectures is to provide an introduction to this active research eld and discuss some open research problems.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

6 / 136

27

Some References and Resources

A.D., J.F.G. De Freitas & N.J. Gordon (editors), Sequential Monte Carlo Methods in Practice, Springer-Verlag: New York, 2001.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

7 / 136

28

Some References and Resources

A.D., J.F.G. De Freitas & N.J. Gordon (editors), Sequential Monte Carlo Methods in Practice, Springer-Verlag: New York, 2001. P. Del Moral, Feynman-Kac Formulae: Genealogical and Interacting Particle Systems with Applications, Springer-Verlag: New York, 2004.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

7 / 136

29

Some References and Resources

A.D., J.F.G. De Freitas & N.J. Gordon (editors), Sequential Monte Carlo Methods in Practice, Springer-Verlag: New York, 2001. P. Del Moral, Feynman-Kac Formulae: Genealogical and Interacting Particle Systems with Applications, Springer-Verlag: New York, 2004. O. Capp, E. Moulines & T. Ryden, Hidden Markov Models, Springer-Verlag: New York, 2005.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

7 / 136

30

Some References and Resources

A.D., J.F.G. De Freitas & N.J. Gordon (editors), Sequential Monte Carlo Methods in Practice, Springer-Verlag: New York, 2001. P. Del Moral, Feynman-Kac Formulae: Genealogical and Interacting Particle Systems with Applications, Springer-Verlag: New York, 2004. O. Capp, E. Moulines & T. Ryden, Hidden Markov Models, Springer-Verlag: New York, 2005. Webpage with links to papers and codes: http://www.stats.ox.ac.uk/~doucet/smc_resources.html

A. Doucet (MLSS Sept. 2012)

Sept. 2012

7 / 136

31

Some References and Resources

A.D., J.F.G. De Freitas & N.J. Gordon (editors), Sequential Monte Carlo Methods in Practice, Springer-Verlag: New York, 2001. P. Del Moral, Feynman-Kac Formulae: Genealogical and Interacting Particle Systems with Applications, Springer-Verlag: New York, 2004. O. Capp, E. Moulines & T. Ryden, Hidden Markov Models, Springer-Verlag: New York, 2005. Webpage with links to papers and codes: http://www.stats.ox.ac.uk/~doucet/smc_resources.html Thousands of papers on the subject appear every year.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

7 / 136

32

Organization of Lectures

State-Space Models (approx.4 hours)

A. Doucet (MLSS Sept. 2012)

Sept. 2012

8 / 136

33

Organization of Lectures

State-Space Models (approx.4 hours)

SMC ltering and smoothing

A. Doucet (MLSS Sept. 2012)

Sept. 2012

8 / 136

34

Organization of Lectures

State-Space Models (approx.4 hours)

SMC ltering and smoothing Maximum likelihood parameter inference

A. Doucet (MLSS Sept. 2012)

Sept. 2012

8 / 136

35

Organization of Lectures

State-Space Models (approx.4 hours)

SMC ltering and smoothing Maximum likelihood parameter inference Bayesian parameter inference

A. Doucet (MLSS Sept. 2012)

Sept. 2012

8 / 136

36

Organization of Lectures

State-Space Models (approx.4 hours)

SMC ltering and smoothing Maximum likelihood parameter inference Bayesian parameter inference

Beyond State-Space Models (approx. 2 hours)

A. Doucet (MLSS Sept. 2012)

Sept. 2012

8 / 136

37

Organization of Lectures

State-Space Models (approx.4 hours)

SMC ltering and smoothing Maximum likelihood parameter inference Bayesian parameter inference

Beyond State-Space Models (approx. 2 hours)

SMC methods for generic sequence of target distributions

A. Doucet (MLSS Sept. 2012)

Sept. 2012

8 / 136

38

Organization of Lectures

State-Space Models (approx.4 hours)

SMC ltering and smoothing Maximum likelihood parameter inference Bayesian parameter inference

Beyond State-Space Models (approx. 2 hours)

SMC methods for generic sequence of target distributions SMC samplers.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

8 / 136

39

Organization of Lectures

State-Space Models (approx.4 hours)

SMC ltering and smoothing Maximum likelihood parameter inference Bayesian parameter inference

Beyond State-Space Models (approx. 2 hours)

SMC methods for generic sequence of target distributions SMC samplers. Approximate Bayesian Computation.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

8 / 136

40

Organization of Lectures

State-Space Models (approx.4 hours)

SMC ltering and smoothing Maximum likelihood parameter inference Bayesian parameter inference

Beyond State-Space Models (approx. 2 hours)

SMC methods for generic sequence of target distributions SMC samplers. Approximate Bayesian Computation. Optimal design, optimal control.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

8 / 136

41

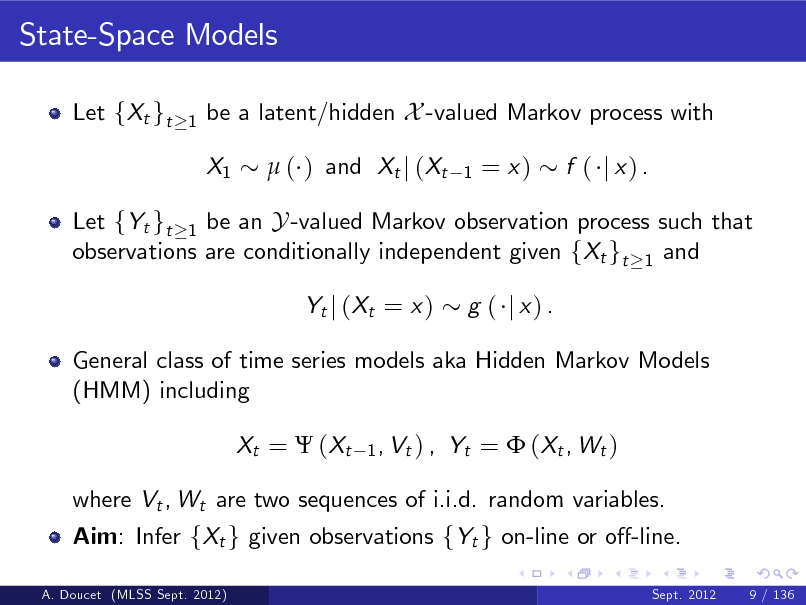

State-Space Models

Let fXt gt

1

be a latent/hidden X -valued Markov process with X1 ( ) and Xt j (Xt

1

= x)

f ( j x) .

A. Doucet (MLSS Sept. 2012)

Sept. 2012

9 / 136

42

State-Space Models

Let fXt gt

1

be a latent/hidden X -valued Markov process with X1 ( ) and Xt j (Xt

1

= x)

f ( j x) .

Let fYt gt 1 be an Y -valued Markov observation process such that observations are conditionally independent given fXt gt 1 and Yt j ( Xt = x ) g ( j x) .

A. Doucet (MLSS Sept. 2012)

Sept. 2012

9 / 136

43

State-Space Models

Let fXt gt

1

be a latent/hidden X -valued Markov process with X1 ( ) and Xt j (Xt

1

= x)

f ( j x) .

Let fYt gt 1 be an Y -valued Markov observation process such that observations are conditionally independent given fXt gt 1 and Yt j ( Xt = x ) g ( j x) .

General class of time series models aka Hidden Markov Models (HMM) including Xt = ( Xt

1 , Vt ) ,

Yt = ( Xt , W t )

where Vt , Wt are two sequences of i.i.d. random variables.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

9 / 136

44

State-Space Models

Let fXt gt

1

be a latent/hidden X -valued Markov process with X1 ( ) and Xt j (Xt

1

= x)

f ( j x) .

Let fYt gt 1 be an Y -valued Markov observation process such that observations are conditionally independent given fXt gt 1 and Yt j ( Xt = x ) g ( j x) .

General class of time series models aka Hidden Markov Models (HMM) including Xt = ( Xt

1 , Vt ) ,

Yt = ( Xt , W t )

where Vt , Wt are two sequences of i.i.d. random variables. Aim: Infer fXt g given observations fYt g on-line or o-line.

A. Doucet (MLSS Sept. 2012) Sept. 2012 9 / 136

45

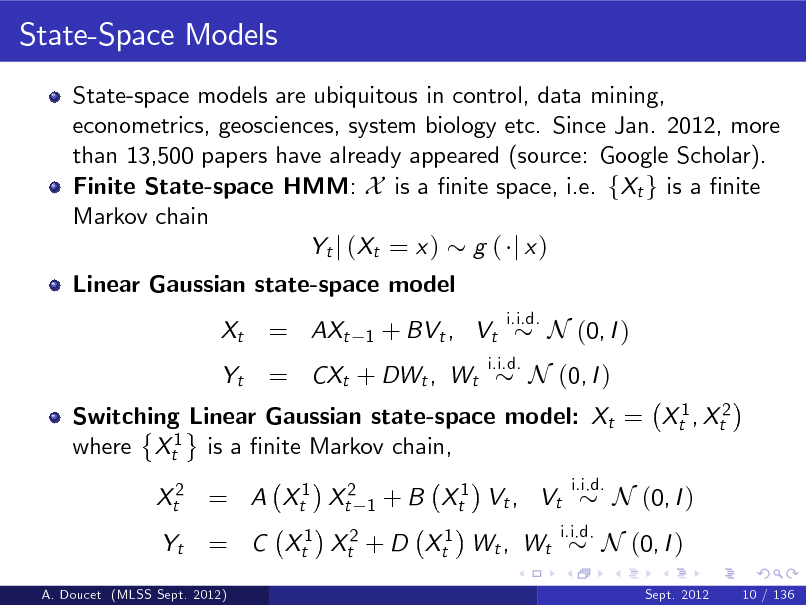

State-Space Models

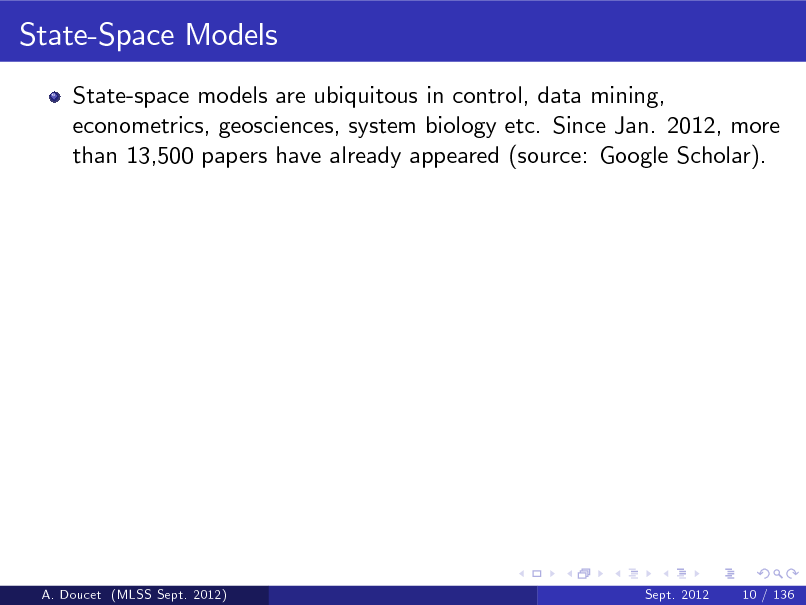

State-space models are ubiquitous in control, data mining, econometrics, geosciences, system biology etc. Since Jan. 2012, more than 13,500 papers have already appeared (source: Google Scholar).

A. Doucet (MLSS Sept. 2012)

Sept. 2012

10 / 136

46

State-Space Models

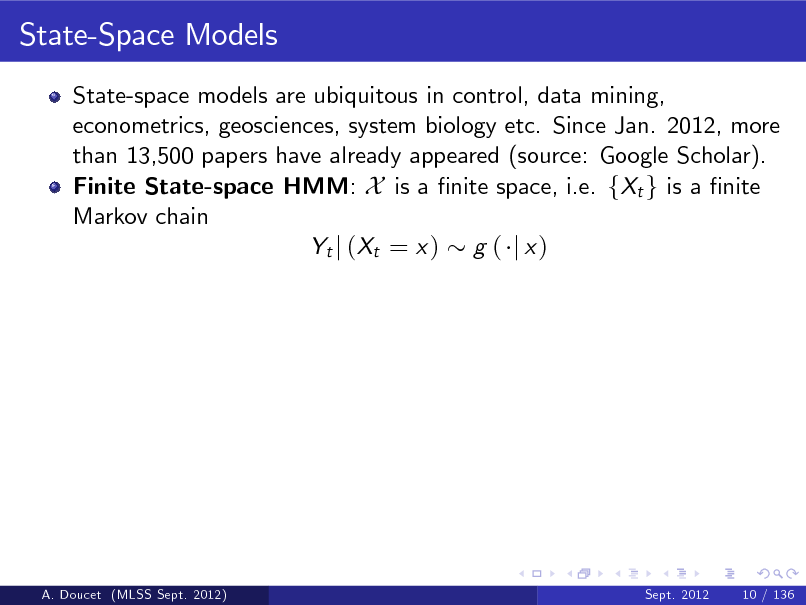

State-space models are ubiquitous in control, data mining, econometrics, geosciences, system biology etc. Since Jan. 2012, more than 13,500 papers have already appeared (source: Google Scholar). Finite State-space HMM: X is a nite space, i.e. fXt g is a nite Markov chain Yt j ( Xt = x ) g ( j x )

A. Doucet (MLSS Sept. 2012)

Sept. 2012

10 / 136

47

State-Space Models

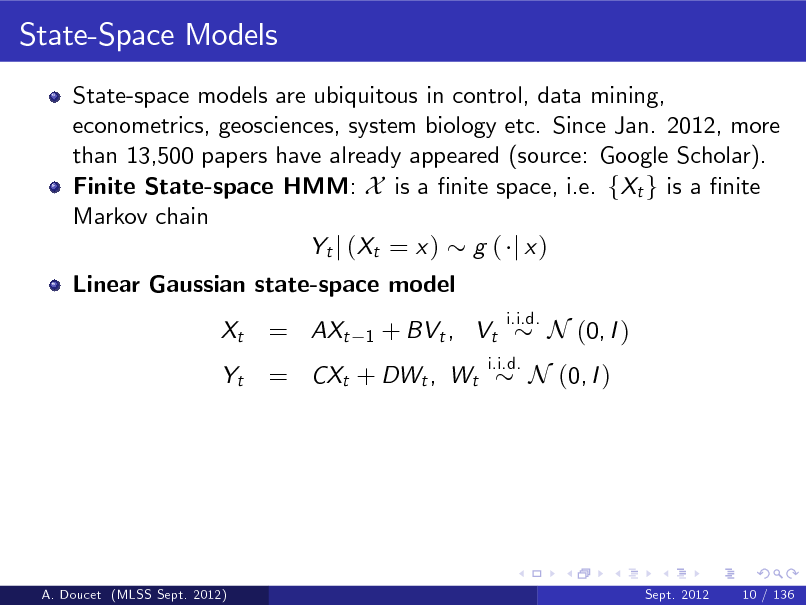

State-space models are ubiquitous in control, data mining, econometrics, geosciences, system biology etc. Since Jan. 2012, more than 13,500 papers have already appeared (source: Google Scholar). Finite State-space HMM: X is a nite space, i.e. fXt g is a nite Markov chain Yt j ( Xt = x ) g ( j x ) Linear Gaussian state-space model Xt Yt

= AXt

1

+ BVt , Vt

i.i.d.

= CXt + DWt , Wt

i.i.d.

N (0, I )

N (0, I )

A. Doucet (MLSS Sept. 2012)

Sept. 2012

10 / 136

48

State-Space Models

State-space models are ubiquitous in control, data mining, econometrics, geosciences, system biology etc. Since Jan. 2012, more than 13,500 papers have already appeared (source: Google Scholar). Finite State-space HMM: X is a nite space, i.e. fXt g is a nite Markov chain Yt j ( Xt = x ) g ( j x ) Linear Gaussian state-space model Xt Yt

= AXt

1

+ BVt , Vt

i.i.d.

= CXt + DWt , Wt

i.i.d.

N (0, I )

Switching Linear Gaussian state-space model: Xt = Xt1 , Xt2 where Xt1 is a nite Markov chain, Xt2 = A Xt1 Xt2 Yt

1

N (0, I )

+ B Xt1 Vt , Vt

i.i.d.

= C Xt1 Xt2 + D Xt1 Wt , Wt

i.i.d.

N (0, I )

Sept. 2012 10 / 136

N (0, I )

A. Doucet (MLSS Sept. 2012)

49

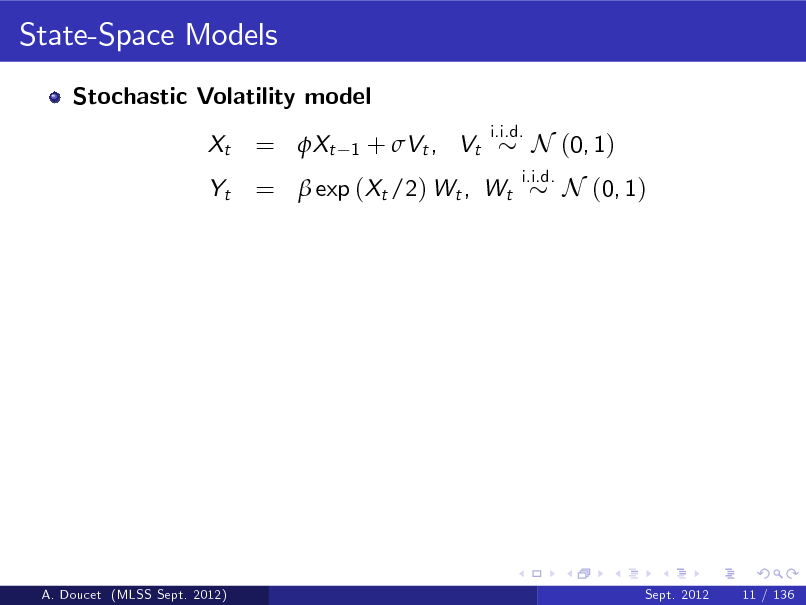

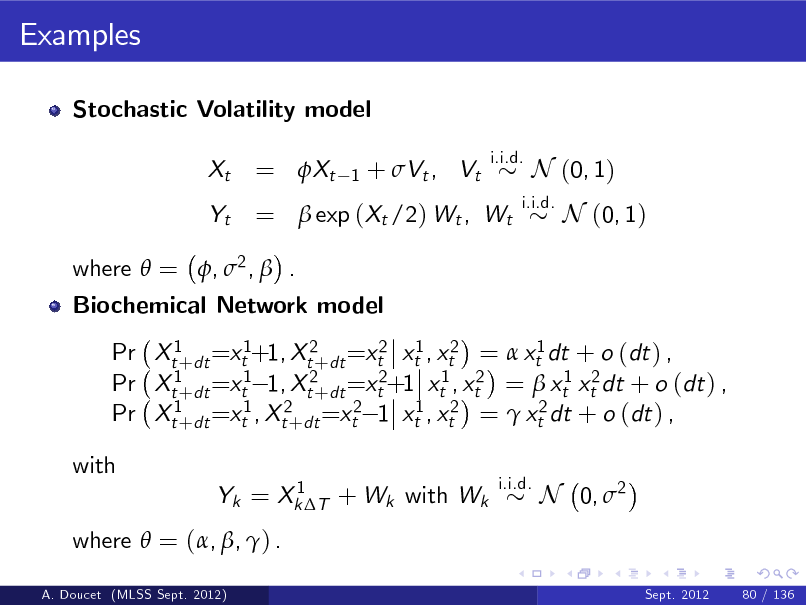

State-Space Models

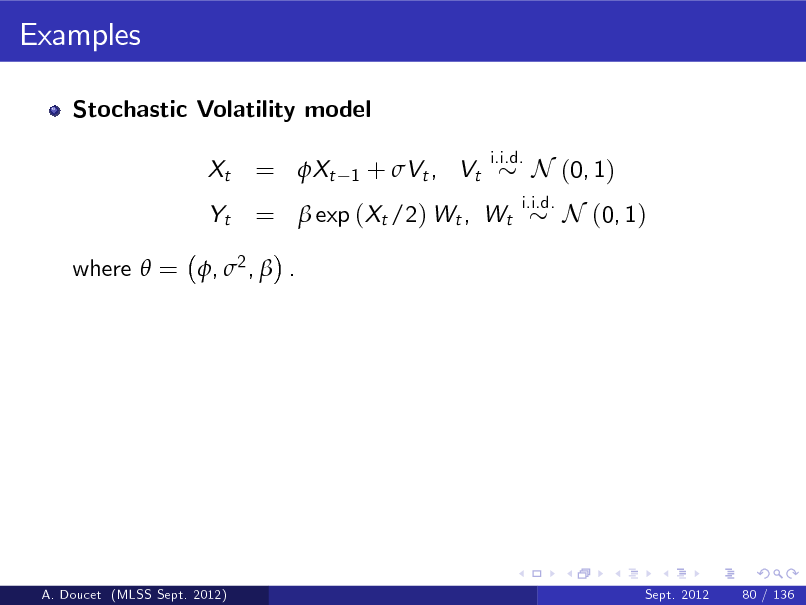

Stochastic Volatility model Xt Yt

i.i.d.

= Xt

1

+ Vt , Vt

= exp (Xt /2) Wt , Wt

i.i.d.

N (0, 1) N (0, 1)

A. Doucet (MLSS Sept. 2012)

Sept. 2012

11 / 136

50

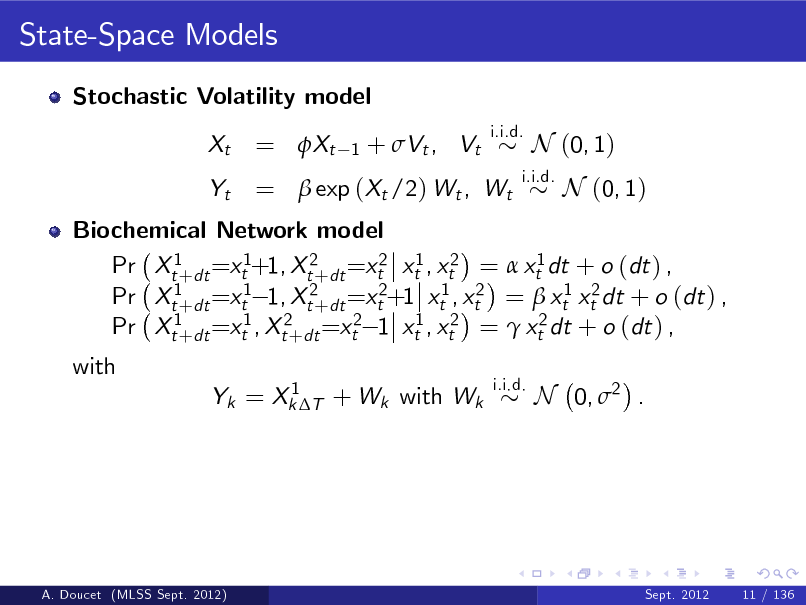

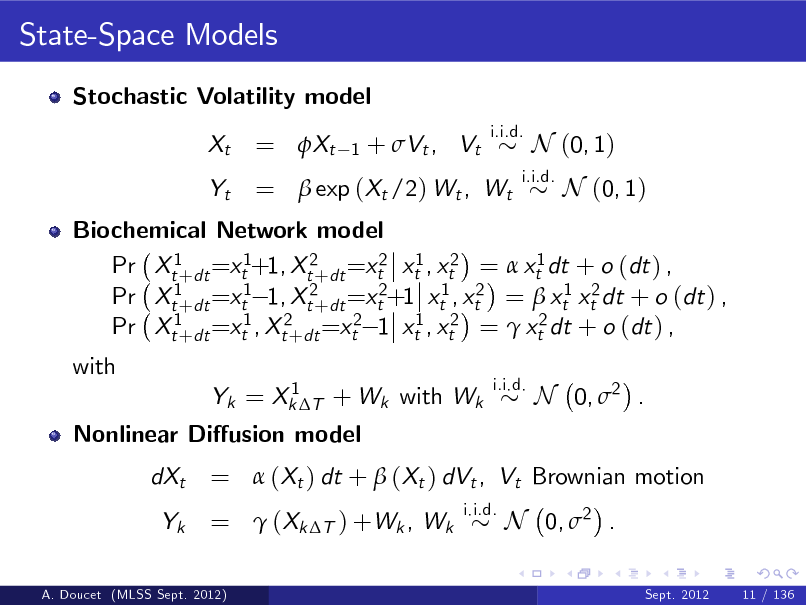

State-Space Models

Stochastic Volatility model Xt Yt

i.i.d.

= Xt

1

+ Vt , Vt

= exp (Xt /2) Wt , Wt

i.i.d.

N (0, 1) N (0, 1)

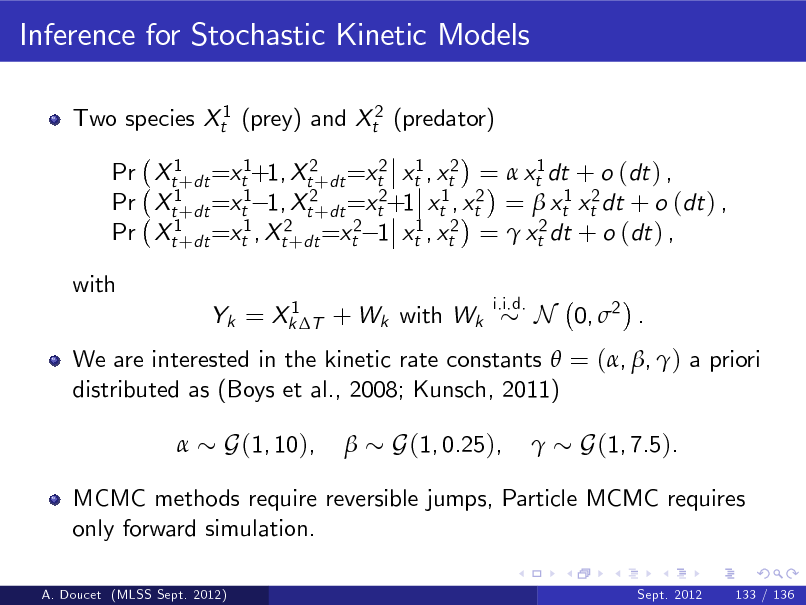

Biochemical Network model Pr Xt1+dt =xt1+1, Xt2+dt =xt2 xt1 , xt2 = xt1 dt + o (dt ) , Pr Xt1+dt =xt1 1, Xt2+dt =xt2+1 xt1 , xt2 = xt1 xt2 dt + o (dt ) , Pr Xt1+dt =xt1 , Xt2+dt =xt2 1 xt1 , xt2 = xt2 dt + o (dt ) , with

1 Yk = Xk T + Wk with Wk i.i.d.

N 0, 2 .

A. Doucet (MLSS Sept. 2012)

Sept. 2012

11 / 136

51

State-Space Models

Stochastic Volatility model Xt Yt

i.i.d.

= Xt

1

+ Vt , Vt

= exp (Xt /2) Wt , Wt

i.i.d.

N (0, 1) N (0, 1)

Biochemical Network model Pr Xt1+dt =xt1+1, Xt2+dt =xt2 xt1 , xt2 = xt1 dt + o (dt ) , Pr Xt1+dt =xt1 1, Xt2+dt =xt2+1 xt1 , xt2 = xt1 xt2 dt + o (dt ) , Pr Xt1+dt =xt1 , Xt2+dt =xt2 1 xt1 , xt2 = xt2 dt + o (dt ) , with

1 Yk = Xk T + Wk with Wk i.i.d.

Nonlinear Diusion model dXt Yk

i.i.d.

N 0, 2 .

= (Xt ) dt + (Xt ) dVt , Vt Brownian motion = (Xk T ) +Wk , Wk N 0, 2 .

Sept. 2012 11 / 136

A. Doucet (MLSS Sept. 2012)

52

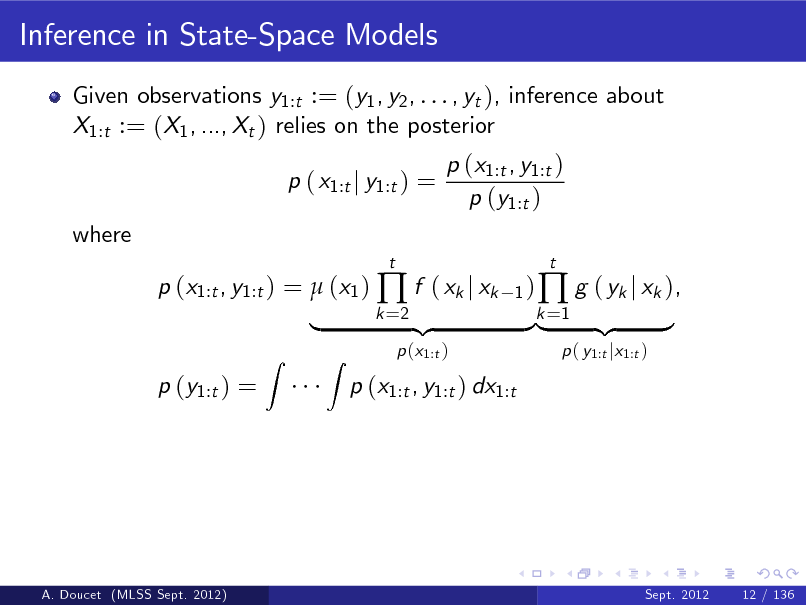

Inference in State-Space Models

Given observations y1:t := (y1 , y2 , . . . , yt ), inference about X1:t := (X1 , ..., Xt ) relies on the posterior p ( x1:t j y1:t ) = where p (x1:t , y1:t ) = (x1 ) f ( xk j xk p (y1:t ) =

Z

t 1) k =2

p (x1:t , y1:t ) p (y1:t )

|

Z

p (x1:t )

p (x1:t , y1:t ) dx1:t

{z

}k =1 {z |

g ( yk j xk ),

p ( y1:t jx1:t )

t

}

A. Doucet (MLSS Sept. 2012)

Sept. 2012

12 / 136

53

Inference in State-Space Models

Given observations y1:t := (y1 , y2 , . . . , yt ), inference about X1:t := (X1 , ..., Xt ) relies on the posterior p ( x1:t j y1:t ) = where p (x1:t , y1:t ) = (x1 ) f ( xk j xk p (y1:t ) =

Z

t 1) k =2

p (x1:t , y1:t ) p (y1:t )

|

When X is nite & linear Gaussian models, fp ( xt j y1:t )gt 1 can be computed exactly. For non-linear models, approximations are required: EKF, UKF, Gaussian sum lters, etc.

Z

p (x1:t )

p (x1:t , y1:t ) dx1:t

{z

}k =1 {z |

g ( yk j xk ),

p ( y1:t jx1:t )

t

}

A. Doucet (MLSS Sept. 2012)

Sept. 2012

12 / 136

54

Inference in State-Space Models

Given observations y1:t := (y1 , y2 , . . . , yt ), inference about X1:t := (X1 , ..., Xt ) relies on the posterior p ( x1:t j y1:t ) = where p (x1:t , y1:t ) = (x1 ) f ( xk j xk p (y1:t ) =

Z

t 1) k =2

p (x1:t , y1:t ) p (y1:t )

|

A. Doucet (MLSS Sept. 2012)

When X is nite & linear Gaussian models, fp ( xt j y1:t )gt 1 can be computed exactly. For non-linear models, approximations are required: EKF, UKF, Gaussian sum lters, etc. Approximations of fp ( xt j y1:t )gT=1 provide approximation of t p ( x1:T j y1:T ) .

Sept. 2012

Z

p (x1:t )

p (x1:t , y1:t ) dx1:t

{z

}k =1 {z |

g ( yk j xk ),

p ( y1:t jx1:t )

t

}

12 / 136

55

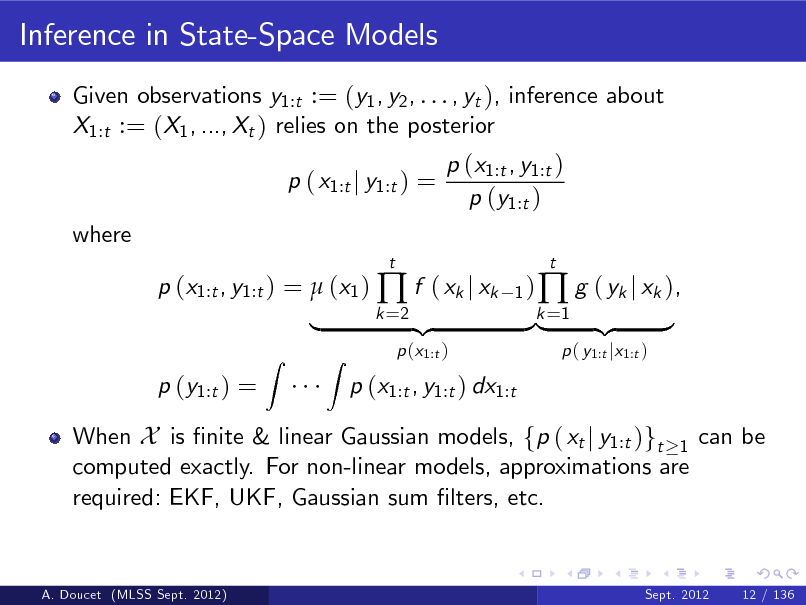

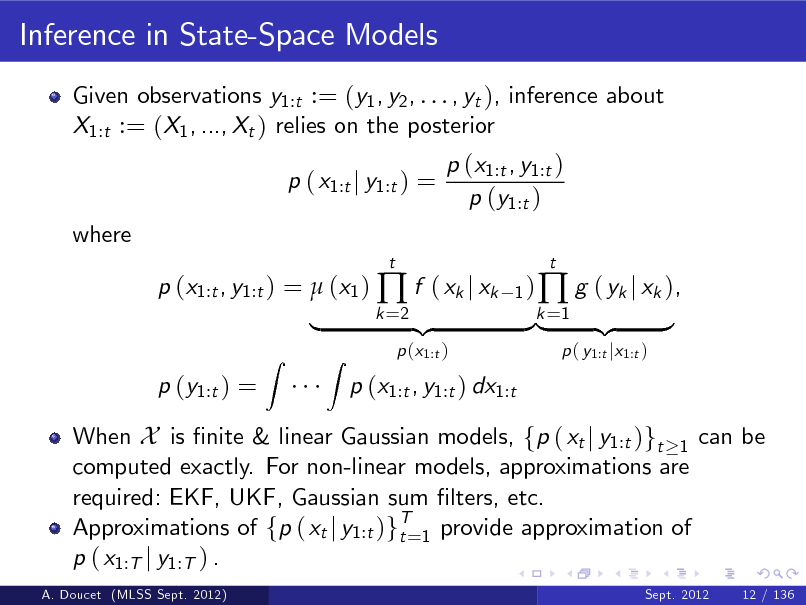

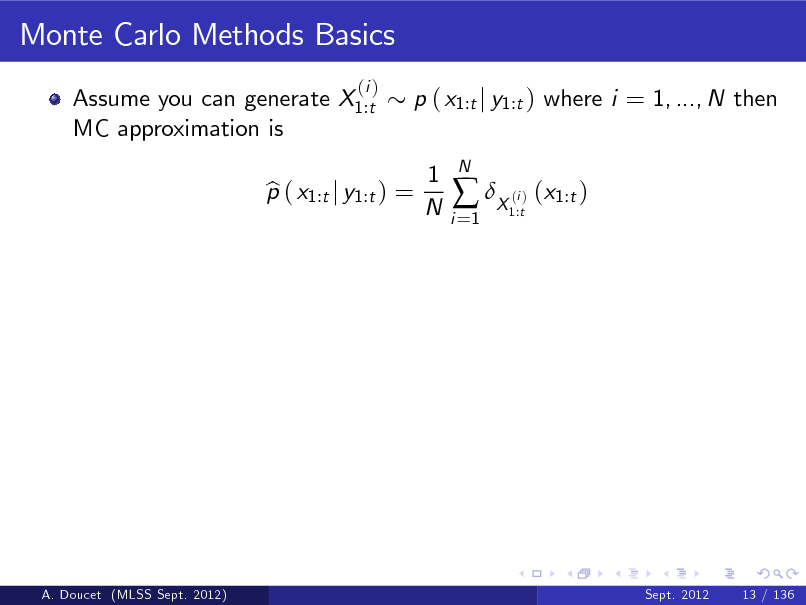

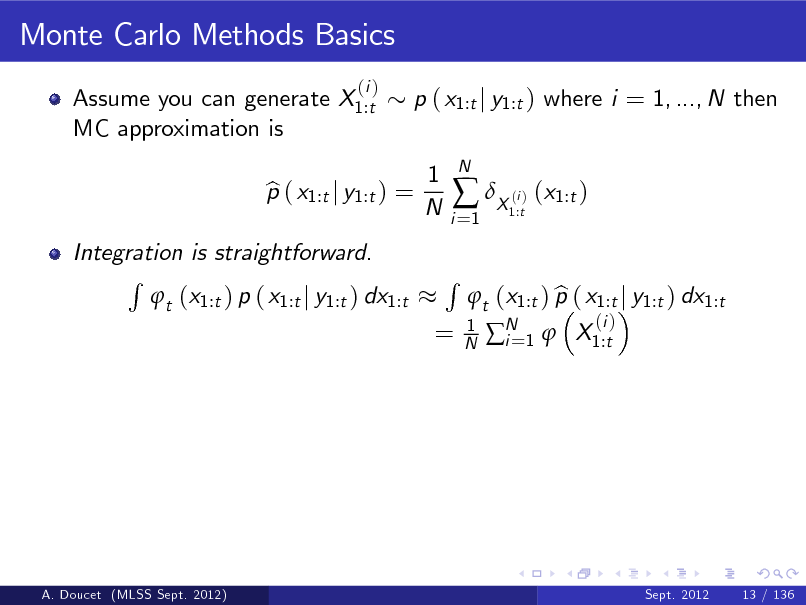

Monte Carlo Methods Basics

Assume you can generate X1:t MC approximation is

(i )

p ( x1:t j y1:t ) where i = 1, ..., N then 1 N

p ( x1:t j y1:t ) = b

i =1

X ( ) (x1:t )

i 1:t

N

A. Doucet (MLSS Sept. 2012)

Sept. 2012

13 / 136

56

Monte Carlo Methods Basics

Assume you can generate X1:t MC approximation is

(i )

p ( x1:t j y1:t ) where i = 1, ..., N then 1 N

Integration is straightforward. R t (x1:t ) p ( x1:t j y1:t ) dx1:t

p ( x1:t j y1:t ) = b

i =1

X ( ) (x1:t )

i 1:t

N

=

R

1 N

b t (x1:t ) p ( x1:t j y1:t ) dx1:t N 1 X1:t i=

(i )

A. Doucet (MLSS Sept. 2012)

Sept. 2012

13 / 136

57

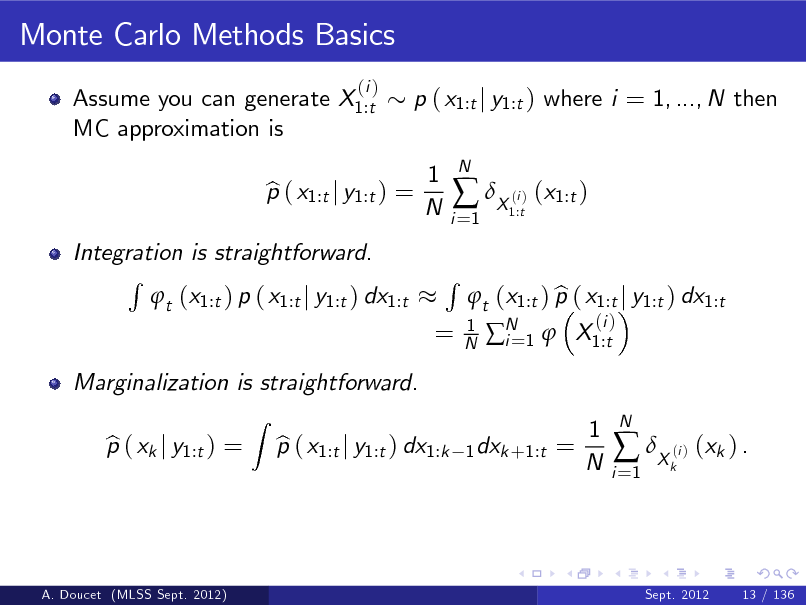

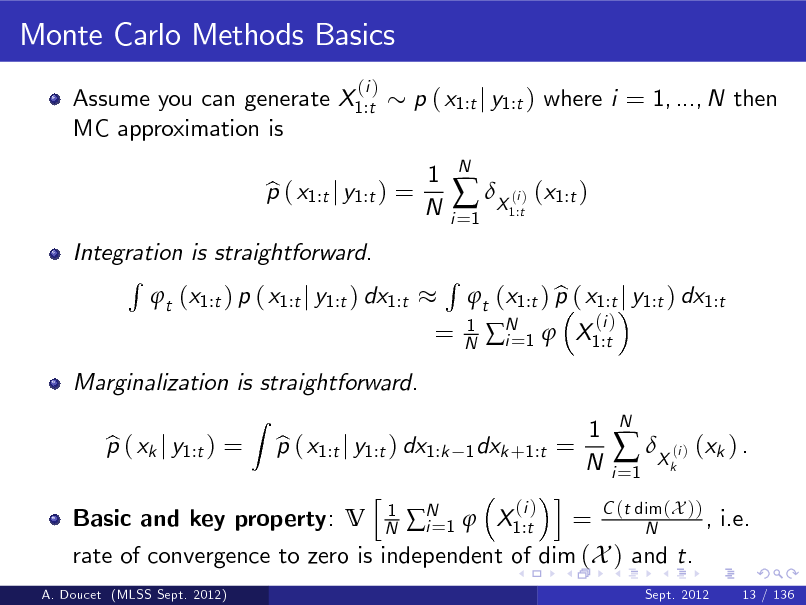

Monte Carlo Methods Basics

Assume you can generate X1:t MC approximation is

(i )

p ( x1:t j y1:t ) where i = 1, ..., N then 1 N

Integration is straightforward. R t (x1:t ) p ( x1:t j y1:t ) dx1:t

Z

p ( x1:t j y1:t ) = b

i =1

X ( ) (x1:t )

i 1:t

N

=

R

1 N

Marginalization is straightforward. p ( xk j y1:t ) = b p ( x1:t j y1:t ) dx1:k b

b t (x1:t ) p ( x1:t j y1:t ) dx1:t N 1 X1:t i=

(i )

1 dxk +1:t =

1 N

i =1

X ( ) (xk ) .

i k

N

A. Doucet (MLSS Sept. 2012)

Sept. 2012

13 / 136

58

Monte Carlo Methods Basics

Assume you can generate X1:t MC approximation is

(i )

p ( x1:t j y1:t ) where i = 1, ..., N then 1 N

Integration is straightforward. R t (x1:t ) p ( x1:t j y1:t ) dx1:t

Z

p ( x1:t j y1:t ) = b

i =1

X ( ) (x1:t )

i 1:t

N

=

R

1 N

Marginalization is straightforward. p ( xk j y1:t ) = b p ( x1:t j y1:t ) dx1:k b h

1 N

b t (x1:t ) p ( x1:t j y1:t ) dx1:t N 1 X1:t i=

(i )

1 dxk +1:t =

1 N

Basic and key property: V

A. Doucet (MLSS Sept. 2012)

i (i ) = C (t dim (X )) , i.e. N 1 X1:t i= N rate of convergence to zero is independent of dim (X ) and t.

Sept. 2012 13 / 136

i =1

X ( ) (xk ) .

i k

N

59

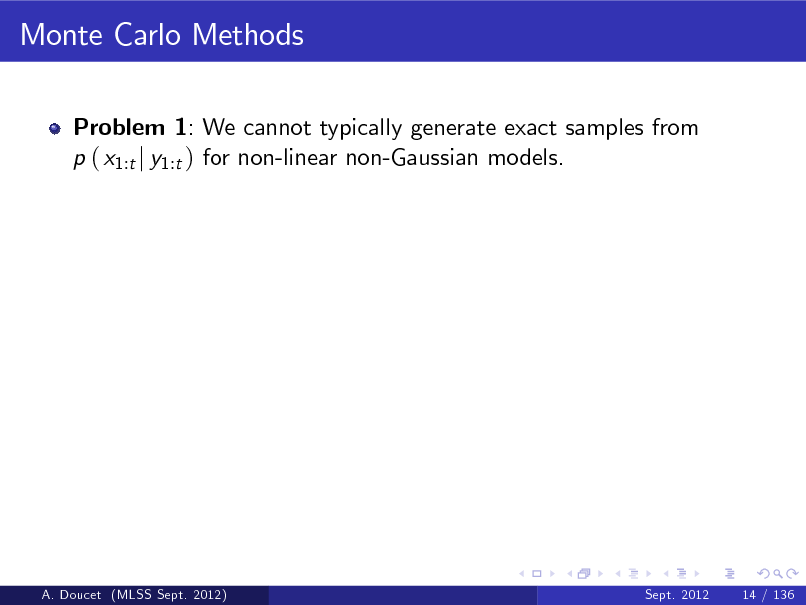

Monte Carlo Methods

Problem 1: We cannot typically generate exact samples from p ( x1:t j y1:t ) for non-linear non-Gaussian models.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

14 / 136

60

Monte Carlo Methods

Problem 1: We cannot typically generate exact samples from p ( x1:t j y1:t ) for non-linear non-Gaussian models.

Problem 2: Even if we could, algorithms to generate samples from p ( x1:t j y1:t ) will have at least complexity O (t ) .

A. Doucet (MLSS Sept. 2012)

Sept. 2012

14 / 136

61

Monte Carlo Methods

Problem 1: We cannot typically generate exact samples from p ( x1:t j y1:t ) for non-linear non-Gaussian models.

Problem 2: Even if we could, algorithms to generate samples from p ( x1:t j y1:t ) will have at least complexity O (t ) . Typical solution to problem 1 is to generate approximate samples using MCMC methods but these methods are not recursive.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

14 / 136

62

Monte Carlo Methods

Problem 1: We cannot typically generate exact samples from p ( x1:t j y1:t ) for non-linear non-Gaussian models.

Problem 2: Even if we could, algorithms to generate samples from p ( x1:t j y1:t ) will have at least complexity O (t ) . Typical solution to problem 1 is to generate approximate samples using MCMC methods but these methods are not recursive.

SMC Methods solves partially Problem 1 and Problem 2 by breaking the problem of sampling from p ( x1:t j y1:t ) into a collection of simpler subproblems. First approximate p ( x1 j y1 ) and p (y1 ) at time 1, then p ( x1:2 j y1:2 ) and p (y1:2 ) at time 2 and so on.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

14 / 136

63

Monte Carlo Methods

Problem 1: We cannot typically generate exact samples from p ( x1:t j y1:t ) for non-linear non-Gaussian models.

Problem 2: Even if we could, algorithms to generate samples from p ( x1:t j y1:t ) will have at least complexity O (t ) . Typical solution to problem 1 is to generate approximate samples using MCMC methods but these methods are not recursive.

SMC Methods solves partially Problem 1 and Problem 2 by breaking the problem of sampling from p ( x1:t j y1:t ) into a collection of simpler subproblems. First approximate p ( x1 j y1 ) and p (y1 ) at time 1, then p ( x1:2 j y1:2 ) and p (y1:2 ) at time 2 and so on. Each target distribution is approximated by a cloud of random samples termed particles evolving according to importance sampling and resampling steps.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

14 / 136

64

Standard Bayesian Recursion

In most textbooks, you will nd the following recursion for fp ( xt j y1:t )gt 1 .

A. Doucet (MLSS Sept. 2012)

Sept. 2012

15 / 136

65

Standard Bayesian Recursion

In most textbooks, you will nd the following recursion for fp ( xt j y1:t )gt 1 . Prediction step p ( xt j y1:t

1)

= = =

Z

p ( xt

Z Z

1 , xt j y1:t 1 ) dxt 1 1 , xt 1 ) p ( xt 1 j y1:t 1 ) dxt 1 1 ) p ( xt 1 j y1:t 1 ) dxt 1 .

p ( xt j y1:t f ( xt j xt

A. Doucet (MLSS Sept. 2012)

Sept. 2012

15 / 136

66

Standard Bayesian Recursion

In most textbooks, you will nd the following recursion for fp ( xt j y1:t )gt 1 . Prediction step p ( xt j y1:t

1)

= = =

Z

p ( xt

Z Z

1 , xt j y1:t 1 ) dxt 1 1 , xt 1 ) p ( xt 1 j y1:t 1 ) dxt 1 1 ) p ( xt 1 j y1:t 1 ) dxt 1 .

p ( xt j y1:t f ( xt j xt

Bayes Updating step

p ( xt j y1:t ) = where p ( yt j y1:t

A. Doucet (MLSS Sept. 2012)

1)

=

Z

g ( yt j xt ) p ( xt j y1:t p ( yt j y1:t 1 ) g ( yt j xt ) p ( xt j y1:t

1)

1 ) dxt

Sept. 2012

15 / 136

67

Standard Bayesian Recursion

In most textbooks, you will nd the following recursion for fp ( xt j y1:t )gt 1 . Prediction step p ( xt j y1:t

1)

= = =

Z

p ( xt

Z Z

1 , xt j y1:t 1 ) dxt 1 1 , xt 1 ) p ( xt 1 j y1:t 1 ) dxt 1 1 ) p ( xt 1 j y1:t 1 ) dxt 1 .

p ( xt j y1:t f ( xt j xt

Bayes Updating step

p ( xt j y1:t ) = where p ( yt j y1:t

A. Doucet (MLSS Sept. 2012)

1)

=

This is the recursion implemented by Wonham and Kalman lters...

Sept. 2012 15 / 136

Z

g ( yt j xt ) p ( xt j y1:t p ( yt j y1:t 1 ) g ( yt j xt ) p ( xt j y1:t

1)

1 ) dxt

68

Bayesian Recursion on Path Space

SMC approximate directly fp ( x1:t j y1:t )gt 1 not fp ( xt j y1:t )gt 1 and relies on p (x1:t , y1:t ) g ( yt j xt ) f ( xt j xt 1 ) p (x1:t 1 , y1:t 1 ) p ( x1:t j y1:t ) = = p (y1:t ) p ( yt j y1:t 1 ) p (y1:t 1 )

=

where

}| z g ( yt j xt ) f ( xt j xt 1 ) p ( x1:t p ( yt j y1:t 1 )

1)

predictive p ( x1:t jy1:t

1)

1 j y1:t

{ 1)

1 ) dx1:t

p ( yt j y1:t

=

Z

g ( yt j xt ) p ( x1:t j y1:t

A. Doucet (MLSS Sept. 2012)

Sept. 2012

16 / 136

69

Bayesian Recursion on Path Space

SMC approximate directly fp ( x1:t j y1:t )gt 1 not fp ( xt j y1:t )gt 1 and relies on p (x1:t , y1:t ) g ( yt j xt ) f ( xt j xt 1 ) p (x1:t 1 , y1:t 1 ) p ( x1:t j y1:t ) = = p (y1:t ) p ( yt j y1:t 1 ) p (y1:t 1 )

=

where

}| z g ( yt j xt ) f ( xt j xt 1 ) p ( x1:t p ( yt j y1:t 1 )

1)

predictive p ( x1:t jy1:t

1)

1 j y1:t

{ 1)

1 ) dx1:t

p ( yt j y1:t Prediction Update

=

This can be alternatively written as p ( x1:t j y1:t 1 ) = f ( xt j xt 1 ) p ( x1:t g ( yt jxt )p ( x1:t jy1:t 1 ) . p ( x1:t j y1:t ) = p ( yt jy1:t 1 )

1 j y1:t 1 ) ,

Z

g ( yt j xt ) p ( x1:t j y1:t

A. Doucet (MLSS Sept. 2012)

Sept. 2012

16 / 136

70

Bayesian Recursion on Path Space

SMC approximate directly fp ( x1:t j y1:t )gt 1 not fp ( xt j y1:t )gt 1 and relies on p (x1:t , y1:t ) g ( yt j xt ) f ( xt j xt 1 ) p (x1:t 1 , y1:t 1 ) p ( x1:t j y1:t ) = = p (y1:t ) p ( yt j y1:t 1 ) p (y1:t 1 )

=

where

}| z g ( yt j xt ) f ( xt j xt 1 ) p ( x1:t p ( yt j y1:t 1 )

1)

predictive p ( x1:t jy1:t

1)

1 j y1:t

{ 1)

1 ) dx1:t

p ( yt j y1:t Prediction Update

=

This can be alternatively written as p ( x1:t j y1:t 1 ) = f ( xt j xt 1 ) p ( x1:t g ( yt jxt )p ( x1:t jy1:t 1 ) . p ( x1:t j y1:t ) = p ( yt jy1:t 1 )

1 j y1:t 1 ) ,

Z

g ( yt j xt ) p ( x1:t j y1:t

SMC is a simple and natural simulation-based implementation of this recursion.

A. Doucet (MLSS Sept. 2012) Sept. 2012 16 / 136

71

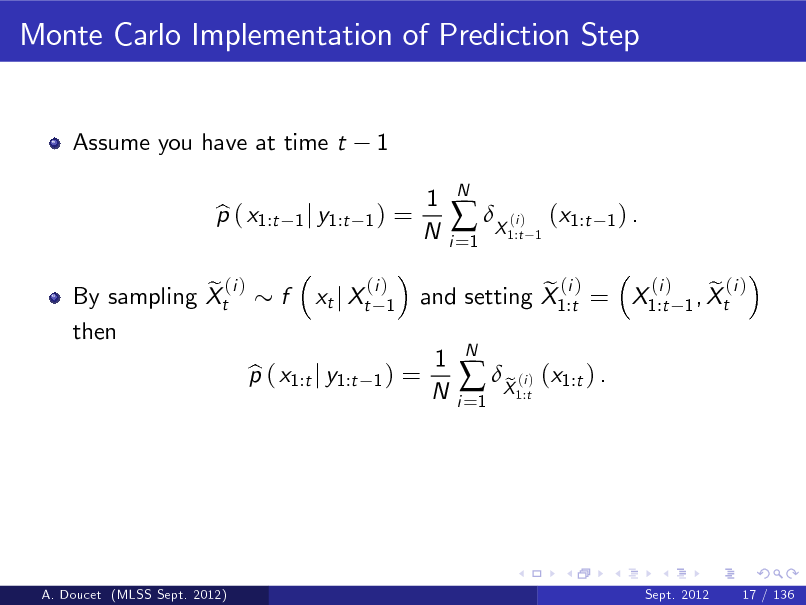

Monte Carlo Implementation of Prediction Step

Assume you have at time t p ( x1:t b

1

1 j y1:t 1 )

=

1 N

i =1

X ( )

N

i 1:t 1

(x1:t

1) .

A. Doucet (MLSS Sept. 2012)

Sept. 2012

17 / 136

72

Monte Carlo Implementation of Prediction Step

Assume you have at time t p ( x1:t b

1

1 j y1:t 1 )

=

1 N

i =1

X ( )

N

i 1:t 1

(x1:t

1) .

e (i ) By sampling Xt then

f

xt j Xt

(i ) 1

p ( x1:t j y1:t b

1)

=

(i ) e (i ) e (i ) and setting X1:t = X1:t 1 , Xt

1 N

i =1

X ( ) (x1:t ) . e

i 1:t

N

A. Doucet (MLSS Sept. 2012)

Sept. 2012

17 / 136

73

Monte Carlo Implementation of Prediction Step

Assume you have at time t p ( x1:t b

1

1 j y1:t 1 )

=

1 N

i =1

X ( )

N

i 1:t 1

(x1:t

1) .

e (i ) By sampling Xt then

f

xt j Xt

(i ) 1

Sampling from f ( xt j xt 1 ) is usually straightforward and can be done even if f ( xt j xt 1 ) does not admit any analytical expression; e.g. biochemical network models.

p ( x1:t j y1:t b

1)

=

(i ) e (i ) e (i ) and setting X1:t = X1:t 1 , Xt

1 N

i =1

X ( ) (x1:t ) . e

i 1:t

N

A. Doucet (MLSS Sept. 2012)

Sept. 2012

17 / 136

74

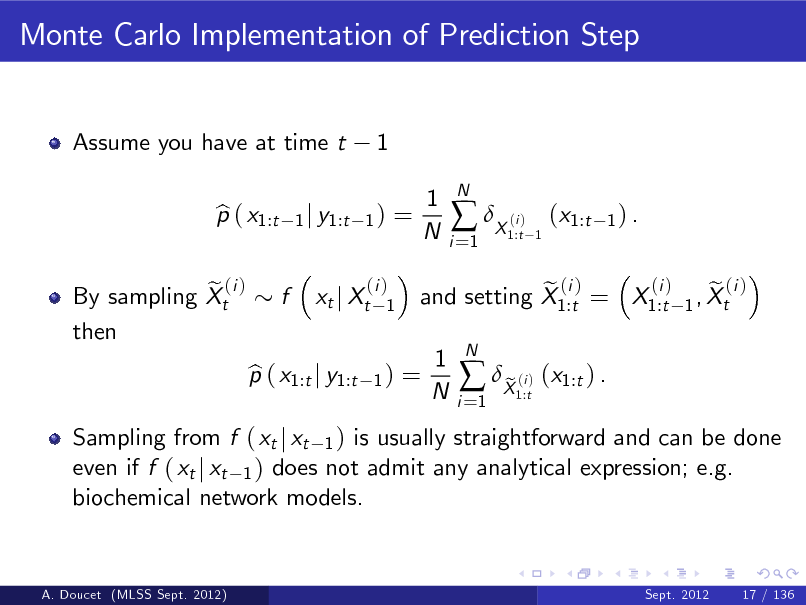

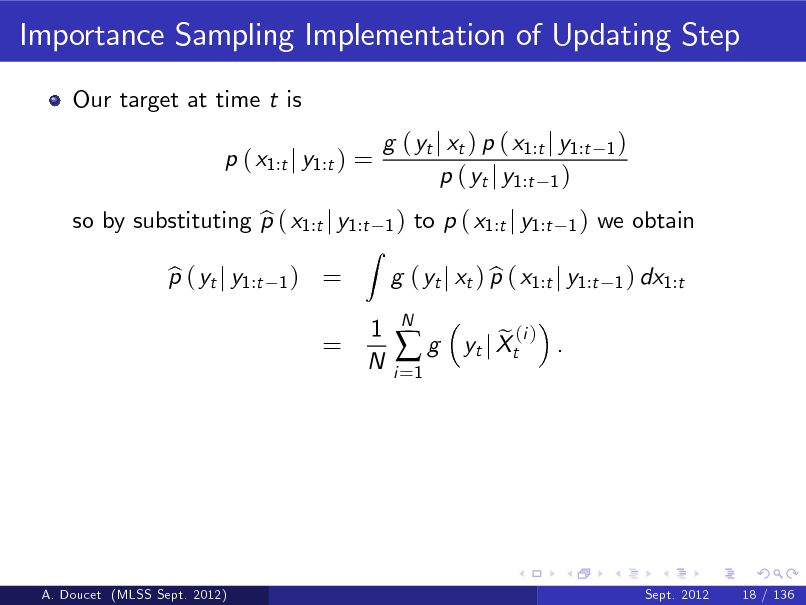

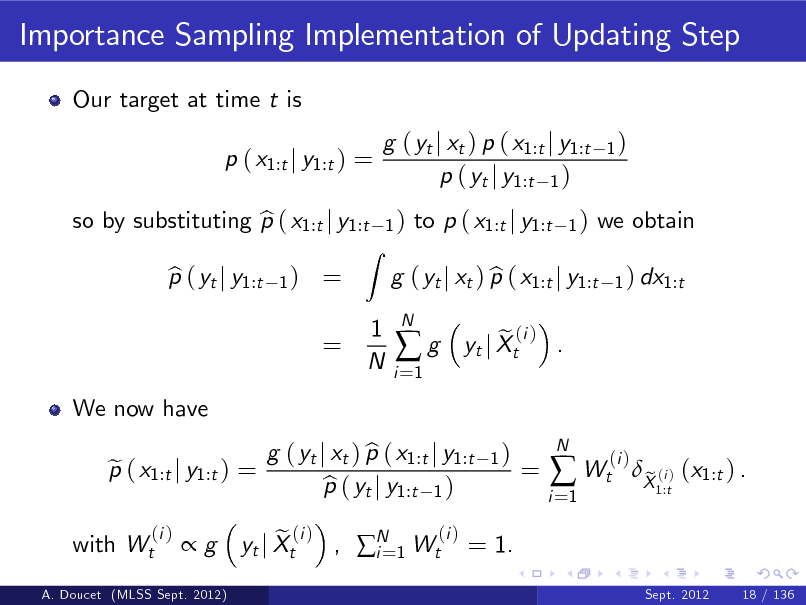

Importance Sampling Implementation of Updating Step

Our target at time t is p ( x1:t j y1:t ) = so by substituting p ( x1:t j y1:t b p ( yt j y1:t b

1)

g ( yt j xt ) p ( x1:t j y1:t p ( yt j y1:t 1 )

1)

1)

= =

Z

to p ( x1:t j y1:t

1)

we obtain

1 ) dx1:t

1 N

g ( yt j xt ) p ( x1:t j y1:t b

i =1

g

N

e (i ) . yt j Xt

A. Doucet (MLSS Sept. 2012)

Sept. 2012

18 / 136

75

Importance Sampling Implementation of Updating Step

Our target at time t is p ( x1:t j y1:t ) = so by substituting p ( x1:t j y1:t b p ( yt j y1:t b

1)

g ( yt j xt ) p ( x1:t j y1:t p ( yt j y1:t 1 )

1)

1)

= =

Z

to p ( x1:t j y1:t

1)

we obtain

1 ) dx1:t

1 N

g ( yt j xt ) p ( x1:t j y1:t b

i =1

g

N

We now have p ( x1:t j y1:t ) = e

(i )

e (i ) . yt j Xt

1)

with Wt

g

A. Doucet (MLSS Sept. 2012)

e (i ) , N 1 Wt(i ) = 1. yt j Xt i=

g ( yt j xt ) p ( x1:t j y1:t b p ( yt j y1:t 1 ) b

=

i =1

Wt

N

(i )

X (i ) (x1:t ) . e

1:t Sept. 2012 18 / 136

76

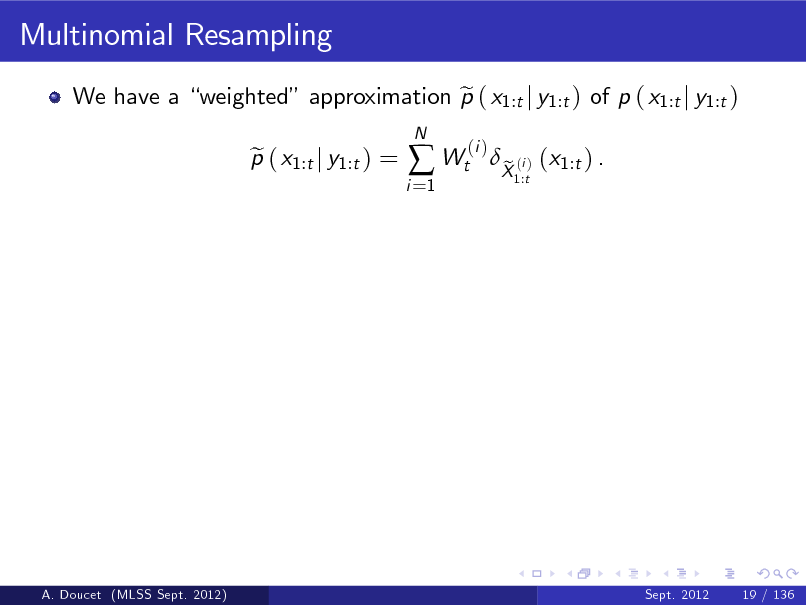

Multinomial Resampling

We have a weighted approximation p ( x1:t j y1:t ) of p ( x1:t j y1:t ) e p ( x1:t j y1:t ) = e

i =1 N

Wt

(i )

X (i ) (x1:t ) . e

1:t

A. Doucet (MLSS Sept. 2012)

Sept. 2012

19 / 136

77

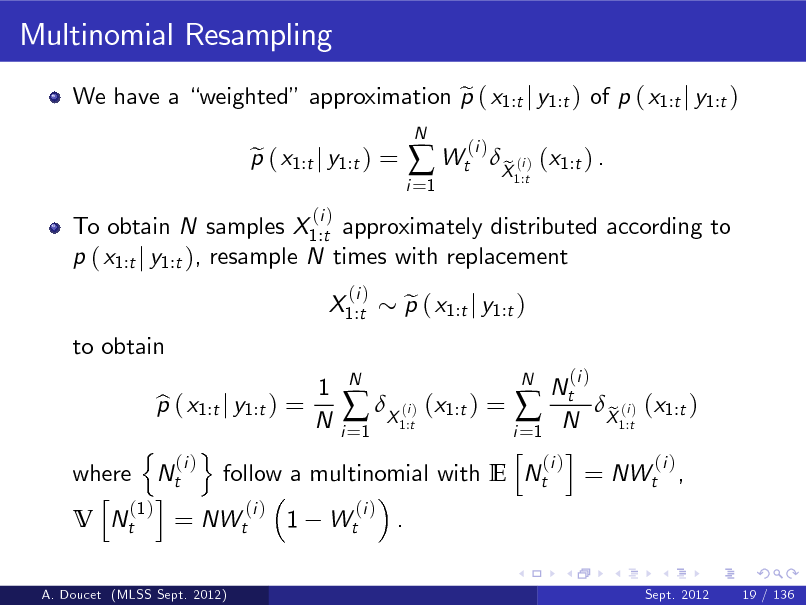

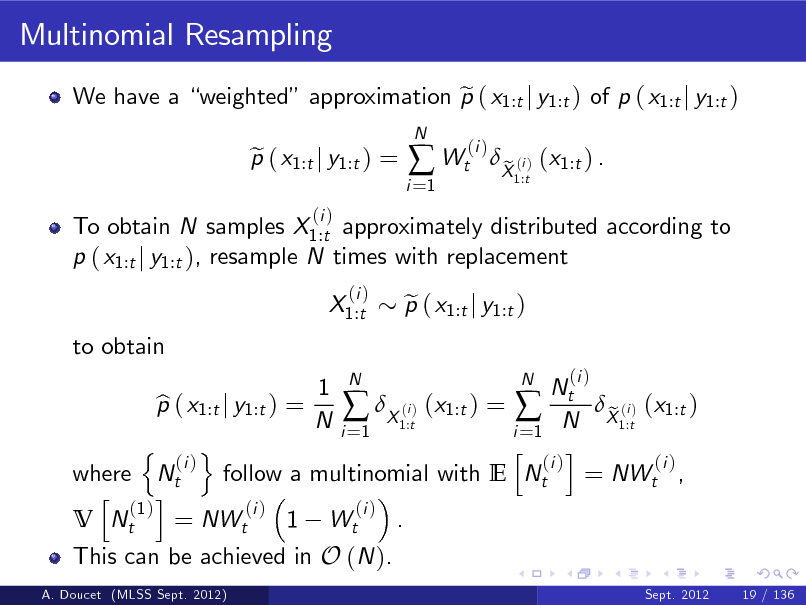

Multinomial Resampling

We have a weighted approximation p ( x1:t j y1:t ) of p ( x1:t j y1:t ) e To obtain N samples X1:t approximately distributed according to p ( x1:t j y1:t ), resample N times with replacement X1:t to obtain

(i )

N

p ( x1:t j y1:t ) = e

(i )

i =1

Wt

(i )

X (i ) (x1:t ) . e

1:t

N 1 N Nt p ( x1:t j y1:t ) = b (i X1:t) (x1:t ) = N X1:t) (x1:t ) e (i N i =1 i =1 n o h i (i ) (i ) (i ) where Nt follow a multinomial with E Nt = NWt , h i (1 ) (i ) (i ) V Nt = NWt 1 Wt .

A. Doucet (MLSS Sept. 2012) Sept. 2012 19 / 136

p ( x1:t j y1:t ) e

(i )

78

Multinomial Resampling

We have a weighted approximation p ( x1:t j y1:t ) of p ( x1:t j y1:t ) e To obtain N samples X1:t approximately distributed according to p ( x1:t j y1:t ), resample N times with replacement X1:t to obtain

(i )

N

p ( x1:t j y1:t ) = e

(i )

i =1

Wt

(i )

X (i ) (x1:t ) . e

1:t

This can be achieved in O (N ).

A. Doucet (MLSS Sept. 2012)

N 1 N Nt p ( x1:t j y1:t ) = b (i X1:t) (x1:t ) = N X1:t) (x1:t ) e (i N i =1 i =1 n o h i (i ) (i ) (i ) where Nt follow a multinomial with E Nt = NWt , h i (1 ) (i ) (i ) V Nt = NWt 1 Wt .

Sept. 2012 19 / 136

p ( x1:t j y1:t ) e

(i )

79

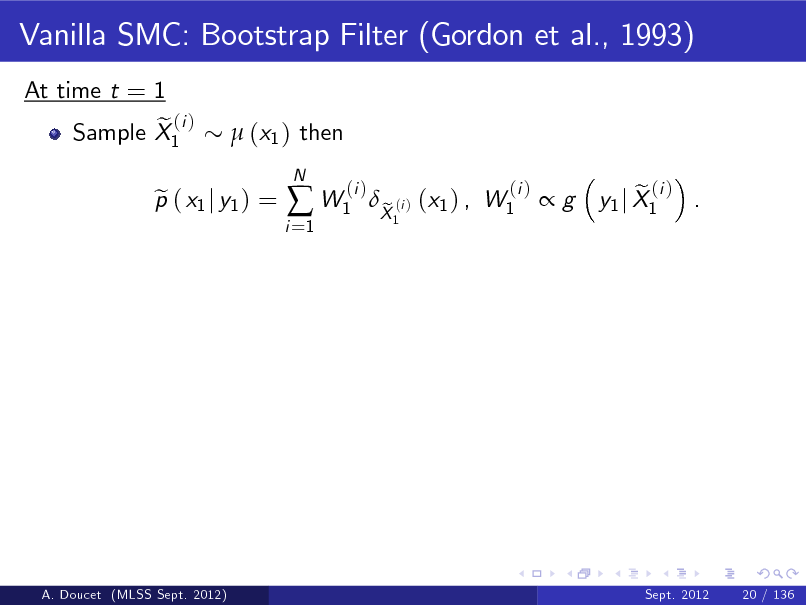

Vanilla SMC: Bootstrap Filter (Gordon et al., 1993)

At time t = 1 e (i ) Sample X1 (x1 ) then

p ( x1 j y1 ) = e

i =1

W1

N

(i )

X (i ) (x1 ) , W1 e

1

(i )

g

e (i ) y1 j X1 .

A. Doucet (MLSS Sept. 2012)

Sept. 2012

20 / 136

80

Vanilla SMC: Bootstrap Filter (Gordon et al., 1993)

At time t = 1 e (i ) Sample X1 (x1 ) then

Resample X1

p ( x1 j y1 ) = e

(i )

i =1

W1

N

(i )

p ( x1 j y1 ) to obtain p ( x1 j y1 ) = e b

X (i ) (x1 ) , W1 e

1

(i )

g

1 N

e (i ) y1 j X1 .

1

N 1 X (i ) (x1 ). i=

A. Doucet (MLSS Sept. 2012)

Sept. 2012

20 / 136

81

Vanilla SMC: Bootstrap Filter (Gordon et al., 1993)

At time t = 1 e (i ) Sample X1 (x1 ) then

Resample X1

p ( x1 j y1 ) = e

(i )

i =1

W1

N

(i )

p ( x1 j y1 ) to obtain p ( x1 j y1 ) = e b

X (i ) (x1 ) , W1 e

1

(i )

g

1 N

e (i ) y1 j X1 .

1

N 1 X (i ) (x1 ). i=

A. Doucet (MLSS Sept. 2012)

Sept. 2012

20 / 136

82

Vanilla SMC: Bootstrap Filter (Gordon et al., 1993)

At time t = 1 e (i ) Sample X1 (x1 ) then

Resample X1 At time t 2 e (i ) Sample Xt

p ( x1 j y1 ) = e

(i )

i =1

W1

N

(i )

p ( x1 j y1 ) to obtain p ( x1 j y1 ) = e b xt j Xt

N

X (i ) (x1 ) , W1 e

1

(i )

g

1 N

e (i ) y1 j X1 .

1

N 1 X (i ) (x1 ). i= and

f

(i ) 1

p ( x1:t j y1:t ) = e

i =1

Wt

(i )

(i ) e (i ) e (i ) , set X1:t = X1:t 1 , Xt

X (i ) (x1:t ) , Wt e

1:t

(i )

g

e (i ) . yt j Xt

A. Doucet (MLSS Sept. 2012)

Sept. 2012

20 / 136

83

Vanilla SMC: Bootstrap Filter (Gordon et al., 1993)

At time t = 1 e (i ) Sample X1 (x1 ) then

Resample X1 At time t 2 e (i ) Sample Xt

p ( x1 j y1 ) = e

(i )

i =1

W1

N

(i )

p ( x1 j y1 ) to obtain p ( x1 j y1 ) = e b xt j Xt

N

X (i ) (x1 ) , W1 e

1

(i )

g

1 N

e (i ) y1 j X1 .

1

N 1 X (i ) (x1 ). i= and

f

(i ) 1

A. Doucet (MLSS Sept. 2012)

Resample X1:t p ( x1:t j y1:t ) = b

p ( x1:t j y1:t ) = e

(i )

1 N

i =1

Wt

(i )

(i ) e (i ) e (i ) , set X1:t = X1:t 1 , Xt

p ( x1:t j y1:t ) to obtain e N 1 X (i ) (x1:t ). i=

1:t

X (i ) (x1:t ) , Wt e

1:t

(i )

g

e (i ) . yt j Xt

Sept. 2012

20 / 136

84

SMC Output

At time t, we get p ( x1:t j y1:t ) = e

N

i =1

Wt

1 N

N i =1

(i )

p ( x1:t j y1:t ) = b

X ( ) (x1:t ) .

i 1:t

X (i ) (x1:t ) , e

1:t

A. Doucet (MLSS Sept. 2012)

Sept. 2012

21 / 136

85

SMC Output

At time t, we get p ( x1:t j y1:t ) = e

N

i =1

Wt

1 N

N i =1

(i )

The marginal likelihood estimate is given by p (y1:t ) = b b p ( yk j y1:k

t 1)

p ( x1:t j y1:t ) = b

X ( ) (x1:t ) .

i 1:t

X (i ) (x1:t ) , e

1:t

=

k =1

k =1

t

1 N

i =1

g

N

e (i ) yk j Xk

!

.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

21 / 136

86

SMC Output

At time t, we get p ( x1:t j y1:t ) = e

N

i =1

Wt

1 N

N i =1

(i )

The marginal likelihood estimate is given by p (y1:t ) = b b p ( yk j y1:k

t 1)

p ( x1:t j y1:t ) = b

X ( ) (x1:t ) .

i 1:t

X (i ) (x1:t ) , e

1:t

=

k =1

k =1

t

1 N

i =1

g

N

Computational complexity is O (N ) at each time step and memory requirements O (tN ) .

e (i ) yk j Xk

!

.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

21 / 136

87

SMC Output

At time t, we get p ( x1:t j y1:t ) = e

N

i =1

Wt

1 N

N i =1

(i )

The marginal likelihood estimate is given by p (y1:t ) = b b p ( yk j y1:k

t 1)

p ( x1:t j y1:t ) = b

X ( ) (x1:t ) .

i 1:t

X (i ) (x1:t ) , e

1:t

=

k =1

k =1

t

1 N

i =1

g

N

Computational complexity is O (N ) at each time step and memory requirements O (tN ) . If we are only interested in p ( xt j y1:t ) or p ( st (x1:t )j y1:t ) where 2 st (x1:t ) = t (xt , st 1 (x1:t 1 )) - e.g. st (x1:t ) = t =1 xk - is k xed-dimensional then memory requirements O (N ) .

A. Doucet (MLSS Sept. 2012) Sept. 2012 21 / 136

e (i ) yk j Xk

!

.

88

![Slide: SMC on Path-Space - gures by Olivier Capp e

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

b Figure: p ( x1 j y1 ) and E [ X1 j y1 ] (top) and particle approximation of p ( x1 j y1 ) (bottom) (MLSS Sept. 2012) A. Doucet Sept. 2012 22 / 136](https://yosinski.com/mlss12/media/slides/MLSS-2012-Doucet-Sequential-Monte-Carlo-Methods_089.png)

SMC on Path-Space - gures by Olivier Capp e

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

b Figure: p ( x1 j y1 ) and E [ X1 j y1 ] (top) and particle approximation of p ( x1 j y1 ) (bottom) (MLSS Sept. 2012) A. Doucet Sept. 2012 22 / 136

89

![Slide: 1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

b b Figure: p ( x1 j y1 ) , p ( x2 j y1 :2 )and E [ X1 j y1 ] , E [ X2 j y1 :2 ] (top) and particle approximation of p ( x1 :2 j y1 :2 ) (bottom)

A. Doucet (MLSS Sept. 2012) Sept. 2012 23 / 136](https://yosinski.com/mlss12/media/slides/MLSS-2012-Doucet-Sequential-Monte-Carlo-Methods_090.png)

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

b b Figure: p ( x1 j y1 ) , p ( x2 j y1 :2 )and E [ X1 j y1 ] , E [ X2 j y1 :2 ] (top) and particle approximation of p ( x1 :2 j y1 :2 ) (bottom)

A. Doucet (MLSS Sept. 2012) Sept. 2012 23 / 136

90

![Slide: 1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

b Figure: p ( xt j y1 :t ) and E [ Xt j y1 :t ] for t = 1, 2, 3 (top) and particle approximation of p ( x1 :3 j y1 :3 ) (bottom)

A. Doucet (MLSS Sept. 2012) Sept. 2012 24 / 136](https://yosinski.com/mlss12/media/slides/MLSS-2012-Doucet-Sequential-Monte-Carlo-Methods_091.png)

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

b Figure: p ( xt j y1 :t ) and E [ Xt j y1 :t ] for t = 1, 2, 3 (top) and particle approximation of p ( x1 :3 j y1 :3 ) (bottom)

A. Doucet (MLSS Sept. 2012) Sept. 2012 24 / 136

91

![Slide: 1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

b Figure: p ( xt j y1 :t ) and E [ Xt j y1 :t ] for t = 1, ..., 10 (top) and particle approximation of p ( x1 :10 j y1 :10 ) (bottom)

A. Doucet (MLSS Sept. 2012) Sept. 2012 25 / 136](https://yosinski.com/mlss12/media/slides/MLSS-2012-Doucet-Sequential-Monte-Carlo-Methods_092.png)

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

b Figure: p ( xt j y1 :t ) and E [ Xt j y1 :t ] for t = 1, ..., 10 (top) and particle approximation of p ( x1 :10 j y1 :10 ) (bottom)

A. Doucet (MLSS Sept. 2012) Sept. 2012 25 / 136

92

![Slide: 1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

b Figure: p ( xt j y1 :t ) and E [ Xt j y1 :t ] for t = 1, ..., 24 (top) and particle approximation of p ( x1 :24 j y1 :24 ) (bottom)

A. Doucet (MLSS Sept. 2012) Sept. 2012 26 / 136](https://yosinski.com/mlss12/media/slides/MLSS-2012-Doucet-Sequential-Monte-Carlo-Methods_093.png)

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

1.6 1.4 1.2

state

1 0.8 0.6 0.4

5

10 time index

15

20

25

b Figure: p ( xt j y1 :t ) and E [ Xt j y1 :t ] for t = 1, ..., 24 (top) and particle approximation of p ( x1 :24 j y1 :24 ) (bottom)

A. Doucet (MLSS Sept. 2012) Sept. 2012 26 / 136

93

Remarks

Empirically this SMC strategy performs well in terms of estimating the marginals fp ( xt j y1:t )gt 1 . This is what is only necessary in many applications thankfully.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

27 / 136

94

Remarks

Empirically this SMC strategy performs well in terms of estimating the marginals fp ( xt j y1:t )gt 1 . This is what is only necessary in many applications thankfully. However, the joint distribution p ( x1:t j y1:t ) is poorly estimated when t is large; i.e. we have in the previous example p ( x1:11 j y1:24 ) = X 1:11 (x1:11 ) . b

A. Doucet (MLSS Sept. 2012)

Sept. 2012

27 / 136

95

Remarks

Empirically this SMC strategy performs well in terms of estimating the marginals fp ( xt j y1:t )gt 1 . This is what is only necessary in many applications thankfully. However, the joint distribution p ( x1:t j y1:t ) is poorly estimated when t is large; i.e. we have in the previous example p ( x1:11 j y1:24 ) = X 1:11 (x1:11 ) . b p ( x1:k j y1:t ) = X 1:k (x1:k ) ; b

Degeneracy problem. For any N and any k, there exists t (k, N ) such that for any t t (k, N )

p ( x1:t j y1:t ) is an unreliable approximation of p ( x1:t j y1:t ) as t %. b

A. Doucet (MLSS Sept. 2012) Sept. 2012 27 / 136

96

Another Illustration of the Degeneracy Phenomenon

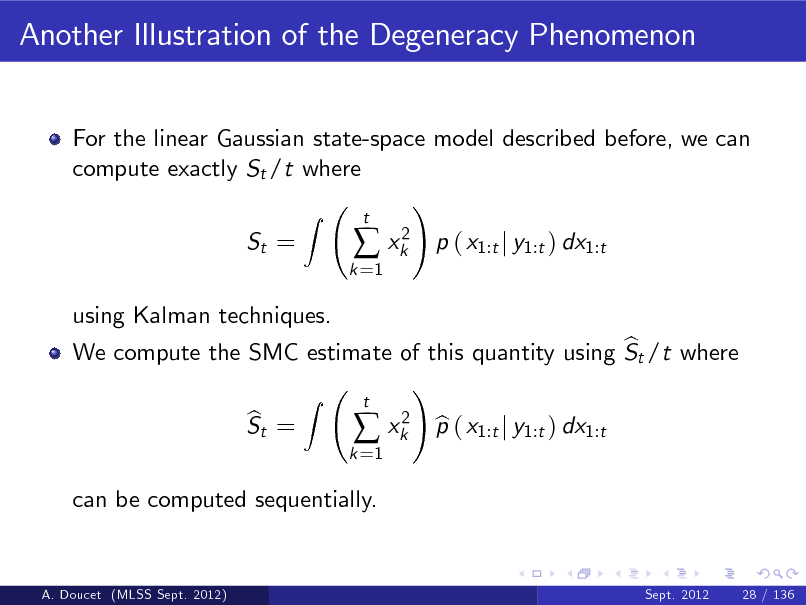

For the linear Gaussian state-space model described before, we can compute exactly St /t where ! Z St =

k =1

xk2

t

p ( x1:t j y1:t ) dx1:t

using Kalman techniques.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

28 / 136

97

Another Illustration of the Degeneracy Phenomenon

For the linear Gaussian state-space model described before, we can compute exactly St /t where ! Z St =

k =1

xk2

t

p ( x1:t j y1:t ) dx1:t

using Kalman techniques. b We compute the SMC estimate of this quantity using St /t where ! Z t 2 b St = b xk p ( x1:t j y1:t ) dx1:t

k =1

can be computed sequentially.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

28 / 136

98

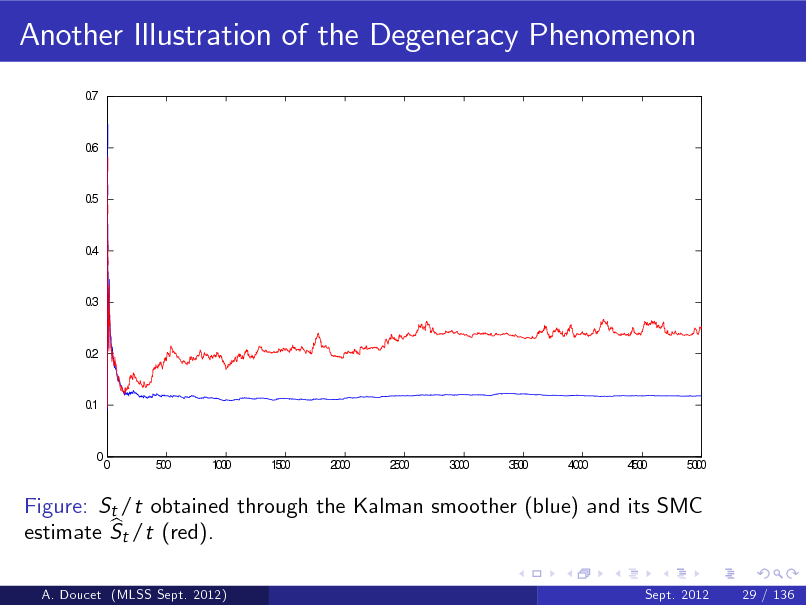

Another Illustration of the Degeneracy Phenomenon

0 .7

0 .6

0 .5

0 .4

0 .3

0 .2

0 .1

0

0

5 00

10 00

10 50

20 00

20 50

30 00

30 50

40 00

40 50

50 00

Figure: St /t obtained through the Kalman smoother (blue) and its SMC b estimate St /t (red).

A. Doucet (MLSS Sept. 2012)

Sept. 2012

29 / 136

99

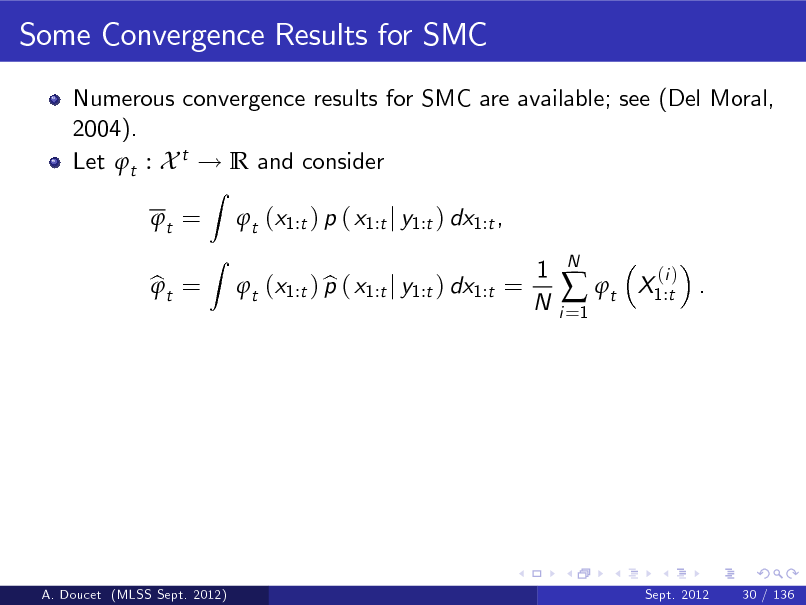

Some Convergence Results for SMC

Numerous convergence results for SMC are available; see (Del Moral, 2004).

A. Doucet (MLSS Sept. 2012)

Sept. 2012

30 / 136

100

Some Convergence Results for SMC

Numerous convergence results for SMC are available; see (Del Moral, 2004). Let t : X t ! R and consider t = bt =

Z Z

t (x1:t ) p ( x1:t j y1:t ) dx1:t , b t (x1:t ) p ( x1:t j y1:t ) dx1:t = 1 N

i =1

t

N

X1:t .

(i )

A. Doucet (MLSS Sept. 2012)

Sept. 2012

30 / 136

101

![Slide: Some Convergence Results for SMC

Numerous convergence results for SMC are available; see (Del Moral, 2004). Let t : X t ! R and consider t = bt =

Z Z

t (x1:t ) p ( x1:t j y1:t ) dx1:t , b t (x1:t ) p ( x1:t j y1:t ) dx1:t = 1 N

i =1

t

N

X1:t . 1

(i )

We can prove that for any bounded function and any p B (t ) c (p ) k k 1/p p E [j b t t jp ] , N p lim N ( b t t ) ) N 0, 2 . t

N !

A. Doucet (MLSS Sept. 2012)

Sept. 2012

30 / 136](https://yosinski.com/mlss12/media/slides/MLSS-2012-Doucet-Sequential-Monte-Carlo-Methods_102.png)

Some Convergence Results for SMC

Numerous convergence results for SMC are available; see (Del Moral, 2004). Let t : X t ! R and consider t = bt =

Z Z

t (x1:t ) p ( x1:t j y1:t ) dx1:t , b t (x1:t ) p ( x1:t j y1:t ) dx1:t = 1 N

i =1

t

N

X1:t . 1

(i )

We can prove that for any bounded function and any p B (t ) c (p ) k k 1/p p E [j b t t jp ] , N p lim N ( b t t ) ) N 0, 2 . t

N !

A. Doucet (MLSS Sept. 2012)

Sept. 2012

30 / 136

102

![Slide: Some Convergence Results for SMC

Numerous convergence results for SMC are available; see (Del Moral, 2004). Let t : X t ! R and consider t = bt =

Z Z

t (x1:t ) p ( x1:t j y1:t ) dx1:t , b t (x1:t ) p ( x1:t j y1:t ) dx1:t = 1 N

i =1

t

N

X1:t . 1

(i )

We can prove that for any bounded function and any p B (t ) c (p ) k k 1/p p E [j b t t jp ] , N p lim N ( b t t ) ) N 0, 2 . t

N !

Very weak results: B (t ) and 2 can increase with t and will for a t path-dependent t (x1:t ) as the degeneracy problem suggests.

A. Doucet (MLSS Sept. 2012) Sept. 2012 30 / 136](https://yosinski.com/mlss12/media/slides/MLSS-2012-Doucet-Sequential-Monte-Carlo-Methods_103.png)

Some Convergence Results for SMC

Numerous convergence results for SMC are available; see (Del Moral, 2004). Let t : X t ! R and consider t = bt =

Z Z

t (x1:t ) p ( x1:t j y1:t ) dx1:t , b t (x1:t ) p ( x1:t j y1:t ) dx1:t = 1 N

i =1

t

N

X1:t . 1

(i )

We can prove that for any bounded function and any p B (t ) c (p ) k k 1/p p E [j b t t jp ] , N p lim N ( b t t ) ) N 0, 2 . t

N !

Very weak results: B (t ) and 2 can increase with t and will for a t path-dependent t (x1:t ) as the degeneracy problem suggests.

A. Doucet (MLSS Sept. 2012) Sept. 2012 30 / 136

103

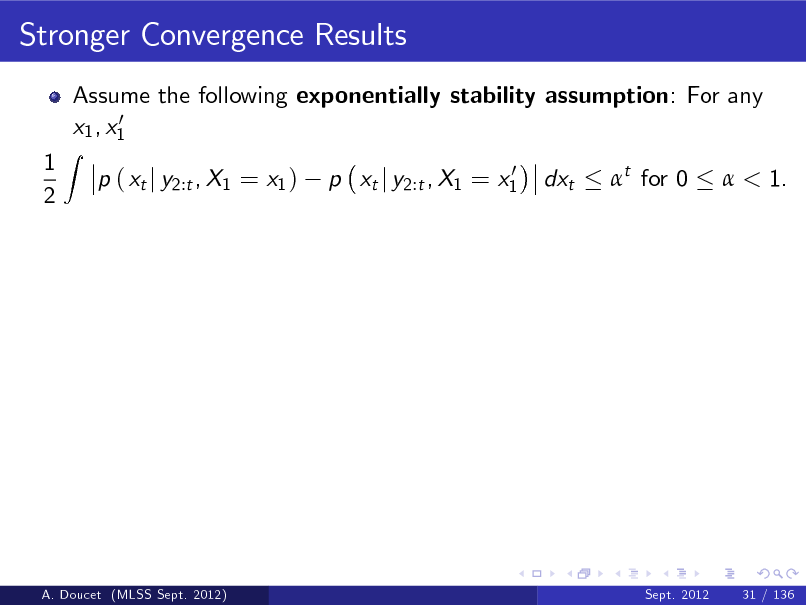

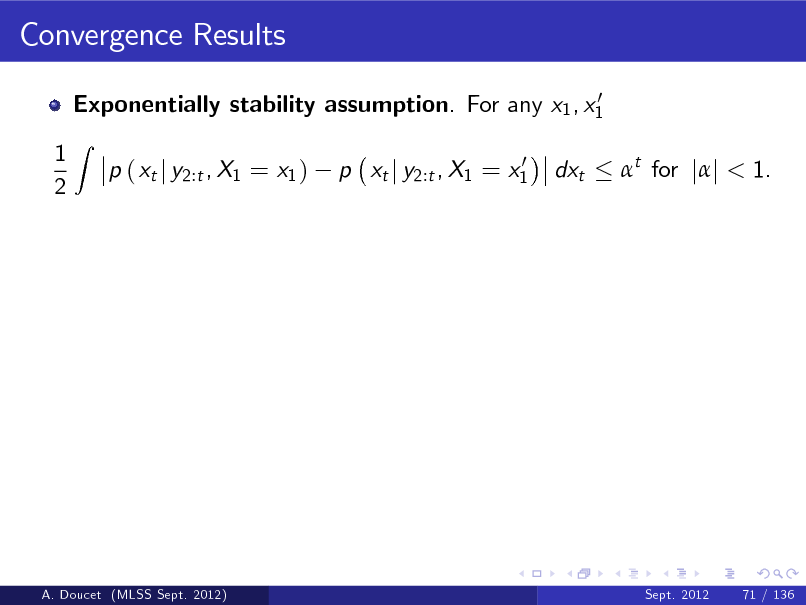

Stronger Convergence Results

Assume the following exponentially stability assumption: For any 0 x1 , x1 p ( xt j y2:t , X1 = x1 )

0 p xt j y2:t , X1 = x1

1 2

Z

dxt

t for 0

< 1.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

31 / 136

104

![Slide: Stronger Convergence Results

Assume the following exponentially stability assumption: For any 0 x1 , x1 p ( xt j y2:t , X1 = x1 )

0 p xt j y2:t , X1 = x1

1 2

Z

dxt

t for 0

< 1.

Marginal distribution. For t (x1:t ) = (xt L:t ), there exists B1 , B2 < s.t. B1 c ( p ) k k 1/p p E [j b t t jp ] , N p lim N ( b t t ) ) N 0, 2 where 2 B2 , t t i.e. there is no accumulation of numerical errors over time.

N !

A. Doucet (MLSS Sept. 2012)

Sept. 2012

31 / 136](https://yosinski.com/mlss12/media/slides/MLSS-2012-Doucet-Sequential-Monte-Carlo-Methods_105.png)

Stronger Convergence Results

Assume the following exponentially stability assumption: For any 0 x1 , x1 p ( xt j y2:t , X1 = x1 )

0 p xt j y2:t , X1 = x1

1 2

Z

dxt

t for 0

< 1.

Marginal distribution. For t (x1:t ) = (xt L:t ), there exists B1 , B2 < s.t. B1 c ( p ) k k 1/p p E [j b t t jp ] , N p lim N ( b t t ) ) N 0, 2 where 2 B2 , t t i.e. there is no accumulation of numerical errors over time.

N !

A. Doucet (MLSS Sept. 2012)

Sept. 2012

31 / 136

105

![Slide: Stronger Convergence Results

Assume the following exponentially stability assumption: For any 0 x1 , x1 p ( xt j y2:t , X1 = x1 )

0 p xt j y2:t , X1 = x1

1 2

Z

dxt

t for 0

< 1.

i.e. there is no accumulation of numerical errors over time. b L1 distance. If p ( x1:t j y1:t ) = E (p ( x1:t j y1:t )), there exists B3 < s.t. Z B3 t ; jp ( x1:t j y1:t ) p ( x1:t j y1:t )j dx1:t N i.e. the bias only increases in t.

A. Doucet (MLSS Sept. 2012) Sept. 2012 31 / 136

Marginal distribution. For t (x1:t ) = (xt L:t ), there exists B1 , B2 < s.t. B1 c ( p ) k k 1/p p E [j b t t jp ] , N p lim N ( b t t ) ) N 0, 2 where 2 B2 , t t

N !](https://yosinski.com/mlss12/media/slides/MLSS-2012-Doucet-Sequential-Monte-Carlo-Methods_106.png)

Stronger Convergence Results

Assume the following exponentially stability assumption: For any 0 x1 , x1 p ( xt j y2:t , X1 = x1 )

0 p xt j y2:t , X1 = x1

1 2

Z

dxt

t for 0

< 1.

i.e. there is no accumulation of numerical errors over time. b L1 distance. If p ( x1:t j y1:t ) = E (p ( x1:t j y1:t )), there exists B3 < s.t. Z B3 t ; jp ( x1:t j y1:t ) p ( x1:t j y1:t )j dx1:t N i.e. the bias only increases in t.

A. Doucet (MLSS Sept. 2012) Sept. 2012 31 / 136

Marginal distribution. For t (x1:t ) = (xt L:t ), there exists B1 , B2 < s.t. B1 c ( p ) k k 1/p p E [j b t t jp ] , N p lim N ( b t t ) ) N 0, 2 where 2 B2 , t t

N !

106

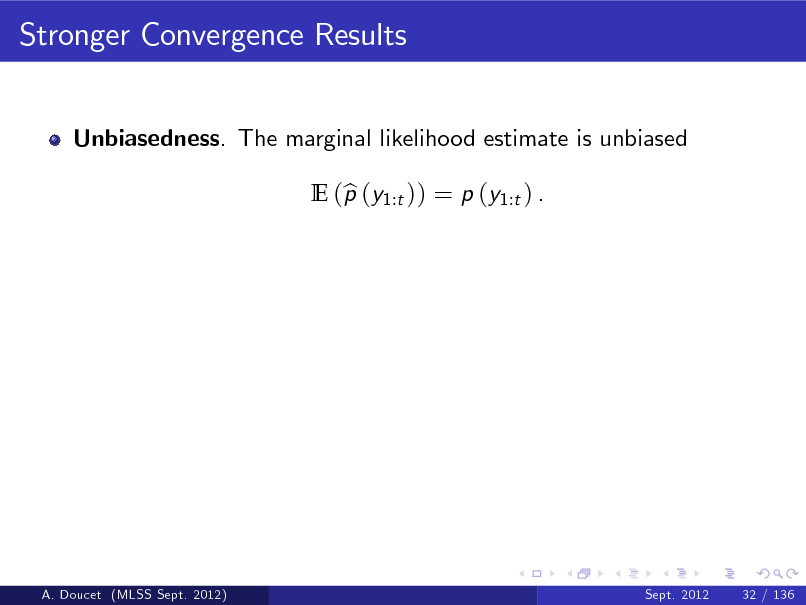

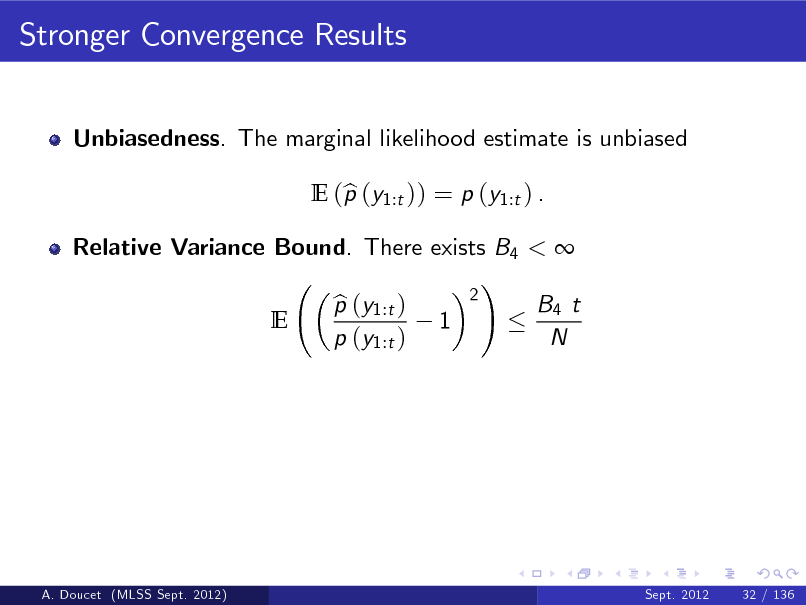

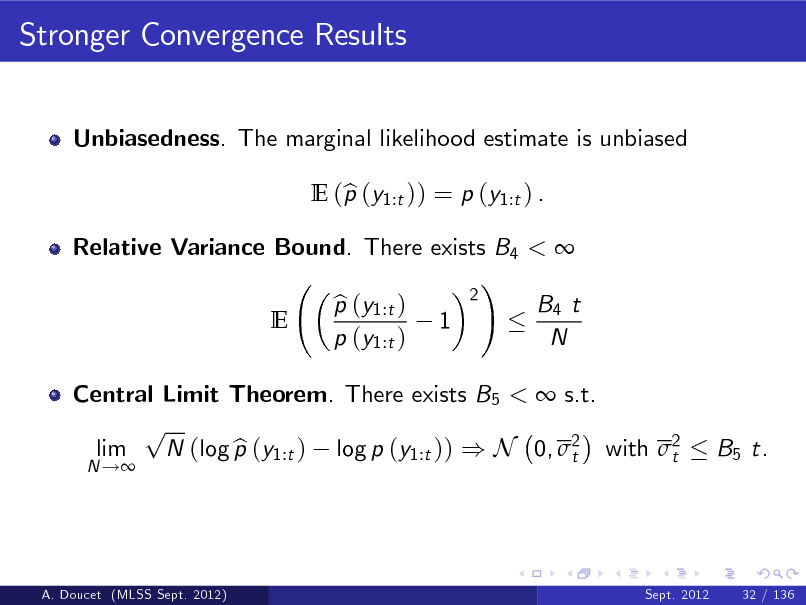

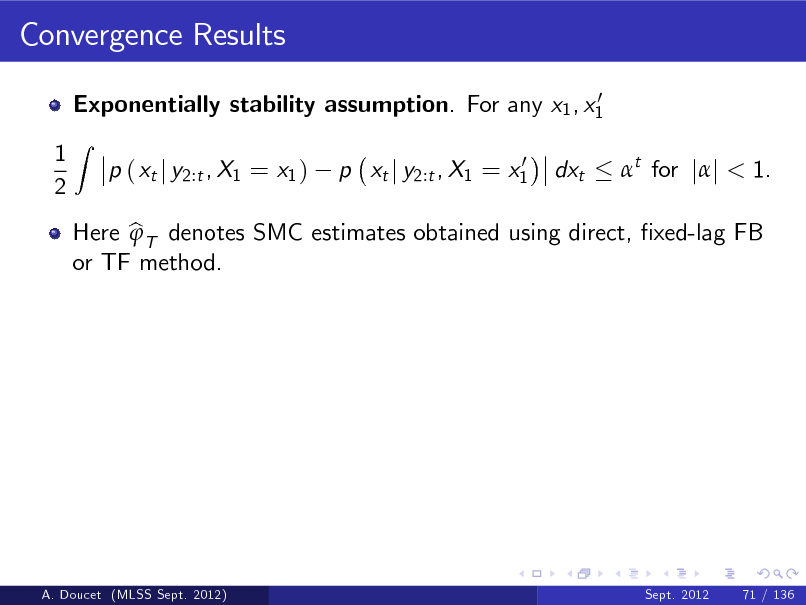

Stronger Convergence Results

Unbiasedness. The marginal likelihood estimate is unbiased E (p (y1:t )) = p (y1:t ) . b

A. Doucet (MLSS Sept. 2012)

Sept. 2012

32 / 136

107

Stronger Convergence Results

Unbiasedness. The marginal likelihood estimate is unbiased E (p (y1:t )) = p (y1:t ) . b

Relative Variance Bound. There exists B4 < ! 2 p (y1:t ) b B4 t E 1 p (y1:t ) N

A. Doucet (MLSS Sept. 2012)

Sept. 2012

32 / 136

108

Stronger Convergence Results

Unbiasedness. The marginal likelihood estimate is unbiased E (p (y1:t )) = p (y1:t ) . b

Relative Variance Bound. There exists B4 < ! 2 p (y1:t ) b B4 t E 1 p (y1:t ) N

Central Limit Theorem. There exists B5 < s.t. p lim N (log p (y1:t ) log p (y1:t )) ) N 0, 2 with 2 b t t

N !

B5 t.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

32 / 136

109

Basic Idea Used to Establish Uniform Lp Bounds

We denote k (xk ) = p ( xk j y1:k and its particle approximation. bk (xk ) = p ( xk j y1:k b

1) 1)

A. Doucet (MLSS Sept. 2012)

Sept. 2012

33 / 136

110

Basic Idea Used to Establish Uniform Lp Bounds

We denote k (xk ) = p ( xk j y1:k and its particle approximation. bk (xk ) = p ( xk j y1:k b t = k ,t ( k ) , which saties k ,t ( k ) (xt ) =

Z

1) 1)

Let k ,t be the measure-valued mapping such that

(x ) .p ( yk :t 1 j xk ) R k k p ( xt j xk , yk +1:t k (xk ) p ( yk :t 1 j xk ) dxk | {z }

p (xk jy1:t

1) Sept. 2012

1 ) dxk .

A. Doucet (MLSS Sept. 2012)

33 / 136

111

Key Decomposition Formula

1 + b1

! !

2 = 1,2 ( 1 ) 1,2 (b1 ) + b2

! ! ! + bt

! ! !

1

t = 1,t ( 1 ) 1,t (b1 )

1,t

!

Decomposition of the error bt t =

t + bt

2,t (b2 )

bt

1

k =1

t

k ,t (bk )

k ,t k

1,k

bk

1

A. Doucet (MLSS Sept. 2012)

Sept. 2012

34 / 136

112

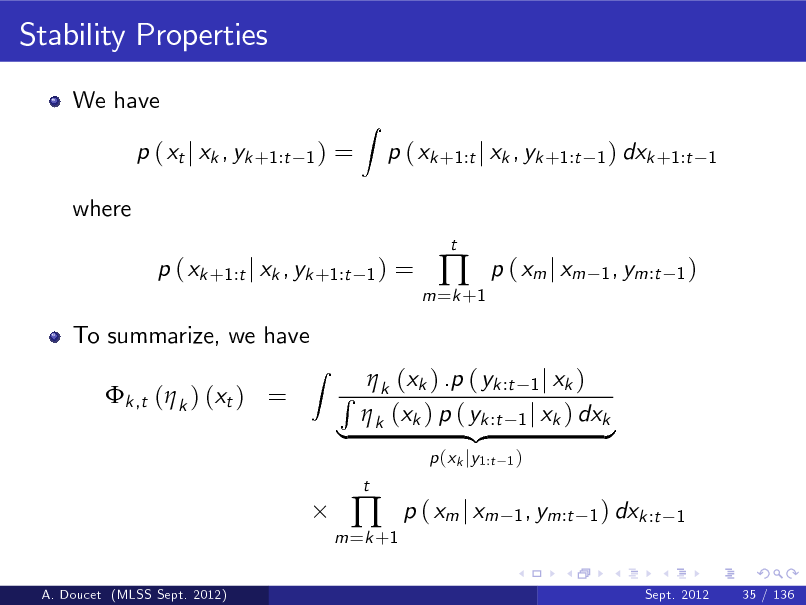

Stability Properties

We have p ( xt j xk , yk +1:t where p ( xk +1:t j xk , yk +1:t

1) 1) =

Z

p ( xk +1:t j xk , yk +1:t

t

1 ) dxk +1:t 1

=

m =k +1

p ( xm j xm

1 , ym:t 1 )

A. Doucet (MLSS Sept. 2012)

Sept. 2012

35 / 136

113

Stability Properties

We have p ( xt j xk , yk +1:t where p ( xk +1:t j xk , yk +1:t To summarize, we have k ,t ( k ) (xt ) =

Z

1) 1) =

Z

p ( xk +1:t j xk , yk +1:t

t

1 ) dxk +1:t 1

=

m =k +1

p ( xm j xm

1 , ym:t 1 )

(x ) .p ( yk :t 1 j xk ) R k k (xk ) p ( yk :t 1 j xk ) dxk | k {z }

m =k +1

t

p (xk jy1:t

1)

p ( xm j xm

1 , ym:t 1 ) dxk :t 1

A. Doucet (MLSS Sept. 2012)

Sept. 2012

35 / 136

114

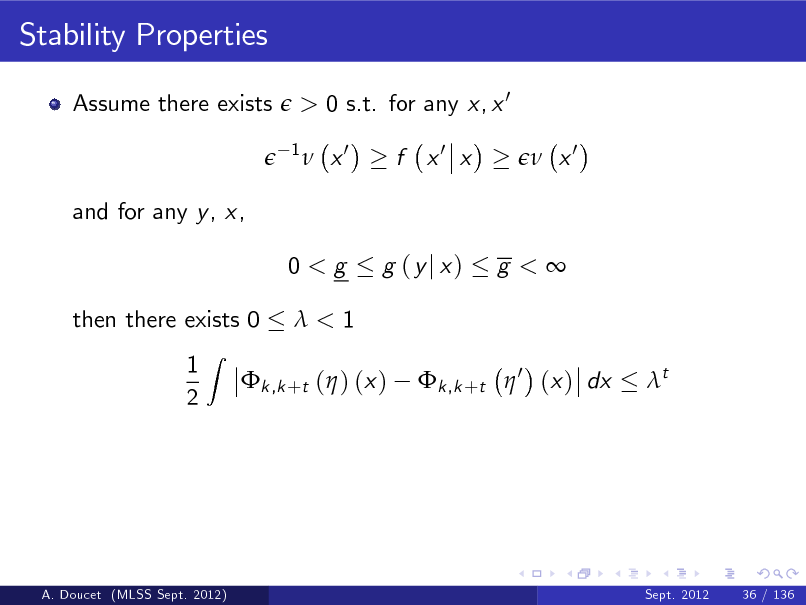

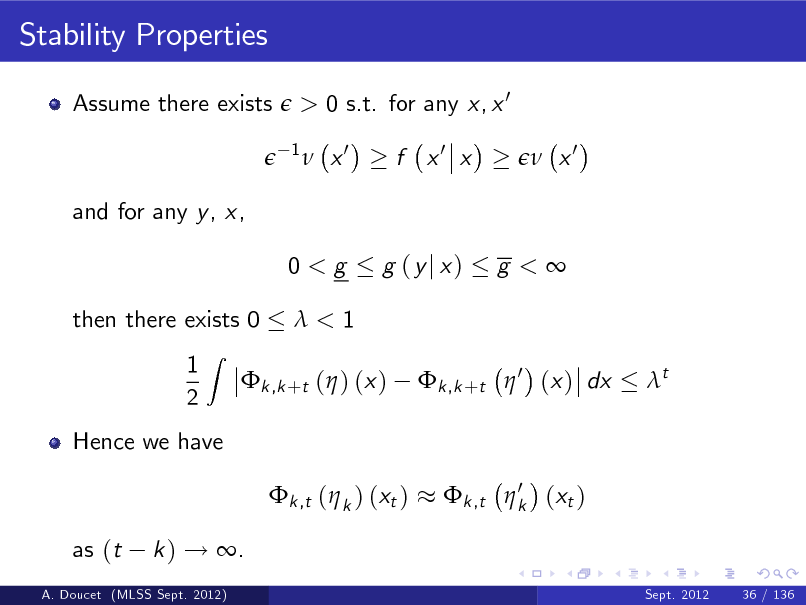

Stability Properties

Assume there exists

> 0 s.t. for any x, x 0

1

x0

f x0 x

x0

and for any y , x, 0<g then there exists 0 1 2

Z

g (yj x)

g <

<1 k ,k +t 0 (x ) dx t

k ,k +t ( ) (x )

A. Doucet (MLSS Sept. 2012)

Sept. 2012

36 / 136

115

Stability Properties

Assume there exists

> 0 s.t. for any x, x 0

1

x0

f x0 x

x0

and for any y , x, 0<g then there exists 0 1 2

Z

g (yj x)

g <

<1 k ,k +t 0 (x ) dx t

k ,k +t ( ) (x )

Hence we have k ,t ( k ) (xt ) as (t k ) ! .

Sept. 2012 36 / 136

0 k ,t k (xt )

A. Doucet (MLSS Sept. 2012)

116

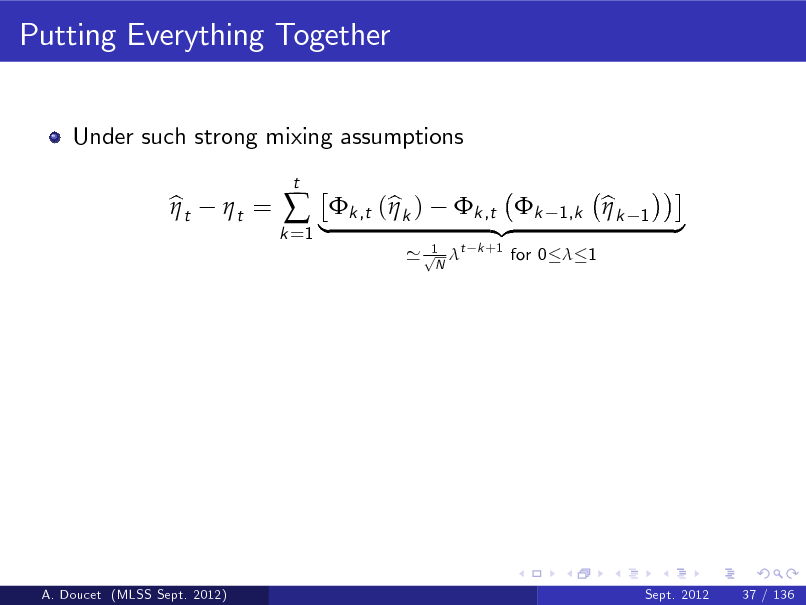

Putting Everything Together

Under such strong mixing assumptions bt t = |k ,t (bk )

t

k =1

1 ' p t N

k ,t k {z

k +1

1,k

for 0 1

bk

1

}

A. Doucet (MLSS Sept. 2012)

Sept. 2012

37 / 136

117

![Slide: Putting Everything Together

Under such strong mixing assumptions bt t = |k ,t (bk ) t jp ]

1/p t

k =1

1 ' p t N

We can then obtain results such as there exists B1 < s.t. E [j b t B1 c ( p ) k k p N

k ,t k {z

k +1

1,k

for 0 1

bk

1

}

A. Doucet (MLSS Sept. 2012)

Sept. 2012

37 / 136](https://yosinski.com/mlss12/media/slides/MLSS-2012-Doucet-Sequential-Monte-Carlo-Methods_118.png)

Putting Everything Together

Under such strong mixing assumptions bt t = |k ,t (bk ) t jp ]

1/p t

k =1

1 ' p t N

We can then obtain results such as there exists B1 < s.t. E [j b t B1 c ( p ) k k p N

k ,t k {z

k +1

1,k

for 0 1

bk

1

}

A. Doucet (MLSS Sept. 2012)

Sept. 2012

37 / 136

118

![Slide: Putting Everything Together

Under such strong mixing assumptions bt t = |k ,t (bk ) t jp ]

1/p t

k =1

1 ' p t N

We can then obtain results such as there exists B1 < s.t. E [j b t B1 c ( p ) k k p N

k ,t k {z

k +1

1,k

for 0 1

bk

1

}

Much work has been done recently on removing such strong mixing assumptions; e.g. Whiteley (2012) for much weaker and realistic assumptions.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

37 / 136](https://yosinski.com/mlss12/media/slides/MLSS-2012-Doucet-Sequential-Monte-Carlo-Methods_119.png)

Putting Everything Together

Under such strong mixing assumptions bt t = |k ,t (bk ) t jp ]

1/p t

k =1

1 ' p t N

We can then obtain results such as there exists B1 < s.t. E [j b t B1 c ( p ) k k p N

k ,t k {z

k +1

1,k

for 0 1

bk

1

}

Much work has been done recently on removing such strong mixing assumptions; e.g. Whiteley (2012) for much weaker and realistic assumptions.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

37 / 136

119

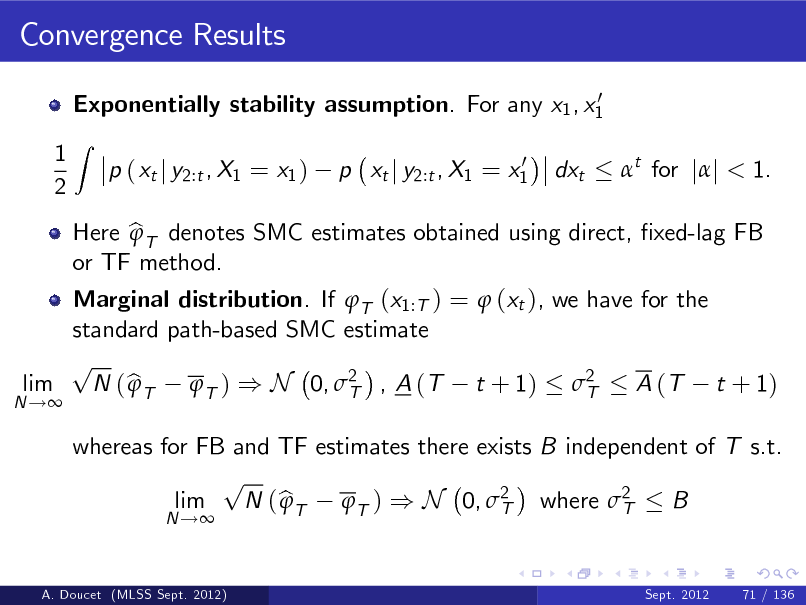

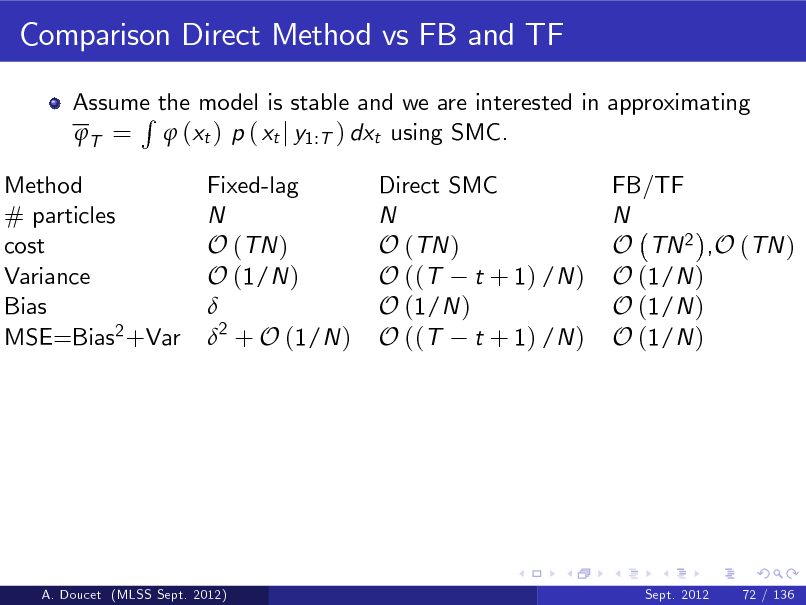

Summary

SMC provide consistent estimates under weak assumptions.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

38 / 136

120

Summary

SMC provide consistent estimates under weak assumptions. Under stability assumptions, we have uniform in time stability of the SMC estimates of fp ( xt j y1:t )gt 1 .

A. Doucet (MLSS Sept. 2012)

Sept. 2012

38 / 136

121

Summary

SMC provide consistent estimates under weak assumptions. Under stability assumptions, we have uniform in time stability of the SMC estimates of fp ( xt j y1:t )gt 1 . Under stability assumptions, the relative variance of the SMC estimate of fp (y1:t )gt 1 only increases linearly with t.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

38 / 136

122

Summary

SMC provide consistent estimates under weak assumptions. Under stability assumptions, we have uniform in time stability of the SMC estimates of fp ( xt j y1:t )gt 1 . Under stability assumptions, the relative variance of the SMC estimate of fp (y1:t )gt 1 only increases linearly with t.

Even under stability assumptions, one cannot expect to obtain uniform in time stability for SMC estimates of fp ( x1:t j y1:t )gt is due to the degeneracy problem.

1

; this

A. Doucet (MLSS Sept. 2012)

Sept. 2012

38 / 136

123

Summary

SMC provide consistent estimates under weak assumptions. Under stability assumptions, we have uniform in time stability of the SMC estimates of fp ( xt j y1:t )gt 1 . Under stability assumptions, the relative variance of the SMC estimate of fp (y1:t )gt 1 only increases linearly with t.

Even under stability assumptions, one cannot expect to obtain uniform in time stability for SMC estimates of fp ( x1:t j y1:t )gt is due to the degeneracy problem.

1

; this

Is it possible to Q1: eliminate, Q2: mitigate the degeneracy problem?

A. Doucet (MLSS Sept. 2012)

Sept. 2012

38 / 136

124

Summary

SMC provide consistent estimates under weak assumptions. Under stability assumptions, we have uniform in time stability of the SMC estimates of fp ( xt j y1:t )gt 1 . Under stability assumptions, the relative variance of the SMC estimate of fp (y1:t )gt 1 only increases linearly with t.

Even under stability assumptions, one cannot expect to obtain uniform in time stability for SMC estimates of fp ( x1:t j y1:t )gt is due to the degeneracy problem. Answer: Q1: no, Q2: yes.

1

; this

Is it possible to Q1: eliminate, Q2: mitigate the degeneracy problem?

A. Doucet (MLSS Sept. 2012)

Sept. 2012

38 / 136

125

Is Resampling Really Necessary?

Resampling is the source of the degeneracy problem and might appear wasteful.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

39 / 136

126

![Slide: Is Resampling Really Necessary?

Resampling is the source of the degeneracy problem and might appear wasteful. The resampling step is an unbiased operation E [ p ( x1:t j y1:t )j p ( x1:t j y1:t )] = p ( x1:t j y1:t ) b e e V

Z

but clearly it introduces some errors locally in time. That is for any test function, we have V

Z

(x1:t ) p ( x1:t j y1:t ) dx1:t b

(x1:t ) p ( x1:t j y1:t ) dx1:t e

A. Doucet (MLSS Sept. 2012)

Sept. 2012

39 / 136](https://yosinski.com/mlss12/media/slides/MLSS-2012-Doucet-Sequential-Monte-Carlo-Methods_127.png)

Is Resampling Really Necessary?

Resampling is the source of the degeneracy problem and might appear wasteful. The resampling step is an unbiased operation E [ p ( x1:t j y1:t )j p ( x1:t j y1:t )] = p ( x1:t j y1:t ) b e e V

Z

but clearly it introduces some errors locally in time. That is for any test function, we have V

Z

(x1:t ) p ( x1:t j y1:t ) dx1:t b

(x1:t ) p ( x1:t j y1:t ) dx1:t e

A. Doucet (MLSS Sept. 2012)

Sept. 2012

39 / 136

127

![Slide: Is Resampling Really Necessary?

Resampling is the source of the degeneracy problem and might appear wasteful. The resampling step is an unbiased operation E [ p ( x1:t j y1:t )j p ( x1:t j y1:t )] = p ( x1:t j y1:t ) b e e V

Z

but clearly it introduces some errors locally in time. That is for any test function, we have V

Z

What about eliminating the resampling step?

(x1:t ) p ( x1:t j y1:t ) dx1:t b

(x1:t ) p ( x1:t j y1:t ) dx1:t e

A. Doucet (MLSS Sept. 2012)

Sept. 2012

39 / 136](https://yosinski.com/mlss12/media/slides/MLSS-2012-Doucet-Sequential-Monte-Carlo-Methods_128.png)

Is Resampling Really Necessary?

Resampling is the source of the degeneracy problem and might appear wasteful. The resampling step is an unbiased operation E [ p ( x1:t j y1:t )j p ( x1:t j y1:t )] = p ( x1:t j y1:t ) b e e V

Z

but clearly it introduces some errors locally in time. That is for any test function, we have V

Z

What about eliminating the resampling step?

(x1:t ) p ( x1:t j y1:t ) dx1:t b

(x1:t ) p ( x1:t j y1:t ) dx1:t e

A. Doucet (MLSS Sept. 2012)

Sept. 2012

39 / 136

128

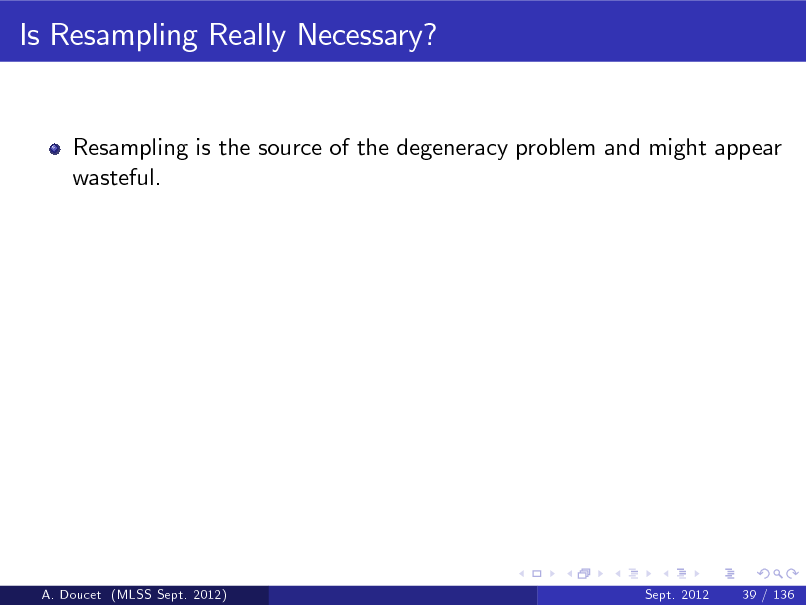

Sequential Importance Samping: SMC Without Resampling

In this case, the estimate of the posterior is pSIS ( x1:t j y1:t ) = b p y1:t j X1:t

(i )

N

i =1

Wt

t

(i )

X (i ) (x1:t )

1:t

where X1:t

(i )

p (x1:t ) and Wt

(i )

k =1

g

yk j Xt

(i )

.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

40 / 136

129

Sequential Importance Samping: SMC Without Resampling

In this case, the estimate of the posterior is pSIS ( x1:t j y1:t ) = b p y1:t j X1:t

(i )

N

i =1

Wt

t

(i )

X (i ) (x1:t )

1:t

where X1:t

(i )

p (x1:t ) and Wt

(i )

k =1

g

yk j Xt

(i )

.

In this case, the marginal likelihood estimate is pSIS (y1:t ) = b 1 N

i =1

p

N

y1:t j X1:t

(i )

A. Doucet (MLSS Sept. 2012)

Sept. 2012

40 / 136

130

Sequential Importance Samping: SMC Without Resampling

In this case, the estimate of the posterior is pSIS ( x1:t j y1:t ) = b p y1:t j X1:t

(i )

N

i =1

Wt

t

(i )

X (i ) (x1:t )

1:t

where X1:t

(i )

p (x1:t ) and Wt

(i )

k =1

g

yk j Xt

(i )

.

In this case, the marginal likelihood estimate is pSIS (y1:t ) = b 1 N

i =1

p

t

N

y1:t j X1:t

(i )

Relative variance of p y1:t j X1:t exponentially fast...

A. Doucet (MLSS Sept. 2012)

(i )

=

k =1

g

yk j Xt

(i )

is increasing

Sept. 2012

40 / 136

131

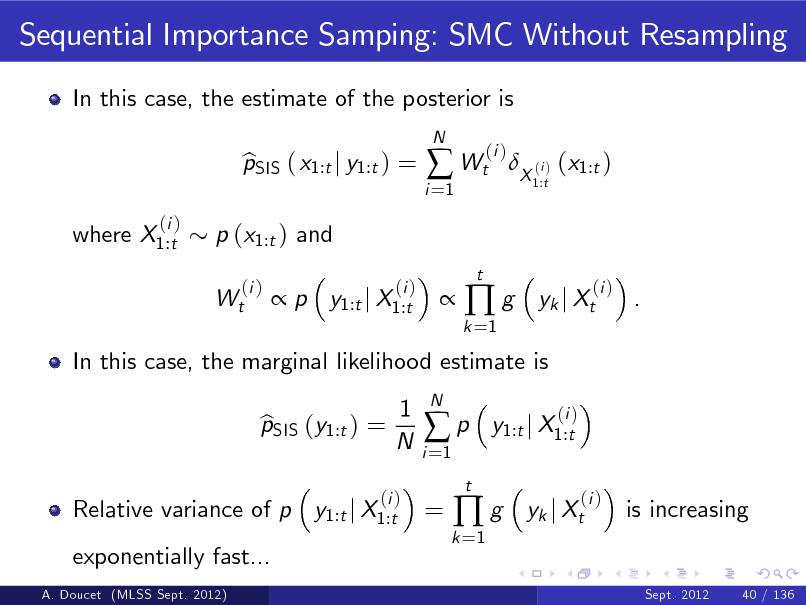

SIS For Stochastic Volatility Model

1000

500

0 - 25 1000

- 20

- 15

- 10

-5

0

500

0 - 25 100

- 20

- 15

- 10

-5

0

50

0 - 25

- 20

Importance Weights (base 10 logarithm)

- 15

- 10

-5

0

Figure: Histograms of log10 Wt t = 100 (bottom).

(i )

for t = 1 (top), t = 50 (middle) and

The algorithm performance collapse as t increases as expected.

A. Doucet (MLSS Sept. 2012) Sept. 2012 41 / 136

132

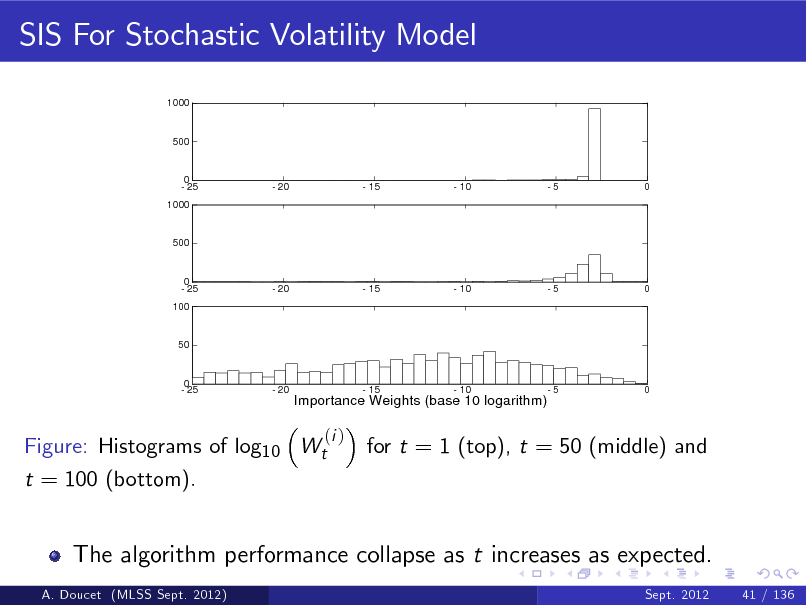

Central Limit Theorems

For both SIS and SMC, we have a CLT for the estimates of the marginal likelihood

p p

N

N

pSIS (y1:t ) b p (y1:t ) pSMC (y1:t ) b p (y1:t )

1 1

) N 0, 2 t,SIS , ) N 0, 2 t,SMC .

A. Doucet (MLSS Sept. 2012)

Sept. 2012

42 / 136

133

Central Limit Theorems

For both SIS and SMC, we have a CLT for the estimates of the marginal likelihood

p p

N

N

The variance expressions are 2 t,SIS =

pSIS (y1:t ) b p (y1:t ) pSMC (y1:t ) b p (y1:t )

1 1

) N 0, 2 t,SIS , ) N 0, 2 t,SMC .

2 t,SMC =

R

=

R 2 p ( y1:t jx1:t )p (x1:t )dx1:t p 2 ( x1:t jy1:t ) dx1:t 1 = 1 p (x1:t ) p 2 (y1:t ) R R p 2 ( x1 jy1:t ) 2( x p 1:k jy 1:t ) dx1 + t =2 p ( x dx1:k k R 2 (x 1 ) R 21:k 1 jy1:k 1 )f ( xk jxk 1 ) g ( y1 jx1 )(x1 )dx1 p ( yk :t jxk )p ( xk jy1:k 1 )dxk + t =2 t k p 2 (y 1 ) p 2 ( yk :t jy1:k 1 )

t

A. Doucet (MLSS Sept. 2012)

Sept. 2012

42 / 136

134

Central Limit Theorems

For both SIS and SMC, we have a CLT for the estimates of the marginal likelihood

p p

N

N

The variance expressions are 2 t,SIS =

pSIS (y1:t ) b p (y1:t ) pSMC (y1:t ) b p (y1:t )

1 1

) N 0, 2 t,SIS , ) N 0, 2 t,SMC .

2 t,SMC =

R

=

R 2 p ( y1:t jx1:t )p (x1:t )dx1:t p 2 ( x1:t jy1:t ) dx1:t 1 = 1 p (x1:t ) p 2 (y1:t ) R R p 2 ( x1 jy1:t ) 2( x p 1:k jy 1:t ) dx1 + t =2 p ( x dx1:k k R 2 (x 1 ) R 21:k 1 jy1:k 1 )f ( xk jxk 1 ) g ( y1 jx1 )(x1 )dx1 p ( yk :t jxk )p ( xk jy1:k 1 )dxk + t =2 t k p 2 (y 1 ) p 2 ( yk :t jy1:k 1 )

t

SMC breaks the integral over X t into t integrals over X .

A. Doucet (MLSS Sept. 2012) Sept. 2012 42 / 136

135

A Toy Example

Consider the case where f ( x 0 j x ) = (x 0 ) = N x 0 ; 0, 2 and 1 g ( y j x ) = N y ; 0, 1 2 where 2 > 1.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

43 / 136

136

A Toy Example

Consider the case where f ( x 0 j x ) = (x 0 ) = N x 0 ; 0, 2 and 1 g ( y j x ) = N y ; 0, 1 2 where 2 > 1. Assume we observe y1 = V V pSIS (y1:t ) b p (y1:t )

=

pSMC (y1:t ) b p (y1:t )

= yt = 0 then we have " 2 1 4 t,SIS = N N 22 1 " 2 t 4 t,SMC = N N 22 1

t /2

1 ,

1/2

#

1 .

#

A. Doucet (MLSS Sept. 2012)

Sept. 2012

43 / 136

137

A Toy Example

Consider the case where f ( x 0 j x ) = (x 0 ) = N x 0 ; 0, 2 and 1 g ( y j x ) = N y ; 0, 1 2 where 2 > 1. Assume we observe y1 = V V pSIS (y1:t ) b p (y1:t )

=

pSMC (y1:t ) b p (y1:t )

2 t,SIS N

= yt = 0 then we have " 2 1 4 t,SIS = N N 22 1 " 2 t 4 t,SMC = N N 22 1

2

t /2

1 ,

1/2

#

1 . 1023 particles to

#

If select 2 = 1.2 then it is necessary to use N obtain

= 10

2

for t = 1000.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

43 / 136

138

A Toy Example

Consider the case where f ( x 0 j x ) = (x 0 ) = N x 0 ; 0, 2 and 1 g ( y j x ) = N y ; 0, 1 2 where 2 > 1. Assume we observe y1 = V V pSIS (y1:t ) b p (y1:t )

=

pSMC (y1:t ) b p (y1:t )

2 t,SIS N

= yt = 0 then we have " 2 1 4 t,SIS = N N 22 1 " 2 t 4 t,SMC = N N 22 1

2

t /2

1 ,

1/2

#

1 . 1023 particles to

#

If select 2 = 1.2 then it is necessary to use N obtain

= 10

2

for t = 1000. 104 particles:

To obtain N = 10 2 , SMC requires only N improvement by 19 orders of magnitude!

A. Doucet (MLSS Sept. 2012)

2 t,SMC

Sept. 2012

43 / 136

139

Better Resampling Schemes

h i (i ) (i ) Better resampling steps can be designed such that E Nt = NWt h i (i ) (i ) (i ) but V Nt < NWt 1 Wt ; residual resampling, minimal entropy resampling etc. (Capp et al., 2005).

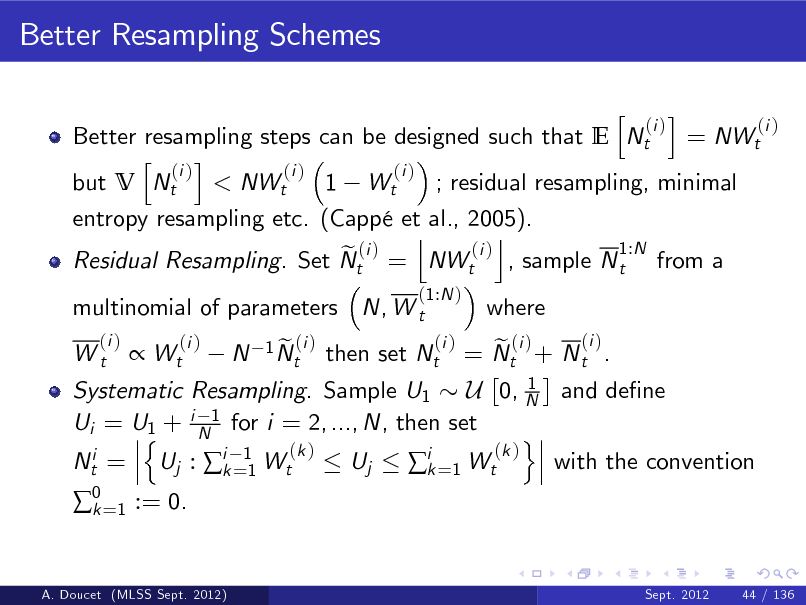

A. Doucet (MLSS Sept. 2012)

Sept. 2012

44 / 136

140

Better Resampling Schemes

h i (i ) (i ) Better resampling steps can be designed such that E Nt = NWt h i (i ) (i ) (i ) but V Nt < NWt 1 Wt ; residual resampling, minimal entropy resampling etc. (Capp et al., 2005). j k 1:N (i ) e (i ) Residual Resampling. Set Nt = NWt , sample N t from a

(1:N ) (i )

multinomial of parameters N, W t

(i ) Wt

where

Wt

(i )

N

1 N (i ) et

then set Nt

(i ) e (i ) = Nt + N t .

A. Doucet (MLSS Sept. 2012)

Sept. 2012

44 / 136

141

Better Resampling Schemes

h i (i ) (i ) Better resampling steps can be designed such that E Nt = NWt h i (i ) (i ) (i ) but V Nt < NWt 1 Wt ; residual resampling, minimal entropy resampling etc. (Capp et al., 2005). j k 1:N (i ) e (i ) Residual Resampling. Set Nt = NWt , sample N t from a

(1:N ) (i )

multinomial of parameters N, W t

(i ) Wt

where

(i ) e (i ) = Nt + N t . 1 Systematic Resampling. Sample U1 U 0, N and dene Ui = U1 + i N1 for i = 2, ..., N, then set n o (k ) (k ) Nti = Uj : ik =11 Wt Uj with the convention ik =1 Wt

Wt

(i )

N

1 N (i ) et

then set Nt

0 =1 := 0. k

A. Doucet (MLSS Sept. 2012)

Sept. 2012

44 / 136

142

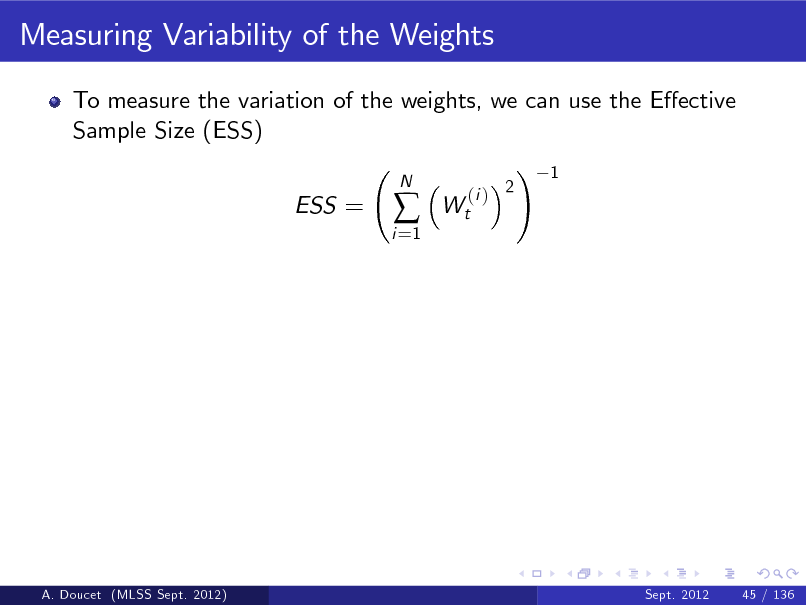

Measuring Variability of the Weights

To measure the variation of the weights, we can use the Eective Sample Size (ESS) ! 1 ESS =

i =1

N

Wt

(i ) 2

A. Doucet (MLSS Sept. 2012)

Sept. 2012

45 / 136

143

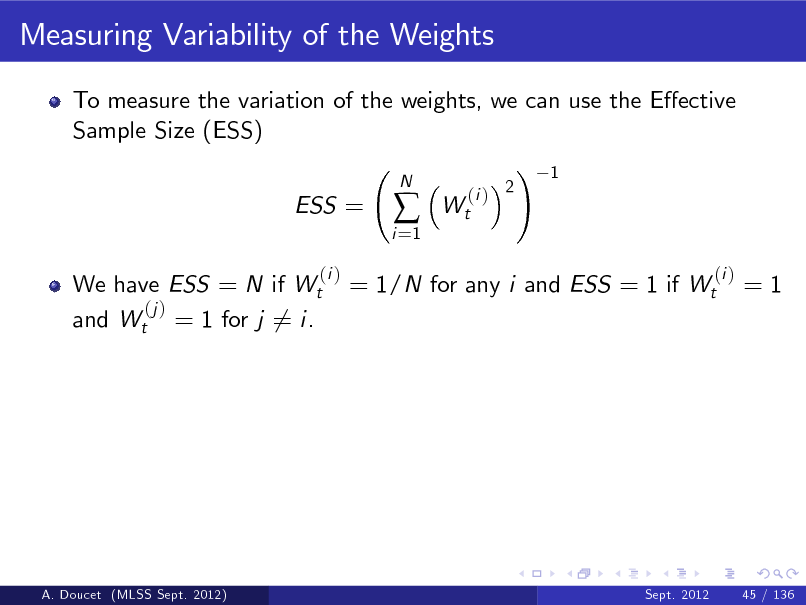

Measuring Variability of the Weights

To measure the variation of the weights, we can use the Eective Sample Size (ESS) ! 1 ESS =

(i )

i =1

N

Wt

(i ) 2

We have ESS = N if Wt (j ) and Wt = 1 for j 6= i.

= 1/N for any i and ESS = 1 if Wt

(i )

=1

A. Doucet (MLSS Sept. 2012)

Sept. 2012

45 / 136

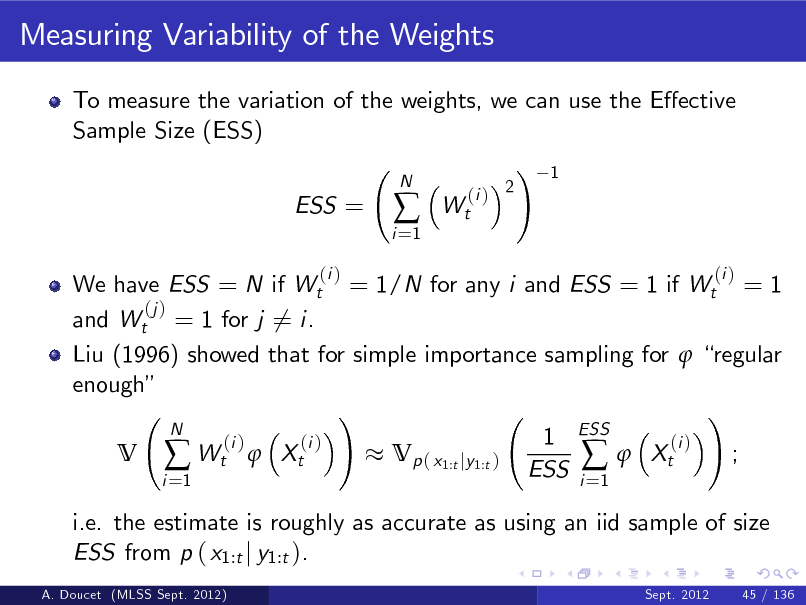

144

Measuring Variability of the Weights

To measure the variation of the weights, we can use the Eective Sample Size (ESS) ! 1 ESS =

(i )

i =1

N

Wt

(i ) 2

We have ESS = N if Wt = 1/N for any i and ESS = 1 if Wt = 1 (j ) and Wt = 1 for j 6= i. Liu (1996) showed that for simple importance sampling for regular enough ! ! N 1 ESS (i ) (i ) (i ) V Wt Xt Vp ( x1:t jy1:t ) Xt ; ESS i i =1 =1 i.e. the estimate is roughly as accurate as using an iid sample of size ESS from p ( x1:t j y1:t ).

A. Doucet (MLSS Sept. 2012) Sept. 2012 45 / 136

(i )

145

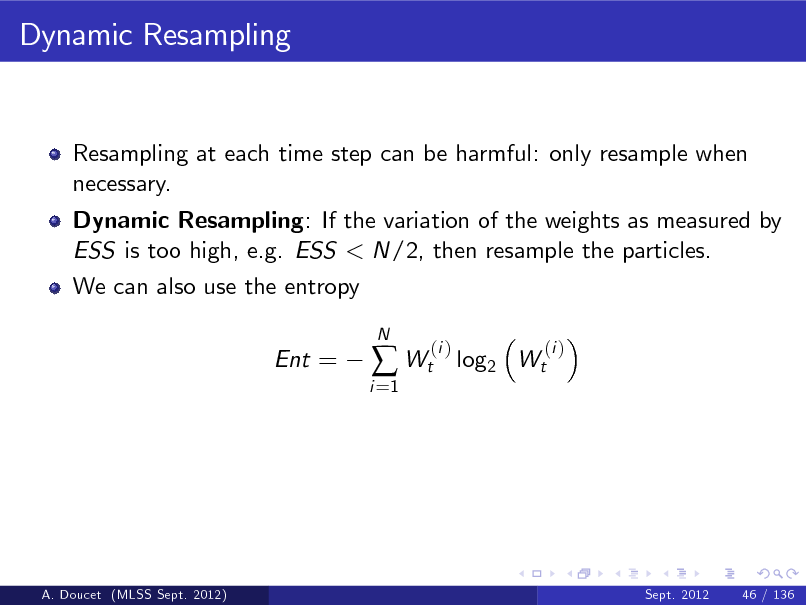

Dynamic Resampling

Resampling at each time step can be harmful: only resample when necessary.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

46 / 136

146

Dynamic Resampling

Resampling at each time step can be harmful: only resample when necessary. Dynamic Resampling: If the variation of the weights as measured by ESS is too high, e.g. ESS < N/2, then resample the particles.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

46 / 136

147

Dynamic Resampling

Resampling at each time step can be harmful: only resample when necessary. Dynamic Resampling: If the variation of the weights as measured by ESS is too high, e.g. ESS < N/2, then resample the particles. We can also use the entropy Ent =

i =1

Wt

N

(i )

log2 Wt

(i )

A. Doucet (MLSS Sept. 2012)

Sept. 2012

46 / 136

148

Dynamic Resampling

Resampling at each time step can be harmful: only resample when necessary. Dynamic Resampling: If the variation of the weights as measured by ESS is too high, e.g. ESS < N/2, then resample the particles. We can also use the entropy Ent =

i =1

Wt

(i )

N

(i )

log2 Wt

(i )

We have Ent = log2 (N ) if Wt = 1/N for any i. We have Ent = 0 (i ) (j ) if Wt = 1 and Wt = 1 for j 6= i.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

46 / 136

149

Improving the Sampling Step

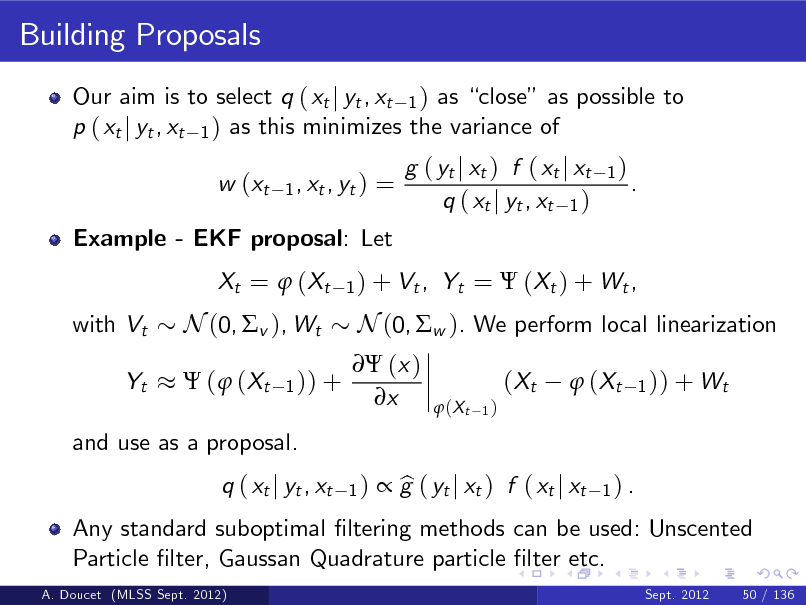

Bootstrap lter. Sample particles blindly according to the prior without taking into account the observation Very ine cient for vague prior/peaky likelihood.

A. Doucet (MLSS Sept. 2012)

Sept. 2012

47 / 136

150

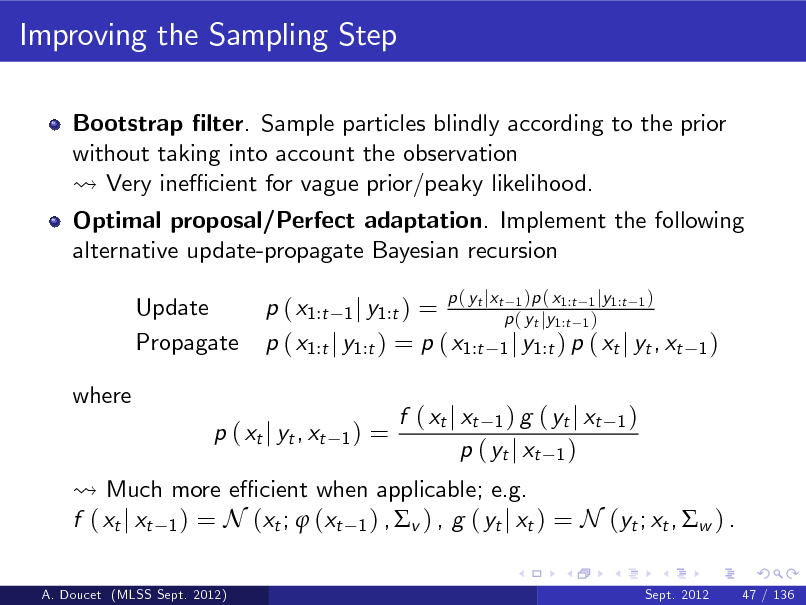

Improving the Sampling Step

Bootstrap lter. Sample particles blindly according to the prior without taking into account the observation Very ine cient for vague prior/peaky likelihood. Optimal proposal/Perfect adaptation. Implement the following alternative update-propagate Bayesian recursion Update Propagate where p ( xt j yt , xt

1)

tj t 1 1:t 1 j 1:t 1 p ( x1:t 1 j y1:t ) = p ( yt jy1:t 1 ) p ( x1:t j y1:t ) = p ( x1:t 1 j y1:t ) p ( xt j yt , xt

p( y x

)p ( x

y

)

1)

=

Much more e cient when applicable; e.g. f ( xt j xt 1 ) = N (xt ; (xt 1 ) , v ) , g ( yt j xt ) = N (yt ; xt , w ) .

A. Doucet (MLSS Sept. 2012) Sept. 2012 47 / 136

f ( xt j xt 1 ) g ( yt j xt p ( yt j xt 1 )

1)

151

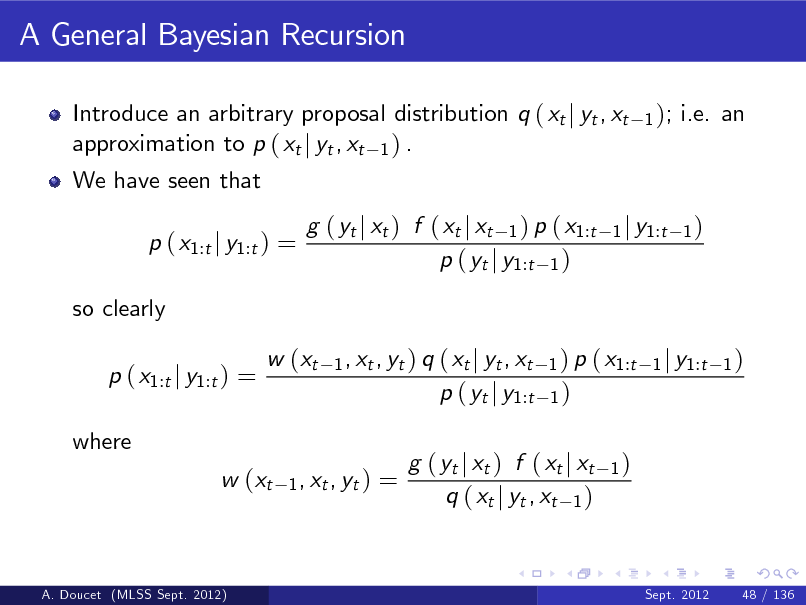

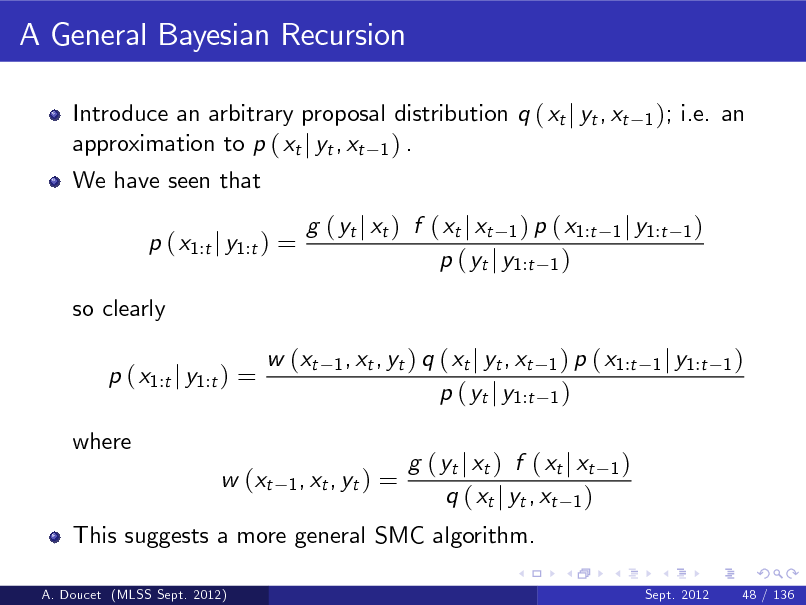

A General Bayesian Recursion

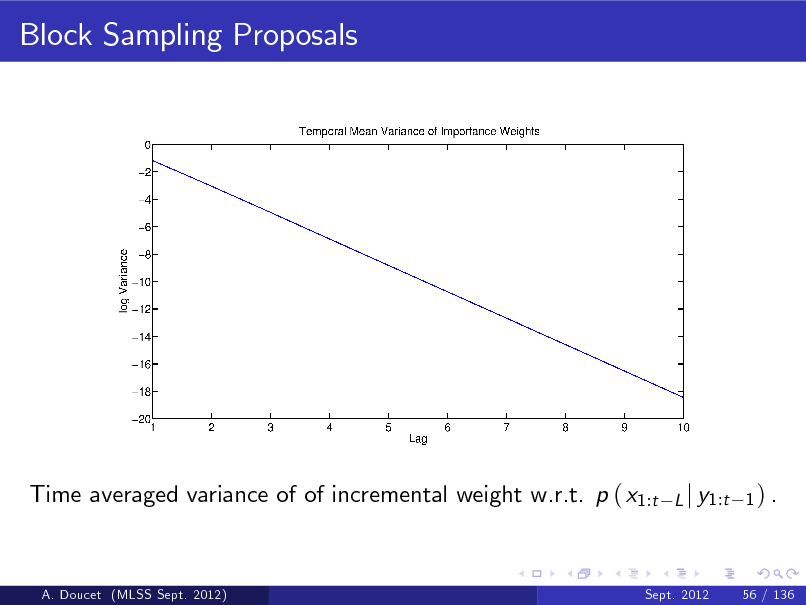

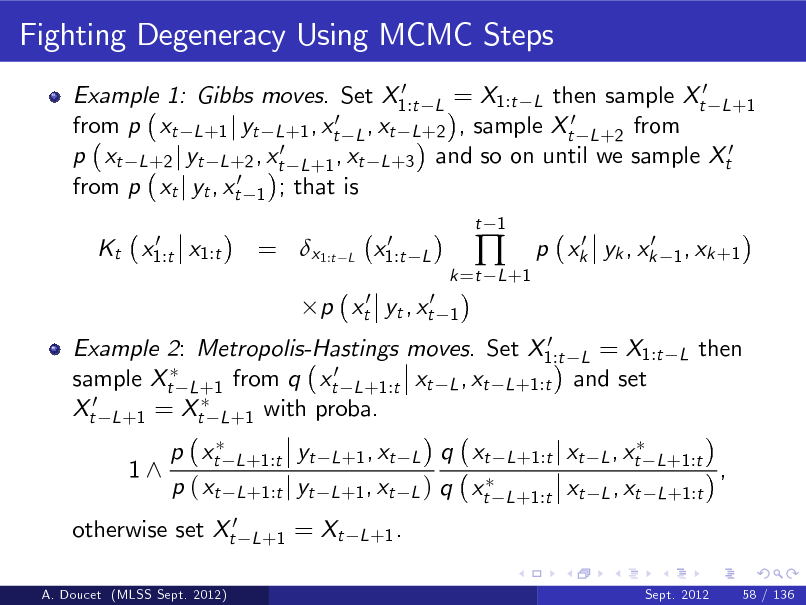

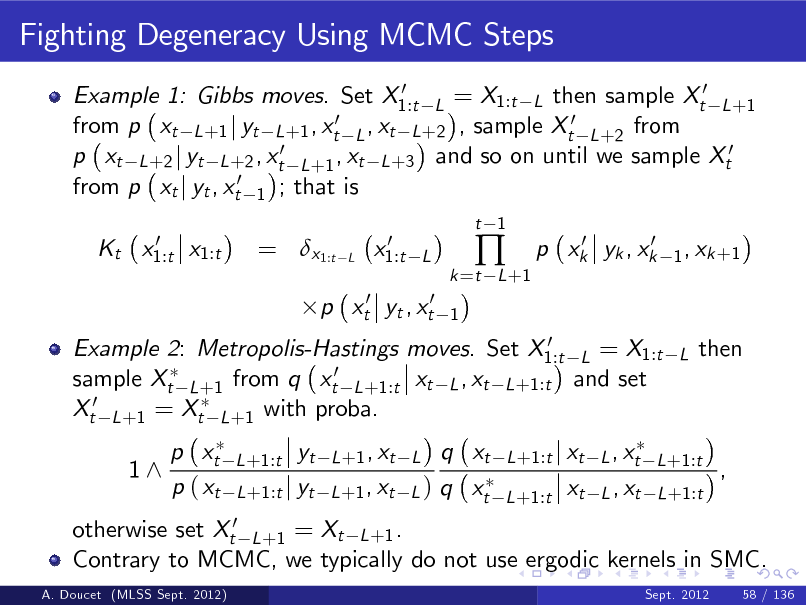

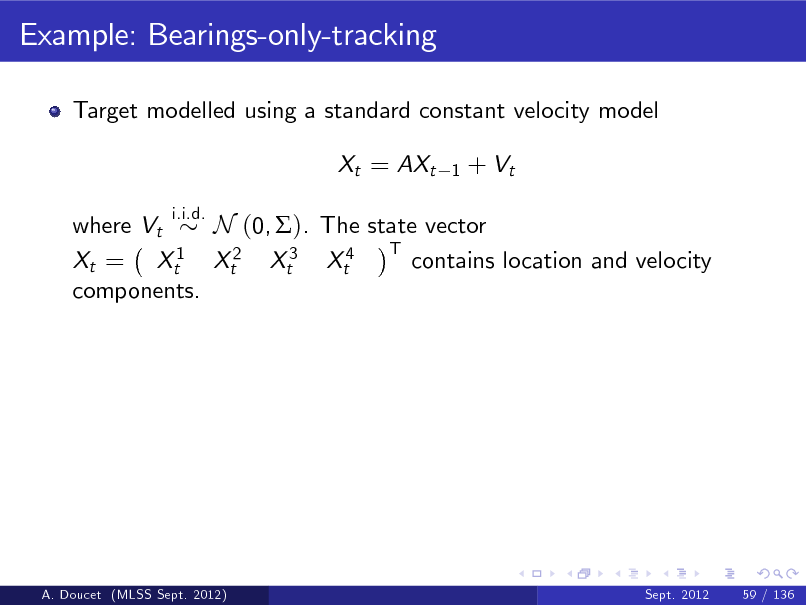

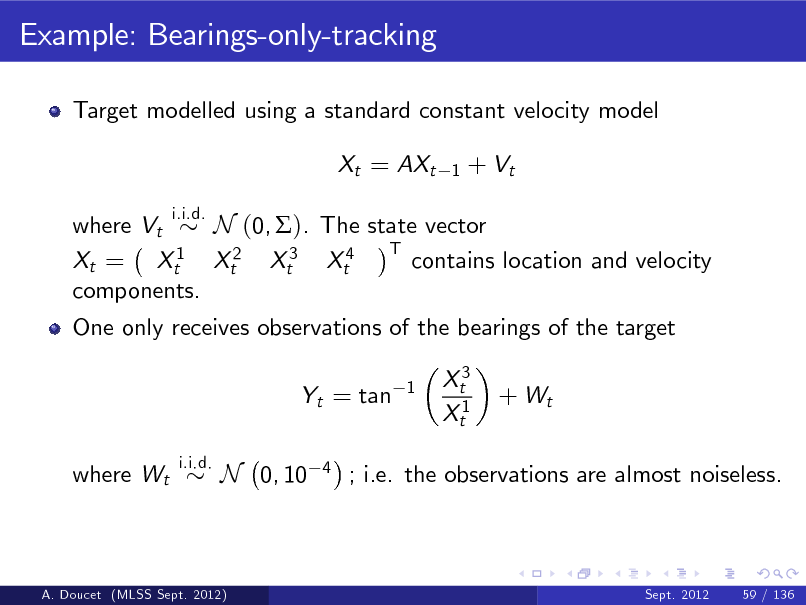

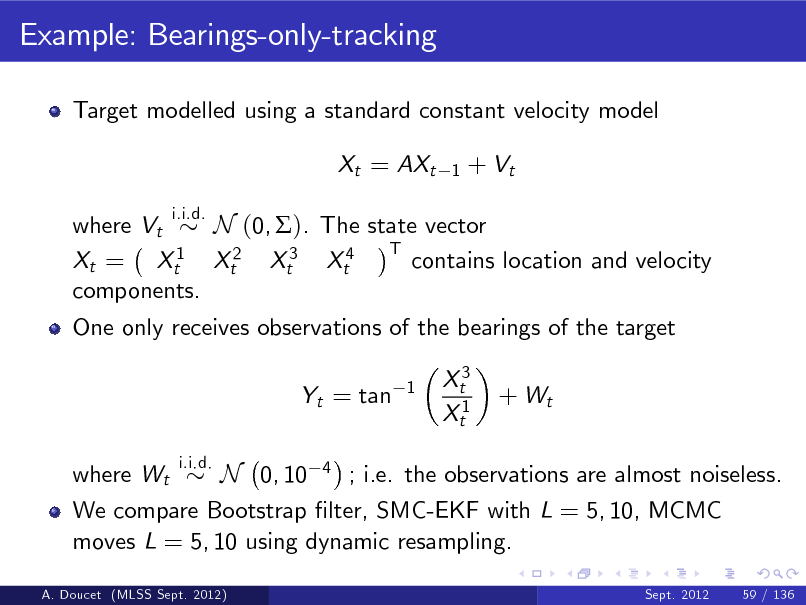

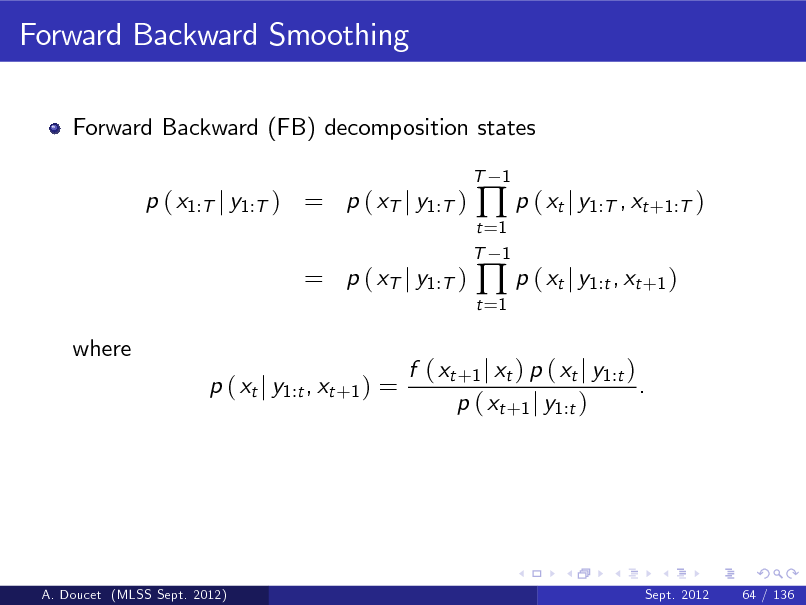

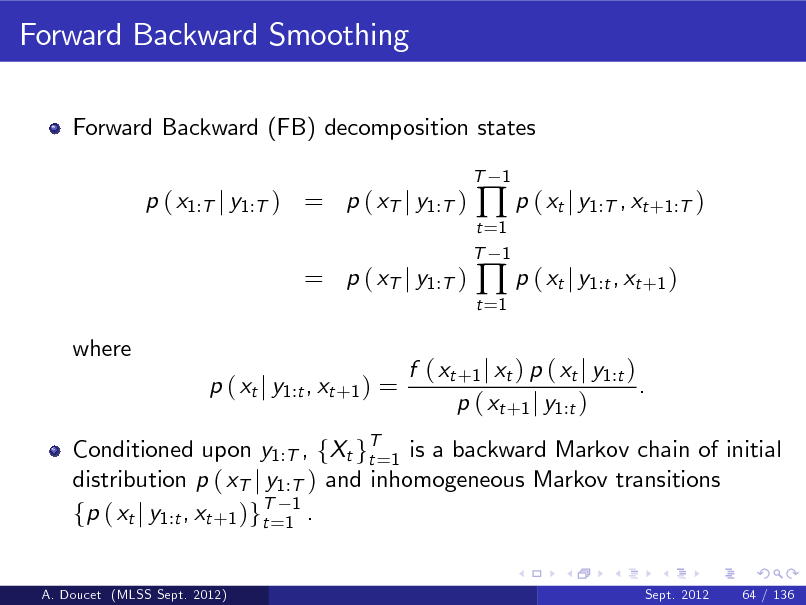

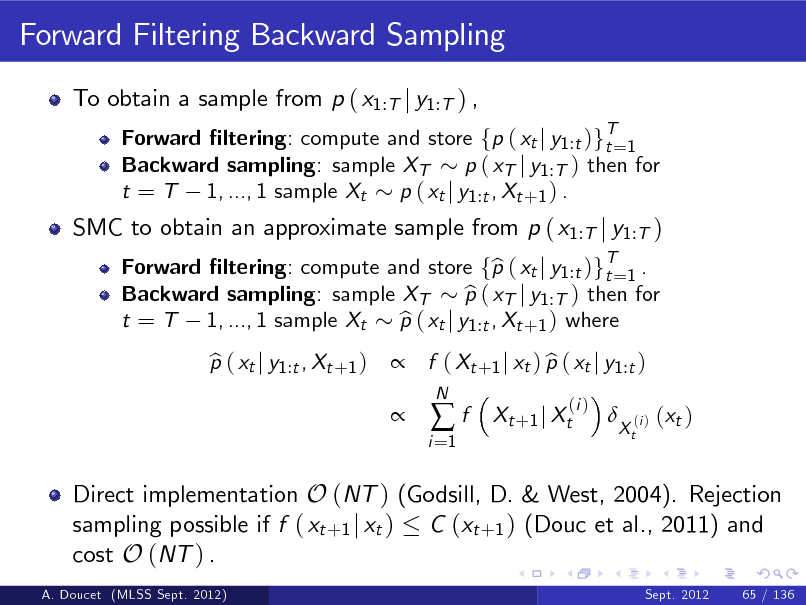

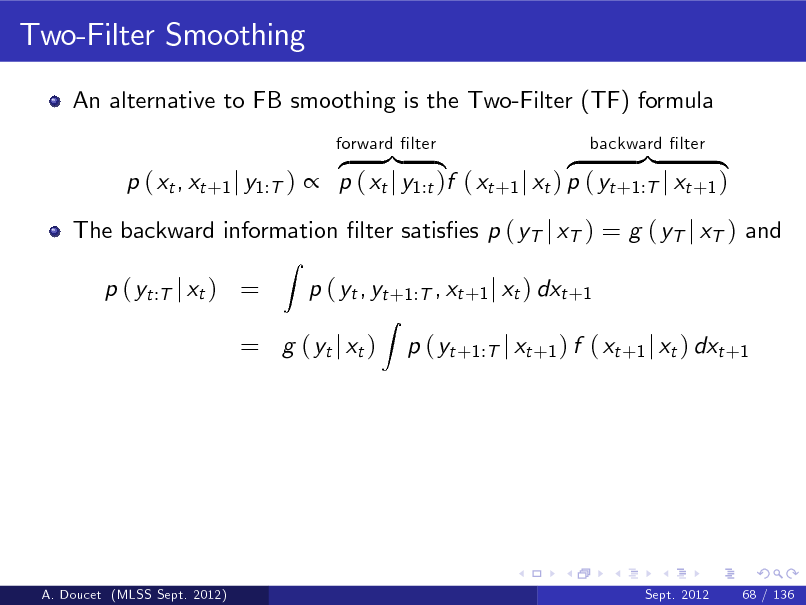

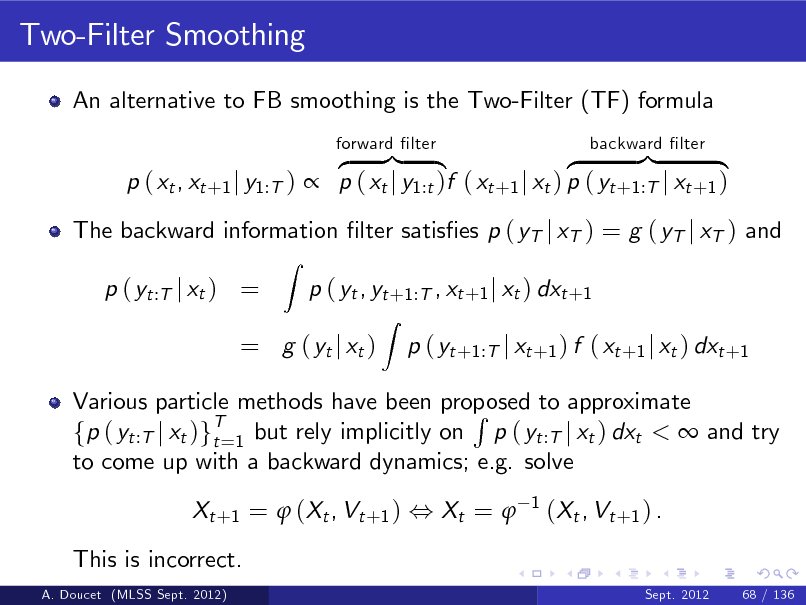

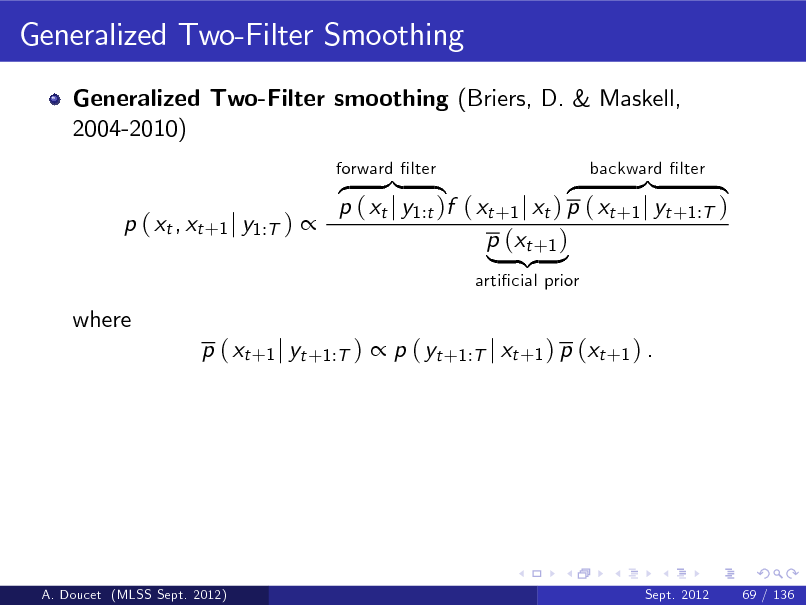

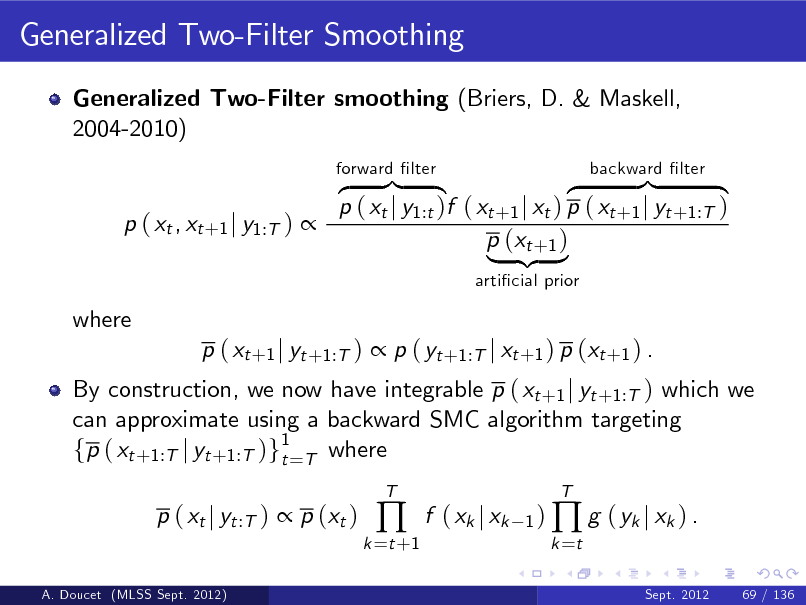

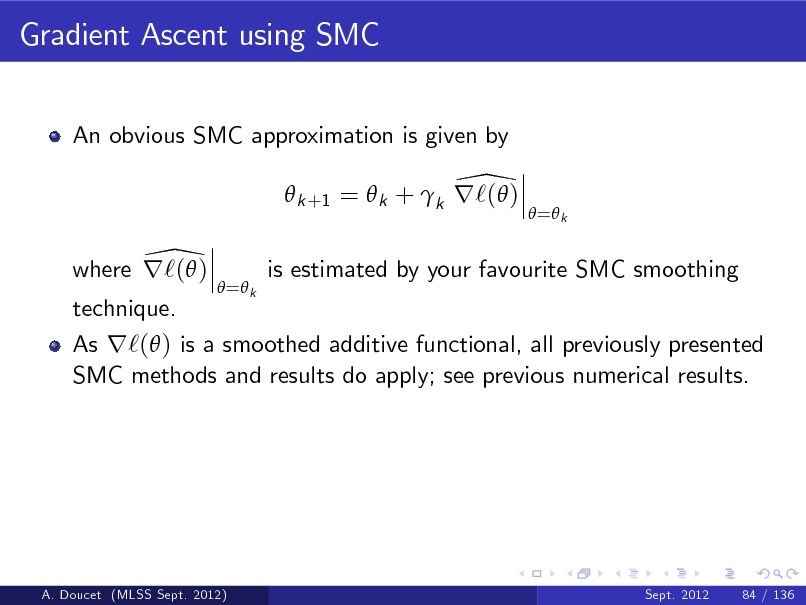

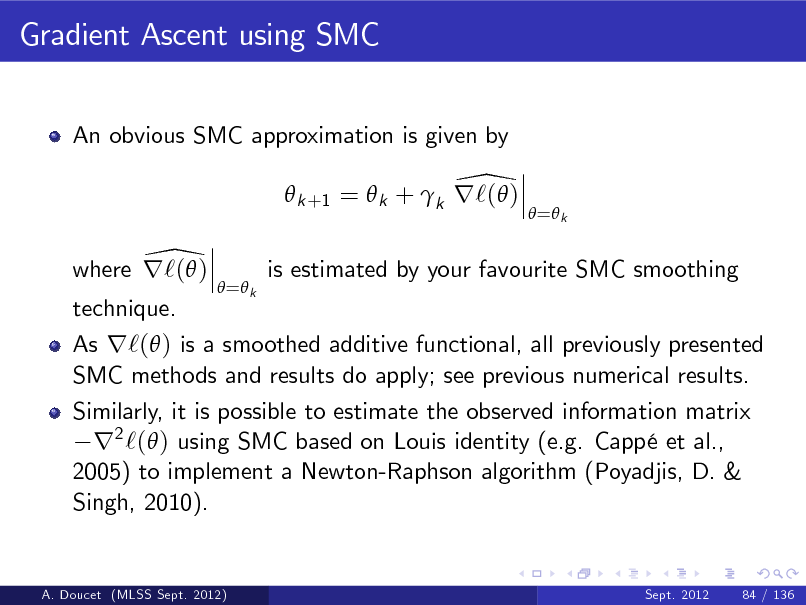

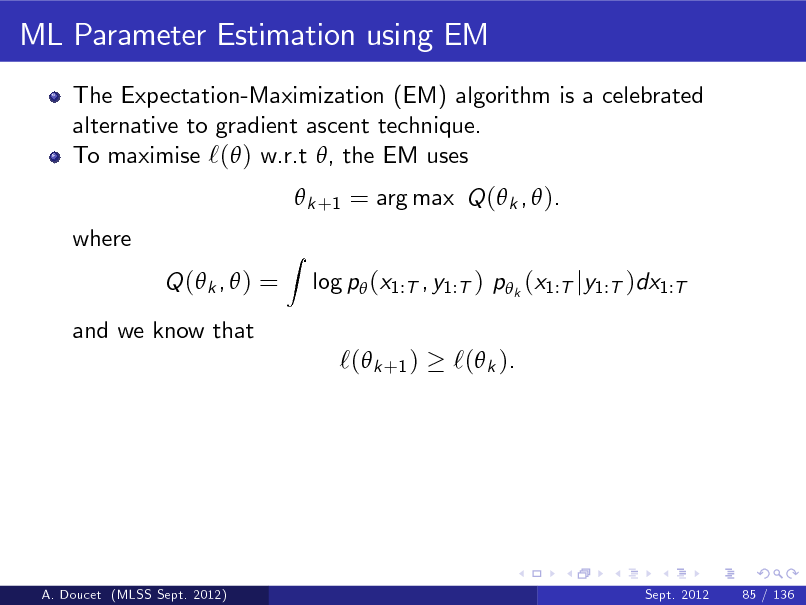

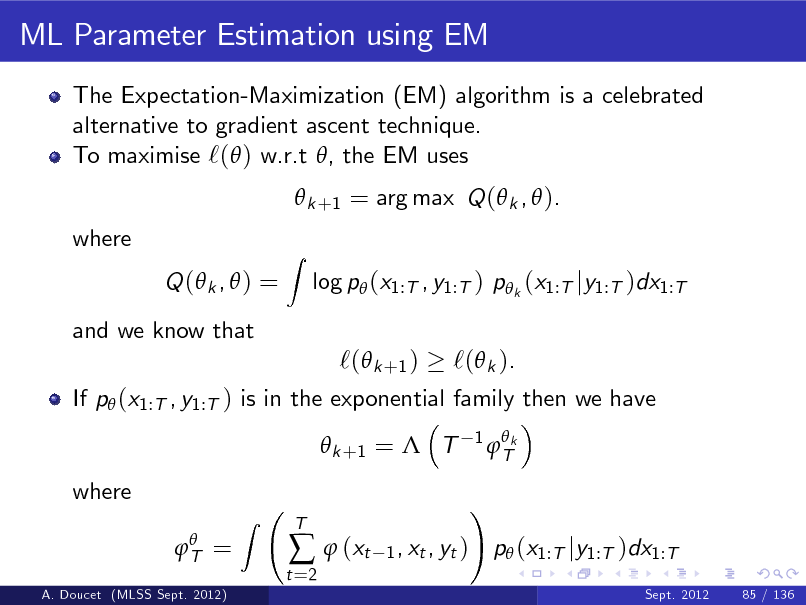

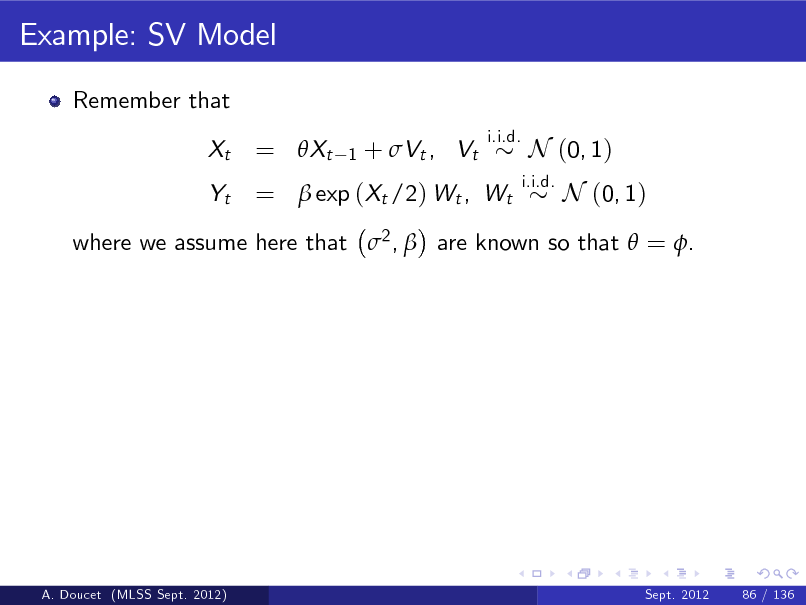

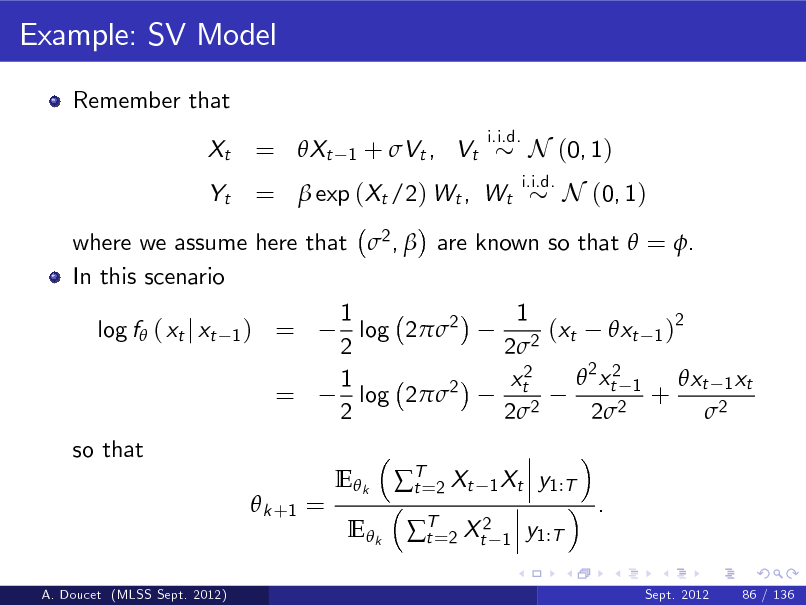

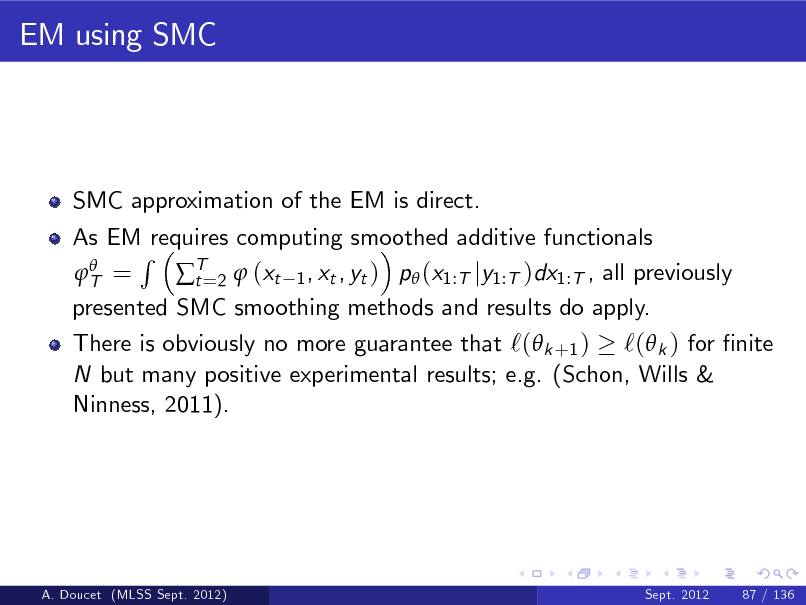

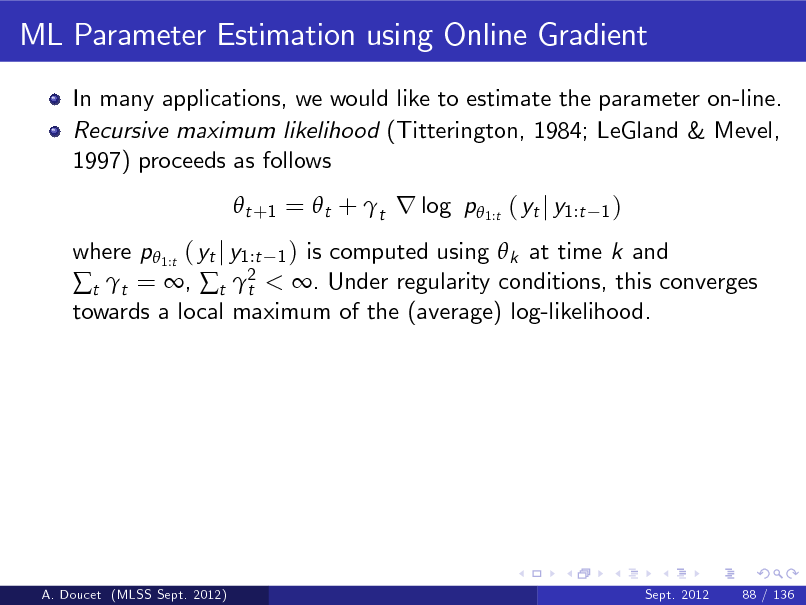

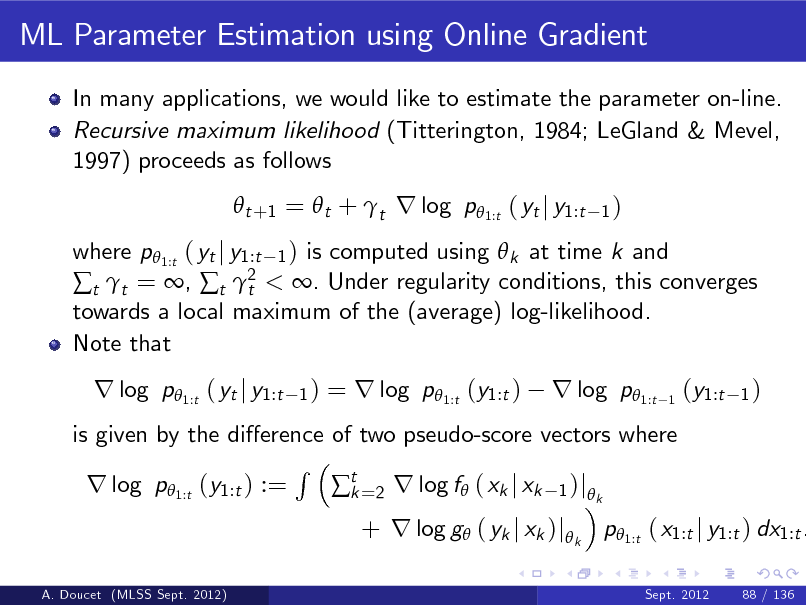

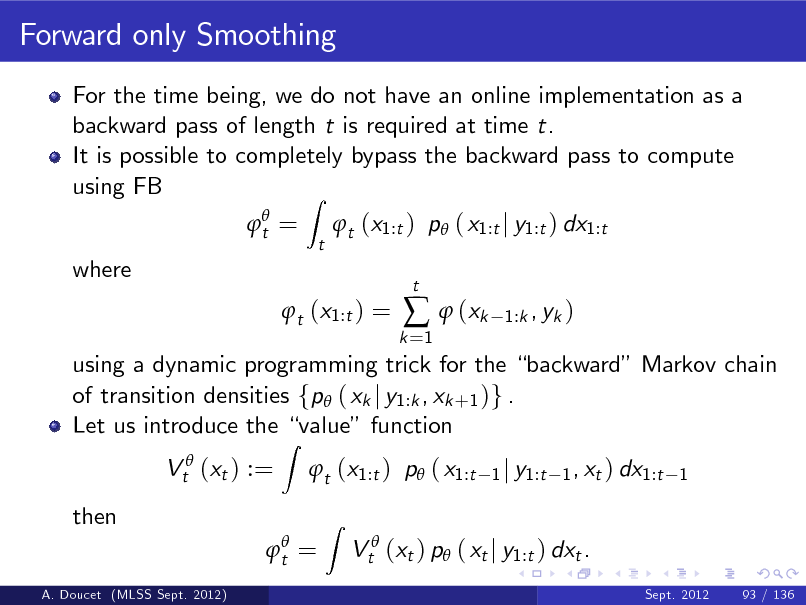

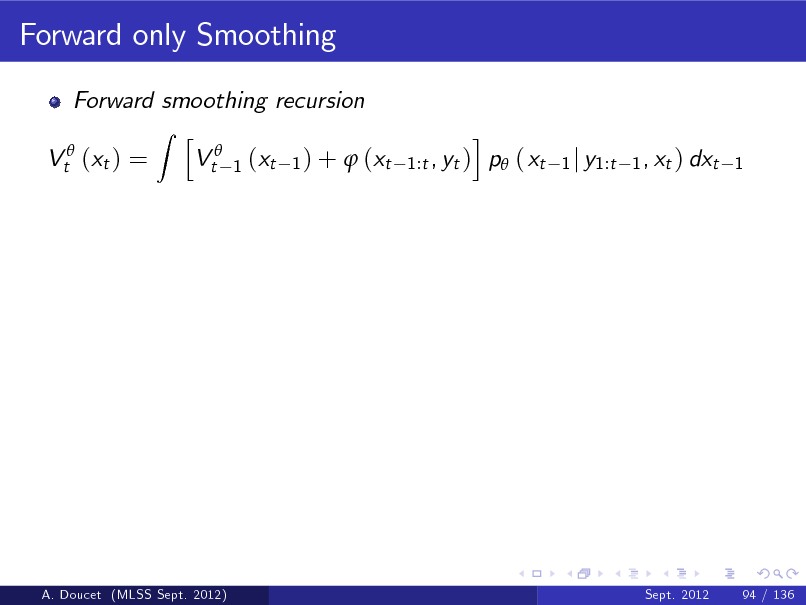

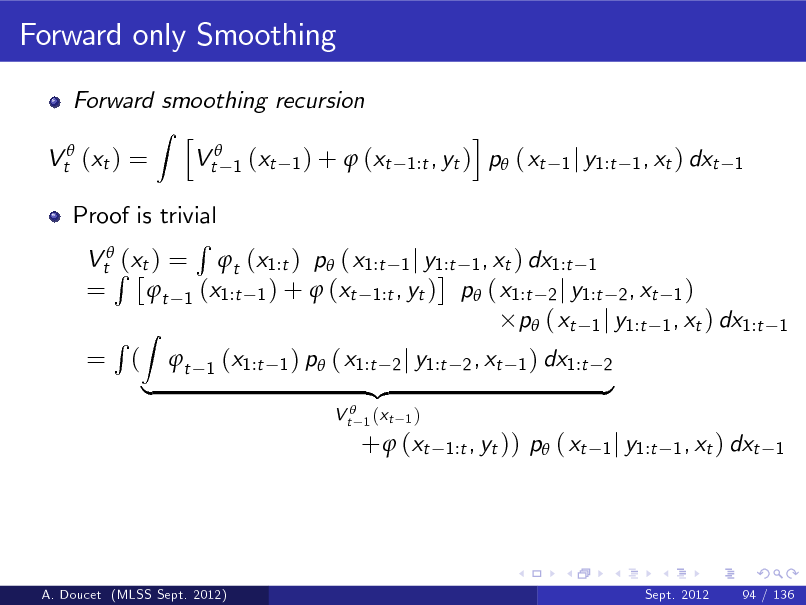

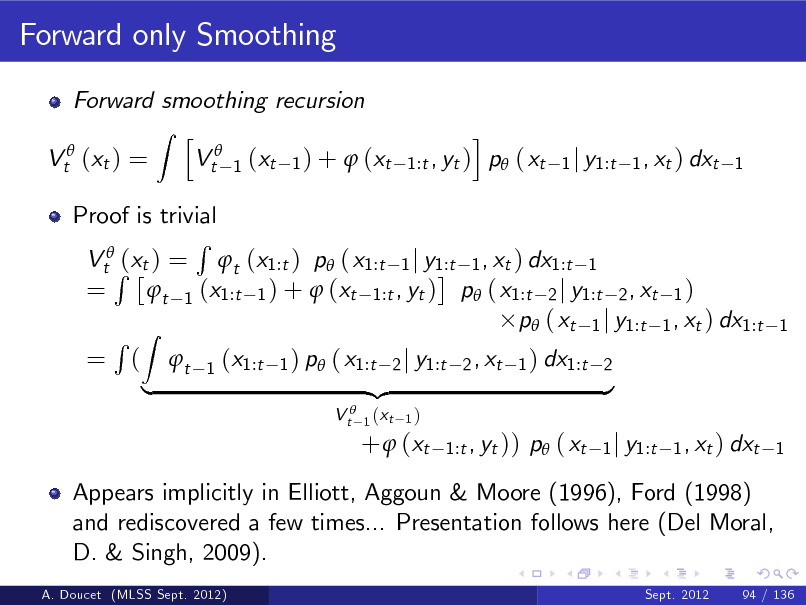

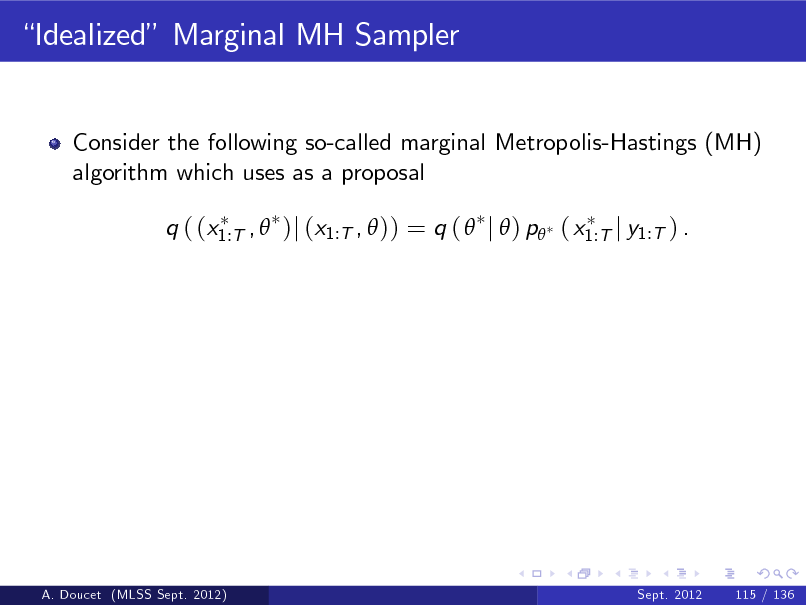

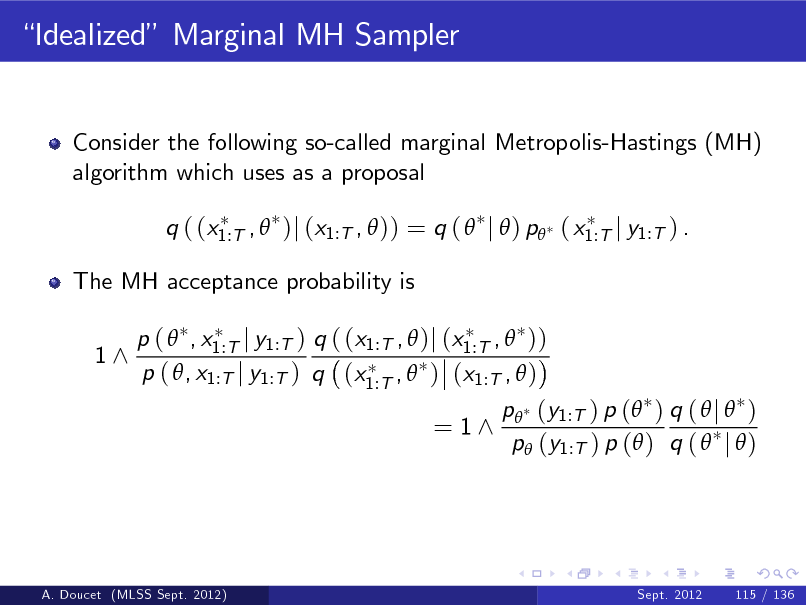

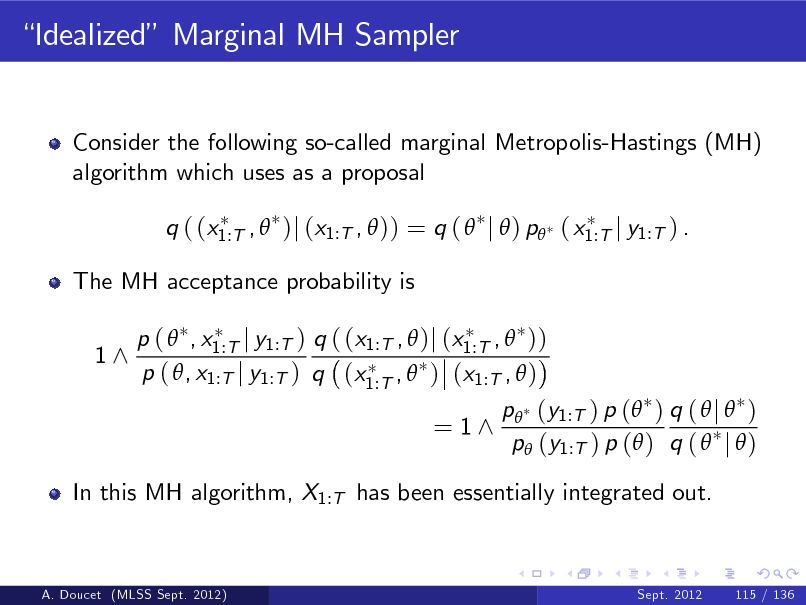

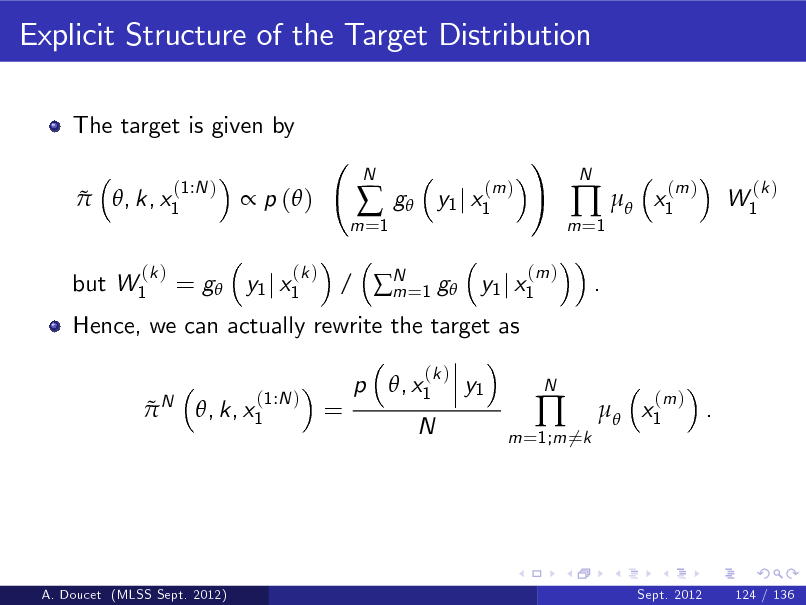

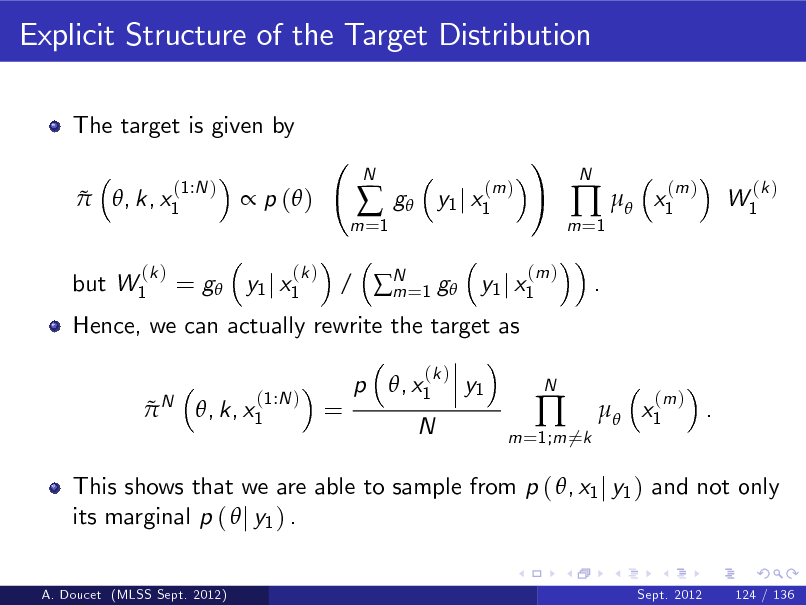

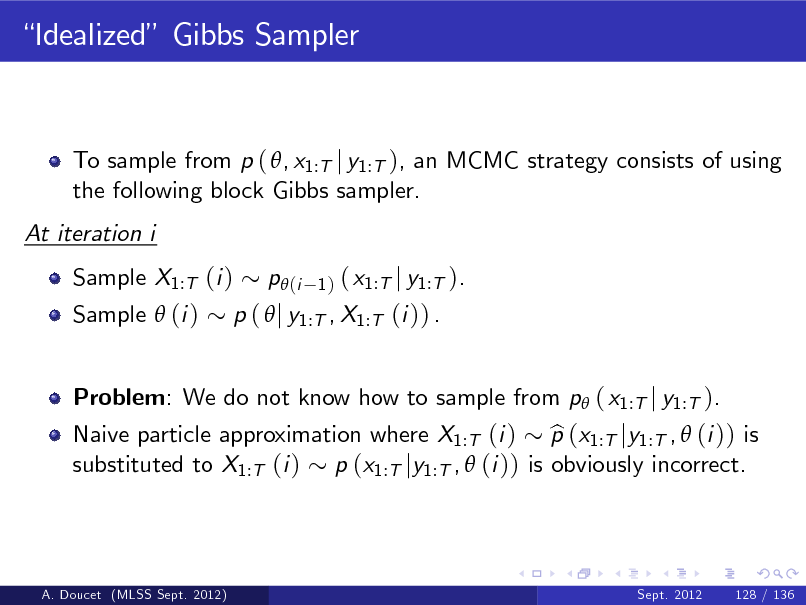

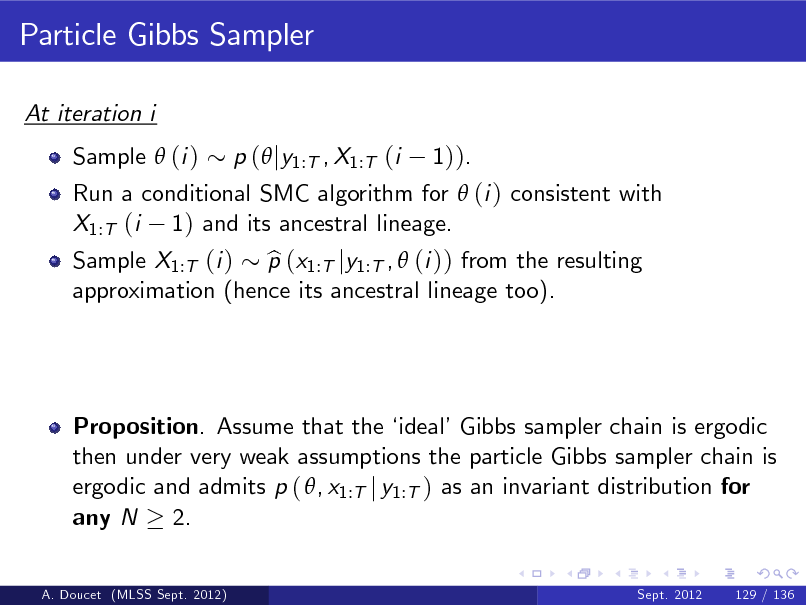

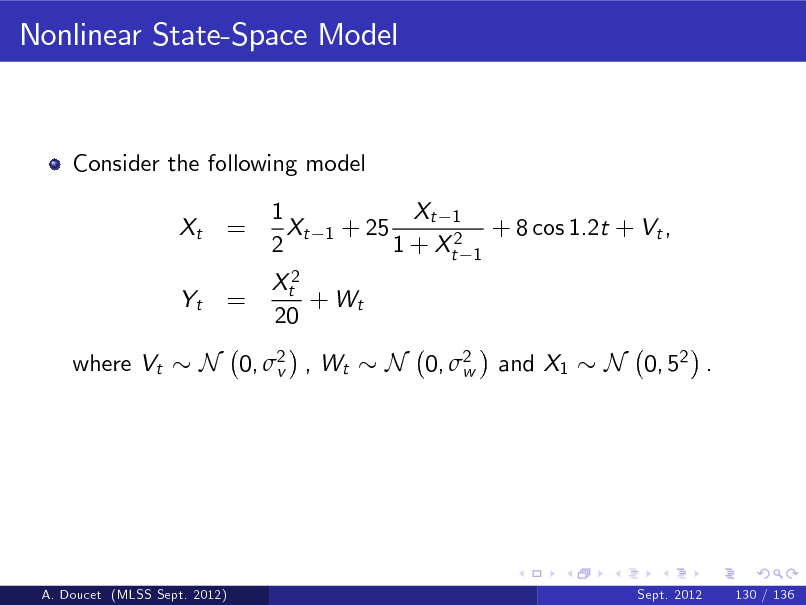

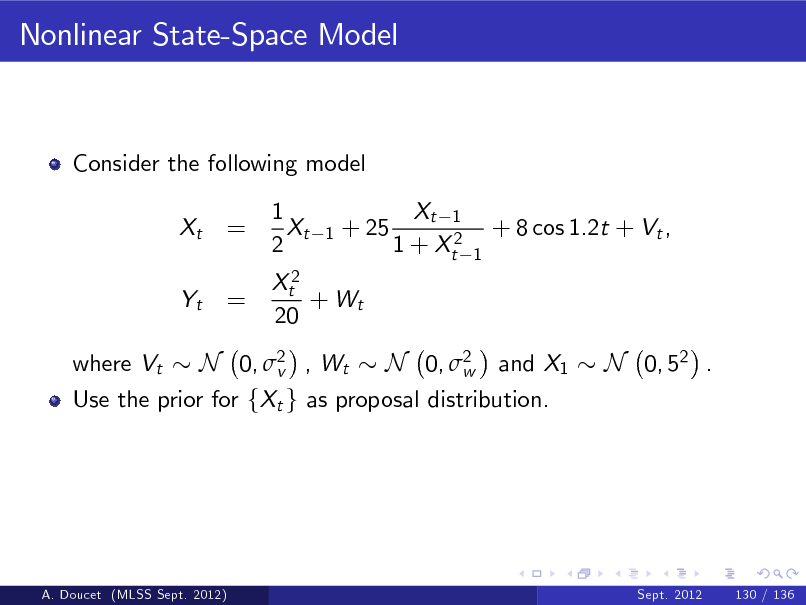

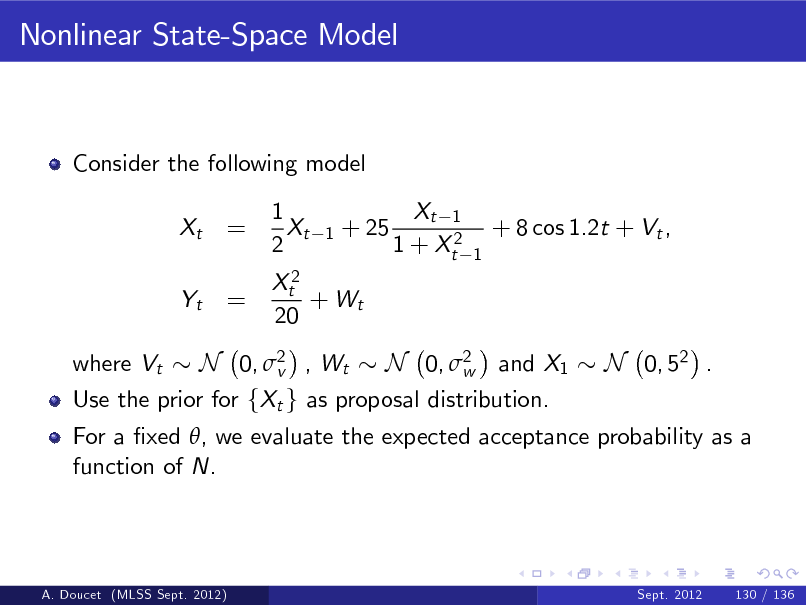

Introduce an arbitrary proposal distribution q ( xt j yt , xt approximation to p ( xt j yt , xt 1 ) .